Copilot is getting an overhaul during Microsoft Build. While the Copilot Wave 2 innovations that appeared in preview are becoming generally available, Microsoft is also making AI agents more agile. Copilot Tuning and multi-agent orchestration are meant to make agentic functions simple and effective.

We discussed the general features of Copilot Wave 2 in September. These features are now generally available, alongside new ‘reasoning’ agents in the form of Researcher and Analyst.

Tuning to perfection

Refining Copilot functionality is central to Build. Copilot Tuning is a new capability that lets organizations train AI on their own business data, workflows, and processes. Microsoft promises a no-code setup within Copilot Studio for domain-specific, accurate AI deployment. Agents built in Copilot Studio do not leave the Microsoft 365 service, and Microsoft will not use the data for further training.

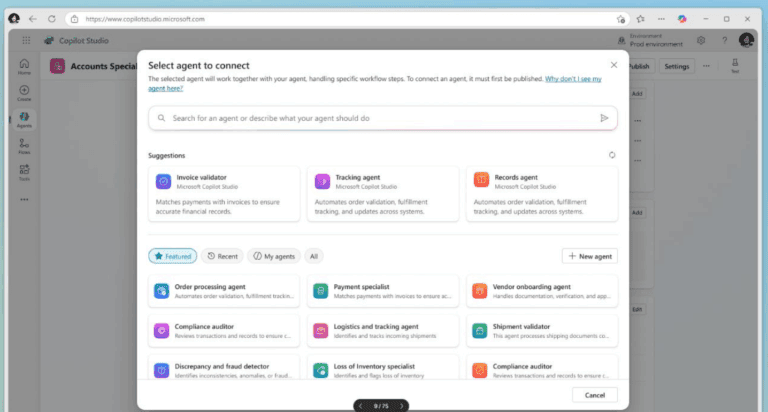

Microsoft cites a prediction that there will be 1.3 billion agents by 2028. It would be a shame not to let them talk to each other, seems to be the tech giant’s reasoning. That is why multi-agent orchestration within Copilot Studio is meant to combine the skills of multiple agents. This should significantly speed up repetitive tasks within HR, IT, and marketing, such as onboarding a new employee. The feature is currently in public preview, as is the newly announced Azure AI Foundry Models. Read more about that announcement below:

Read more: Microsoft makes Azure AI Foundry available with improved model tools

Existing improvements

Because this is the week of Microsoft’s annual Build event, the announcements about its own products include quite a few recaps of earlier innovations. These include computer use, which enables AI tools to control the end user’s PC (albeit to a limited extent). In addition, Microsoft, like virtually all AI players, has jumped on the Model Context Protocol bandwagon, released agent flows to automate tasks, and introduced deep reasoning for executing complex business processes.