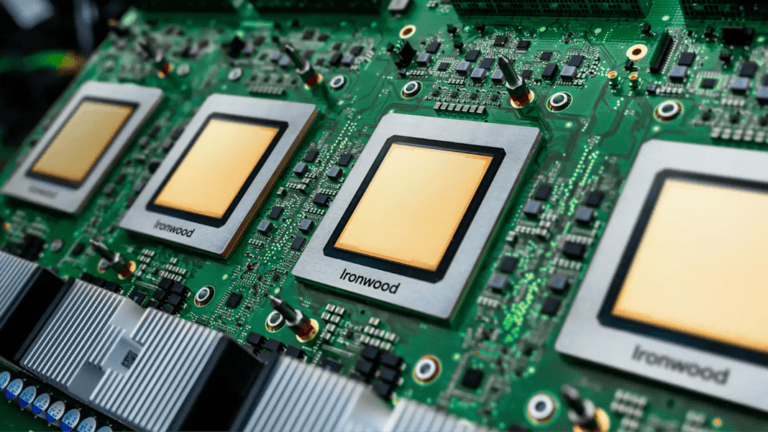

Following an announcement in April, Google is launching its seventh-generation Tensor Processing Unit (TPU). The chip, codenamed Ironwood, scales to 9,216 units in a single pod. Together with new Arm-based Axion instances, the company promises major performance improvements. Anthropic will be one of the first users, with access to up to one million TPUs.

Anthropic’s commitment is perhaps the most significant achievement of the Ironwood launch. Most details about the chip, such as its fourfold performance improvement over the sixth-generation TPU, were already shared at Google Cloud Next in April. Anthropic, creator of Claude, will have more than a gigawatt of capacity at its disposal in 2026.

James Bradbury, Head of Compute at Anthropic, emphasizes that the company needs both strong inference performance and scalable AI training. The infrastructure must also remain reliable. Google reports 99.999 percent uptime for its liquid-cooled systems since 2020. Optical Circuit Switching reroutes network traffic when problems occur, allowing workloads to continue without visible interruption.
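To put the uptime figure in perspective, it can be converted into a yearly downtime budget. The snippet below is a minimal sketch of that arithmetic; it assumes a 365-day year, and the variable names are illustrative rather than taken from Google.

```python
# Uptime figure quoted by Google in the article.
UPTIME = 0.99999

# Assumption: a non-leap year of 365 days.
MINUTES_PER_YEAR = 365 * 24 * 60

# The downtime budget is the fraction of the year not covered by the uptime figure.
downtime_minutes = (1 - UPTIME) * MINUTES_PER_YEAR

print(f"Allowed downtime: {downtime_minutes:.2f} minutes per year")
```

Under that assumption, 99.999 percent uptime leaves room for a little over five minutes of downtime per year.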

Ironwood as the AI inferencing powerhouse

Ironwood is built specifically for inferencing, that is, for running trained AI models in production. The focus is on speed and reliability, which are crucial for chatbots and coding assistants that need to respond quickly. Ironwood is therefore not explicitly intended to compete with the most powerful Nvidia Blackwell GPUs, which are currently the preferred hardware for AI training.

A single Ironwood pod connects 9,216 chips with an Inter-Chip Interconnect of 9.6 terabits per second. This network provides access to 1.77 petabytes of High Bandwidth Memory. According to Google, this delivers 118 times more FP8 ExaFLOPS than its closest competitor. Compared to its predecessor Trillium, Ironwood offers four times better performance for both training and inferencing. This is in addition to software improvements that end users may have implemented in the meantime.
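The pod-level figures can be broken down per chip with simple arithmetic. The sketch below assumes decimal units (1 PB = 1,000,000 GB) and assumes the 9.6 Tb/s interconnect figure is quoted per chip; the article states neither explicitly, and the variable names are illustrative.

```python
# Figures quoted in the article for a single Ironwood pod.
POD_CHIPS = 9_216
POD_HBM_PB = 1.77
ICI_TBIT_PER_S = 9.6

# Assumption: decimal units, so 1 PB = 1,000,000 GB.
GB_PER_PB = 1_000_000

# Share of the pod's High Bandwidth Memory that sits on each chip.
hbm_per_chip_gb = POD_HBM_PB * GB_PER_PB / POD_CHIPS

# Convert terabits per second to terabytes per second (8 bits per byte).
ici_tbyte_per_s = ICI_TBIT_PER_S / 8

print(f"HBM per chip: {hbm_per_chip_gb:.0f} GB")
print(f"ICI bandwidth: {ici_tbyte_per_s:.1f} TB/s")
```

Under these assumptions, each chip carries roughly 192 GB of HBM, and 9.6 Tb/s corresponds to 1.2 TB/s.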

The hardware comes with new software. Google Kubernetes Engine gets Cluster Director for maintenance and topology awareness. The open-source MaxText framework now supports advanced training techniques. The Inference Gateway optimizes load balancing, which according to Google reduces time-to-first-token latency by up to 96 percent and lowers serving costs by up to 30 percent.

Axion expands with new instances

In addition to Ironwood, Google is introducing two new Axion-based instances. The Arm-based CPU focuses on general workloads such as data analysis and web serving. N4A instances offer up to 64 virtual CPUs with 512 gigabytes of DDR5 memory. C4A metal provides dedicated physical servers with 96 vCPUs and 768 gigabytes of memory.

Video platform Vimeo saw 30 percent better performance for transcoding workloads compared to x86 VMs. ZoomInfo saw a 60 percent improvement in price-performance ratio for Java services. In other words, Axion is not only faster, but also appears to be significantly more cost-effective than the x86-based alternatives.

All of this falls under Google’s idea of an AI Hypercomputer, which is essentially the sum of hardware, software, and a revenue model designed to make AI deployment as simple and scalable as possible. Google cites IDC research that measured a 28 percent reduction in IT costs and 55 percent more efficient IT teams. To achieve this, Google is undergoing a transformation behind the scenes. The company now uses 400-volt DC power delivery to supply up to one megawatt per rack, ten times more than conventional server racks.