Huawei opens fire on Google and Nvidia with two proprietary AI chips built on a universal Da Vinci architecture. Both chips carry the Ascend name and mark a new path that Huawei is taking to turn AI innovation to its advantage.

The new Ascend AI chips share a universal Da Vinci architecture, so developers only need to write their code once for multiple applications. According to Dang Wenshuan, Chief Strategy Architect at Huawei, Da Vinci provides two options: scale-out and scale-in. Developers can start small and grow with the same code, or go the other way and scale from the largest scenario down to the smallest. The chips offer room for a lot of memory with minimal latency.

Ascend 910

The top product is the Huawei Ascend 910, with which Huawei wants to compete with the latest TPUs from Google and the Tesla GPUs from Nvidia. The chip is manufactured on a 7 nm process (presumably at TSMC) and offers up to 256 teraflops of computing power for AI applications. In its presentation, Huawei compares the chip with the Nvidia Tesla V100 (125 teraflops) and the Google TPU 3.0 (90 teraflops).

Huawei also offers an Ascend Cluster with 1,024 chips on board, providing 256 petaflops of computing power and 32 TB of HBM. By way of comparison, a Google pod with TPU 3.0 chips delivers up to 100 petaflops. The only problem is that Huawei does not make clear what these figures are based on. Brute force alone says little: what matters is the numerical precision at which the calculations are performed, and Huawei will not give any details about this for the time being. Systems with the Ascend 910 will be available in the Huawei hybrid cloud from Q2 2019.
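The cluster figure appears to follow directly from the per-chip figure. As a rough sanity check, the sketch below multiplies out the numbers quoted above. It assumes perfect linear scaling with no interconnect overhead, and it assumes that Huawei counts 1 petaflop as 1,024 teraflops and 1 TB as 1,024 GB (neither is stated in the presentation). All variable and function names are illustrative.

```python
# Peak figures as quoted in the article; the numerical precision behind
# them is not specified, so the comparisons are indicative only.
ASCEND_910_TFLOPS = 256
TESLA_V100_TFLOPS = 125
TPU_V3_TFLOPS = 90
CLUSTER_CHIPS = 1024
CLUSTER_HBM_TB = 32
TPU_POD_PFLOPS = 100


def cluster_pflops(chips, tflops_per_chip, tflops_per_pflop=1000):
    """Aggregate peak throughput, assuming perfect linear scaling."""
    return chips * tflops_per_chip / tflops_per_pflop


# Decimal units (1 PFLOPS = 1,000 TFLOPS) versus the assumed binary rounding.
decimal_pflops = cluster_pflops(CLUSTER_CHIPS, ASCEND_910_TFLOPS)
binary_pflops = cluster_pflops(CLUSTER_CHIPS, ASCEND_910_TFLOPS, 1024)

# HBM per chip, assuming 1 TB = 1,024 GB and an even split across chips.
hbm_per_chip_gb = CLUSTER_HBM_TB * 1024 / CLUSTER_CHIPS

# Per-chip ratios against the two competitors named in the presentation.
ratio_v100 = ASCEND_910_TFLOPS / TESLA_V100_TFLOPS
ratio_tpu = ASCEND_910_TFLOPS / TPU_V3_TFLOPS

# Cluster versus a Google TPU 3.0 pod, using the quoted 256 petaflops.
ratio_pod = binary_pflops / TPU_POD_PFLOPS

print(f"Cluster peak (decimal): {decimal_pflops:.1f} PFLOPS")
print(f"Cluster peak (binary):  {binary_pflops:.1f} PFLOPS")
print(f"HBM per chip:           {hbm_per_chip_gb:.0f} GB")
print(f"Ascend 910 vs V100:     {ratio_v100:.2f}x")
print(f"Ascend 910 vs TPU 3.0:  {ratio_tpu:.2f}x")
print(f"Cluster vs TPU pod:     {ratio_pod:.2f}x")
```

Under these assumptions the quoted 256 petaflops is simply 1,024 times the per-chip peak, which suggests it is a theoretical maximum rather than a measured result.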

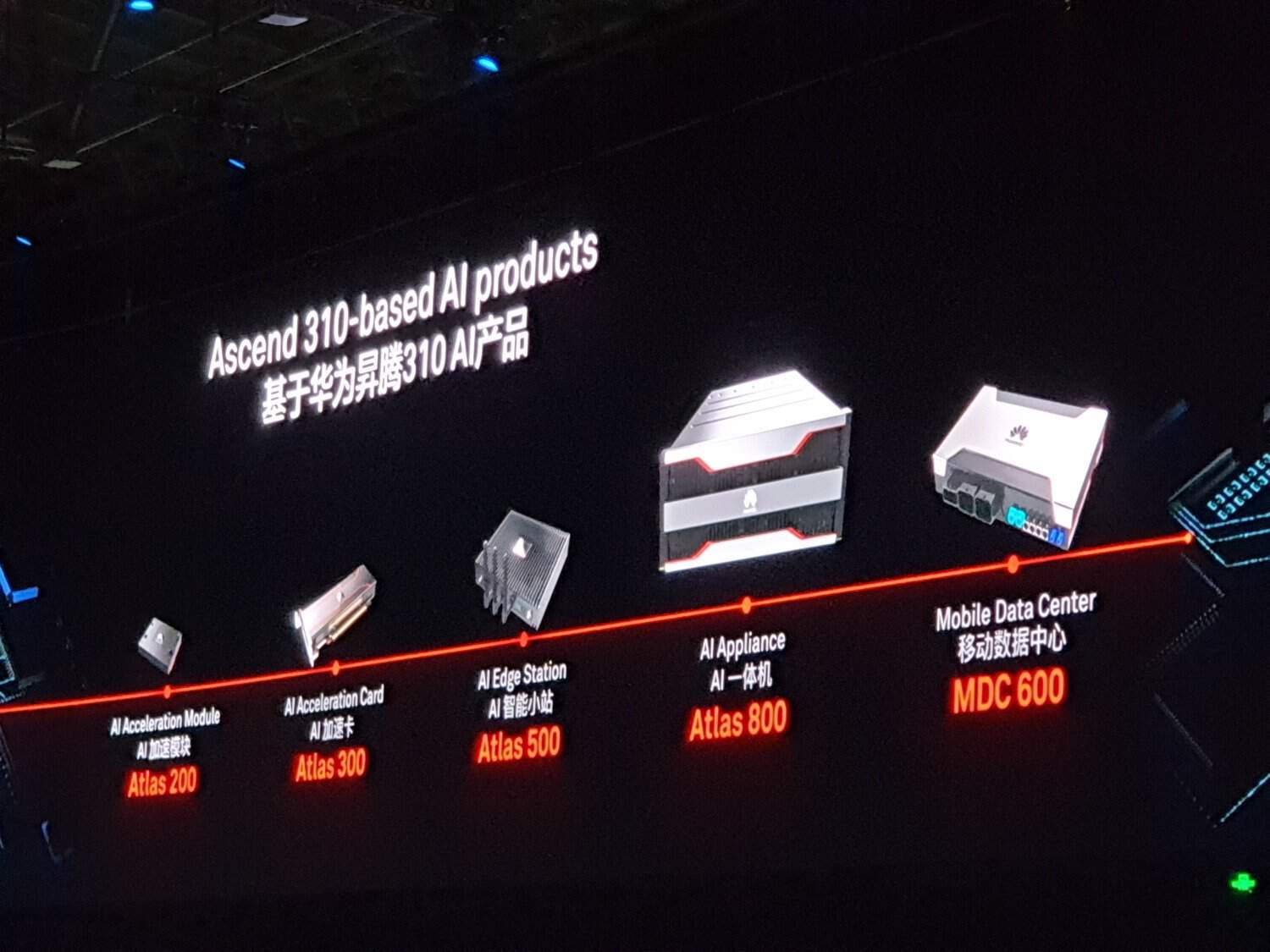

Ascend 310

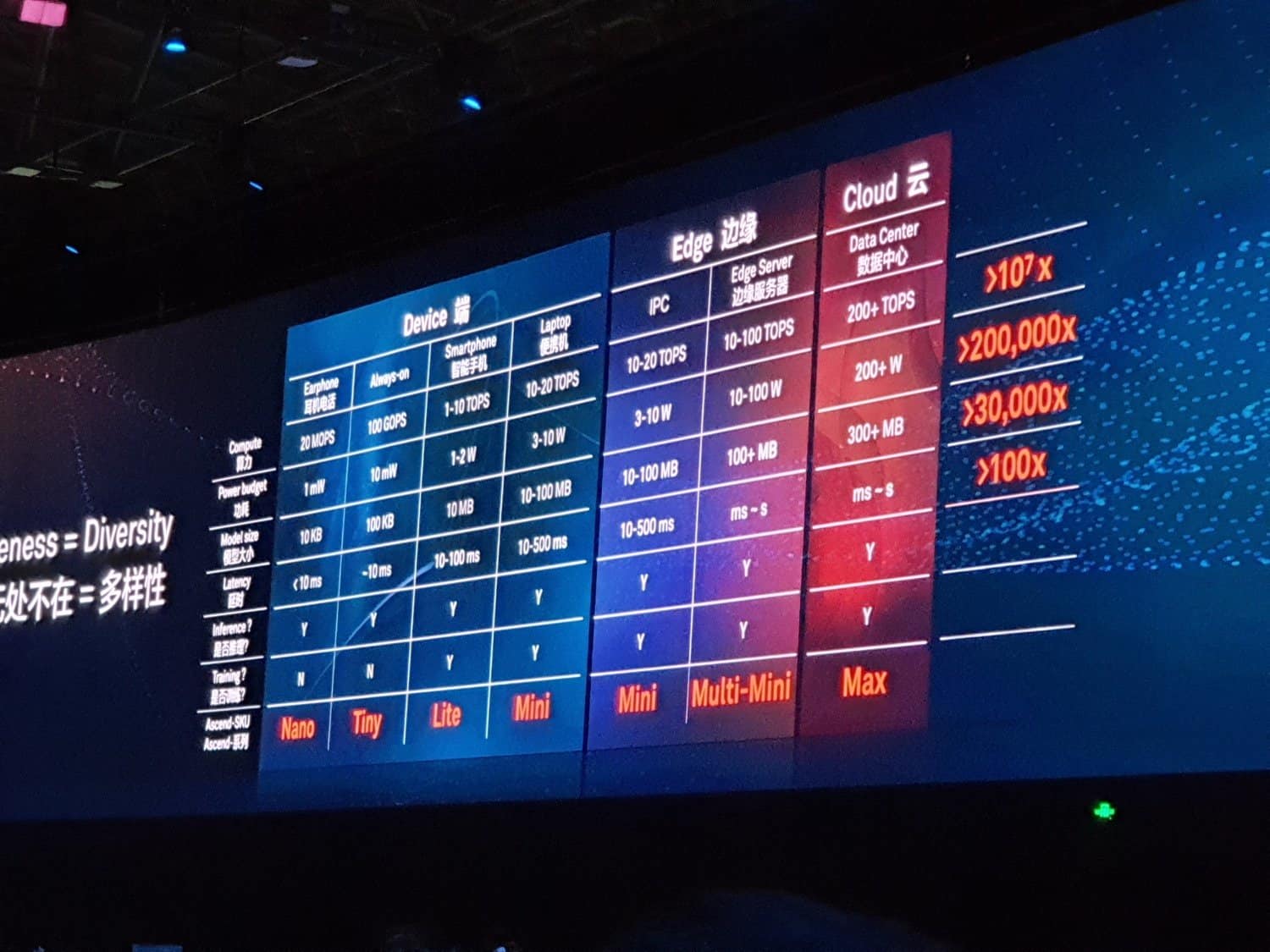

Today Huawei is also announcing the flexible Ascend 310. This AI chip is manufactured on a 12 nm process and is available now in various systems. With Ascend 310, Huawei targets a variety of applications, from small IoT devices to data centres. For earphones, always-on devices, smartphones and laptops, it is launching the Nano, Tiny, Lite and Mini versions, which range from 0.02 to 20 teraflops with latencies from less than 10 ms to 500 ms.

For Edge applications, the Ascend 310 comes in two flavours: Mini and Multi-Mini. The latter combines multiple Mini chips in a compact Edge server with performance of up to 100 teraflops and power consumption of up to 100 watts. The Ascend 310 also gets a cloud variant called Max, with 200+ teraflops of computing power.

With Ascend 310, Huawei wants to offer chips for a wide range of AI applications. Thanks to the different (scalable) variants, the cost per scenario can be kept to a minimum. Because the architecture is the same across the different chips, developers hardly need to change their code. Ascend 310 products will be launched this year in five variants with various form factors, including an AI acceleration card with a PCI Express connection.