Researchers at Microsoft have developed BugLab, an autonomous AI tool that detects errors in programming code. Catching such errors can help prevent security incidents.

BugLab is an autonomous tool for detecting and fixing programming errors. "Autonomous" refers to how the tool is trained: instead of learning from existing datasets of programming errors, it trains itself without supervision.

Microsoft chose this self-supervised approach because suitable training data is lacking. While plenty of source code is available for training bug-finding models, annotated examples of bugs are scarce.

Self-training with programmer hints

Instead of training on existing bugs, the tool trains itself by playing so-called hide-and-seek games and by exploiting the natural language hints that programmers leave in their code, such as variable names and comments.
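To make "natural language hints" concrete, here is a minimal Python sketch, our own illustration rather than BugLab code, that pulls identifier subwords and comment words out of a snippet with the standard `tokenize` module. The function name `natural_language_hints` and its splitting rules are assumptions; BugLab's actual models are neural networks that learn from such signals rather than from a fixed word list.

```python
import io
import keyword
import re
import tokenize

# Splits an identifier chunk into words: "itemList" -> "item", "List";
# "HTTPServer" -> "HTTP", "Server"; runs of digits become their own word.
SUBWORD = re.compile(r"[A-Z]?[a-z]+|[A-Z]+(?![a-z])|\d+")


def natural_language_hints(source):
    """Collect lowercase words from identifiers and comments in Python source."""
    words = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NAME and not keyword.iskeyword(tok.string):
            # snake_case is split on "_", camelCase by the SUBWORD pattern
            for chunk in tok.string.split("_"):
                words.extend(w.lower() for w in SUBWORD.findall(chunk))
        elif tok.type == tokenize.COMMENT:
            # keep only the alphabetic words of the comment text
            words.extend(re.findall(r"[a-z]+", tok.string.lower()))
    return words
```

For a function `is_empty(itemList)` with the comment `# true when no items remain`, the sketch yields words such as `is`, `empty`, `item`, `list`, `true` and `remain`: the kind of intent signal a learned detector can compare against what the code actually does.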

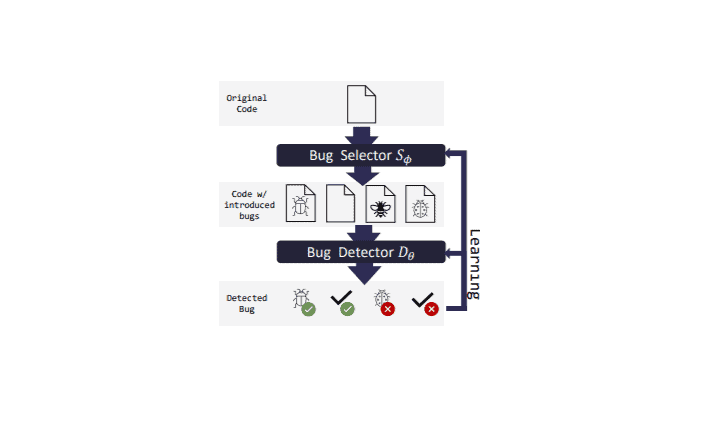

BugLab consists of two models: a bug selector and a bug detector. The bug selector decides whether to introduce a bug, where to introduce it, and in what form. The code is then rewritten according to these choices. Next, the bug detector tries to determine whether a bug was introduced and, if so, to locate and fix it.
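The hide-and-seek round described above can be sketched in Python as follows. This is a toy illustration under stated assumptions, not BugLab's implementation: the selector chooses at random instead of being a learned model, its only bug "form" is swapping a comparison operator for a near miss (for example `<` for `<=`), and `detect_bug` is a hypothetical placeholder that always answers "no bug". In the real system both roles are neural networks trained against each other, and the rewrites cover more bug types.

```python
import ast
import random

# Toy bug "forms": replace a comparison operator with a near miss.
# (An assumption for illustration; the real tool considers more rewrites.)
NEAR_MISS = {
    ast.Lt: ast.LtE, ast.LtE: ast.Lt,
    ast.Gt: ast.GtE, ast.GtE: ast.Gt,
    ast.Eq: ast.NotEq, ast.NotEq: ast.Eq,
}


def rewrite_sites(tree):
    """Return every (Compare node, operator index) the selector could rewrite."""
    sites = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Compare):
            for i, op in enumerate(node.ops):
                if type(op) in NEAR_MISS:
                    sites.append((node, i))
    return sites


def select_bug(source, rng, p_bug=0.5):
    """Toy bug selector: decide whether, where, and in what form to add a bug.

    Returns (code, truth), where truth is None (no bug) or a tuple
    (line number, original operator name, replacement operator name).
    """
    tree = ast.parse(source)
    sites = rewrite_sites(tree)
    if not sites or rng.random() >= p_bug:  # whether
        # Unparse in both branches so formatting never reveals a rewrite.
        return ast.unparse(tree), None
    node, i = rng.choice(sites)  # where
    old = type(node.ops[i])
    new = NEAR_MISS[old]  # in what form
    node.ops[i] = new()
    return ast.unparse(tree), (node.lineno, old.__name__, new.__name__)


def detect_bug(code):
    """Hypothetical detector placeholder; a real one would be a trained model.

    This baseline always predicts "no bug".
    """
    return None


def play_round(source, rng):
    """One hide-and-seek round: the detector wins if it recovers the truth."""
    code, truth = select_bug(source, rng)
    return code, truth, detect_bug(code) == truth
```

With `p_bug=1.0`, calling `select_bug` on `return a < b` inside a function returns code containing `a <= b` plus the line and operator names of the change, which is exactly what a detector would have to recover. A selector that is rewarded whenever the detector loses pushes the game toward ever harder-to-spot bugs.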

The tool focuses on finding hard-to-detect errors. It does not target well-known error types, since traditional program analysis tools are already widely available for those.

Test case

In a test case, the researchers evaluated the tool on a dataset of 2,374 real-life bugs from Python packages. BugLab automatically detected and fixed about 26 percent of them, roughly 620 bugs. In addition, it discovered 19 previously unknown bugs.

However, Microsoft notes that its models also produced many false positives. As a result, the tool is not yet ready for practical use.