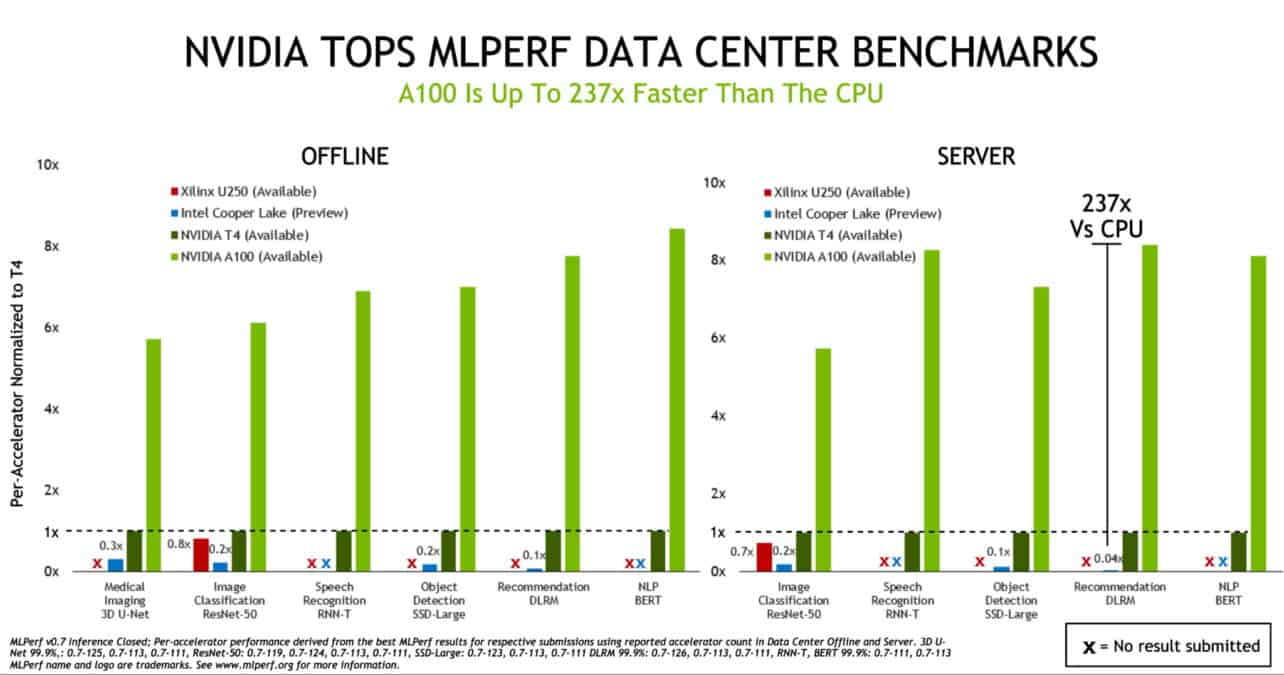

Nvidia’s A100 GPGPU has broken several records in the MLPerf benchmark for machine learning applications. The new chip performs roughly six to eight times better than its Turing-based predecessor.

In the MLPerf benchmark, the A100 GPGPU was compared to the Nvidia T4, as well as the Xilinx U250 and Intel’s Cooper Lake processors. The A100 performed best in each of the tests.

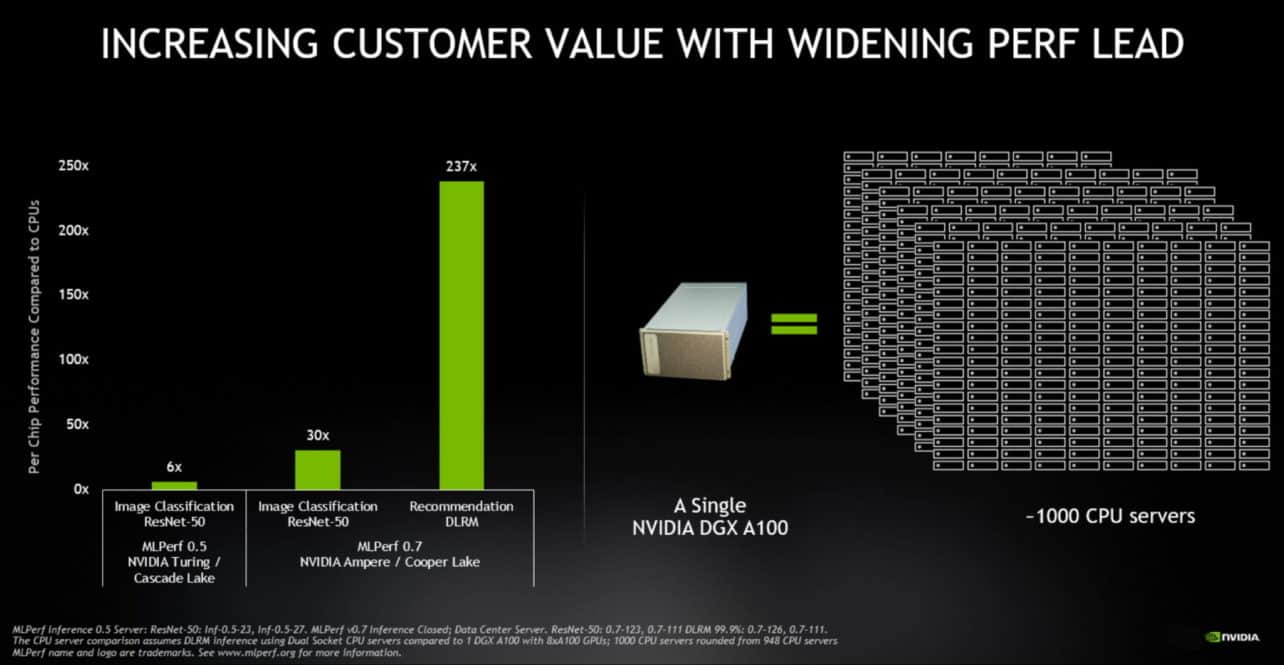

Nvidia likes to compare these results to Intel’s CPUs, as shown in its blog post. Compared to those processors, Nvidia’s new graphics processor performs up to 237 times better. The chip designer says that a single Nvidia DGX A100 system, equipped with eight A100 chips, is nearly as fast as a thousand dual-socket servers with Intel processors.

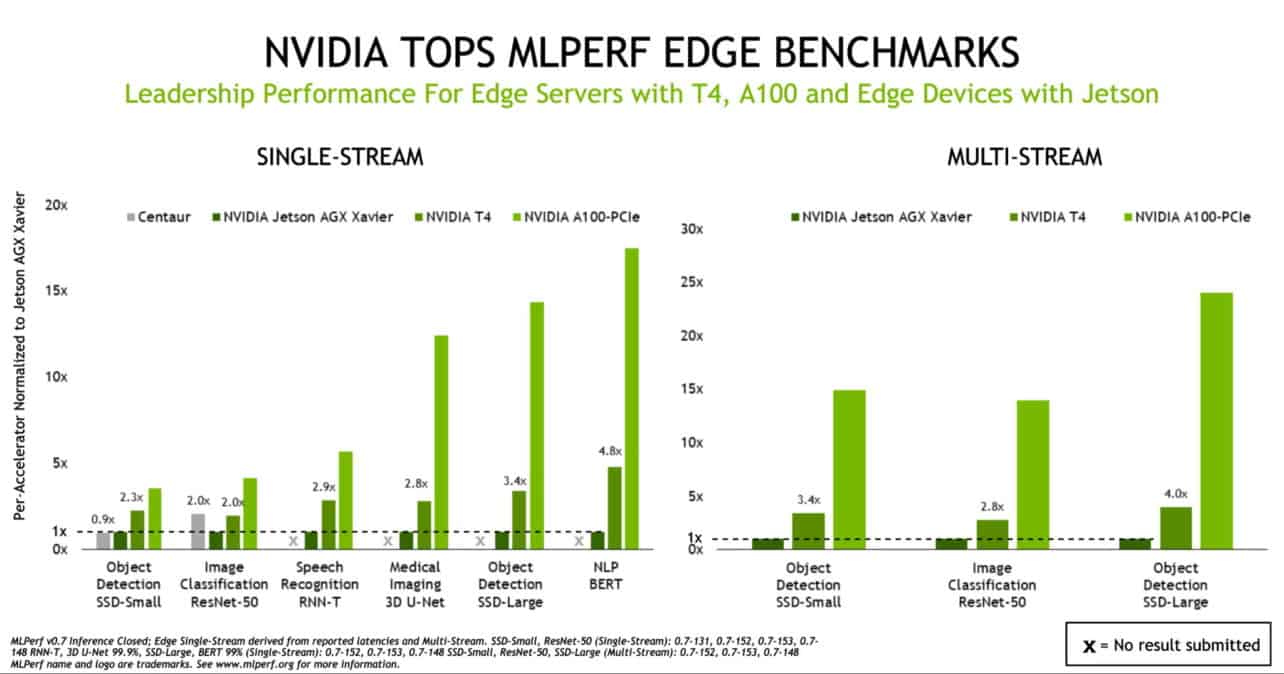

The speed of the A100 chip is also reflected in the MLPerf edge benchmarks. Again, the A100 performs much better than the T4, as shown in the table below. The Jetson AGX Xavier chip, which consumes only 10 watts, also shows impressive performance.

MLPerf

MLPerf was released in 2018 to test how well different systems perform in various AI applications. It’s backed by multiple parties such as Amazon, Baidu, Facebook, Google, Microsoft and the universities of Harvard and Stanford.

The Nvidia A100 was launched in June. Several organisations have since implemented the GPGPUs in their servers. Google Cloud started renting out servers equipped with the technology in July.