There appears to be a way to check whether an image was generated by AI. Remarkably, the method is already used in astronomical research.

Technological advances have made AI-generated images almost impossible to recognize. Cybercriminals exploit this by using deepfakes, generated images of real people, in social engineering attacks.

Difference in light reflection

Researchers at the University of Hull have recently found a way to spot the fakes after all. Their method looks at the light reflected in the eyes of the people pictured. Researchers use the same method to study galaxies.

The principle relies on how light naturally reflects in the eyes: in a photo of a real person, the reflection takes the same shape in both eyes. The researchers found that AI does not take this into account, so the reflection often has a different shape in each eye.

For the study, an automated tool was developed that checks whether the reflection in the eyes is natural. The result is expressed as a Gini score, where a score close to zero indicates a natural reflection and a score close to one may indicate a fake.
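To give a feel for what a Gini score measures, here is a minimal sketch in Python. It computes the standard Gini coefficient over a list of pixel brightness values: 0 means the light is spread evenly, and values approaching 1 mean it is concentrated in very few pixels. The article does not describe how the researchers' tool turns this into its final score, so the `reflection_mismatch` helper, which simply compares the two eyes, is a hypothetical illustration and not the tool's actual method.

```python
def gini(values):
    """Standard Gini coefficient of non-negative values (e.g. pixel brightness)."""
    xs = sorted(float(v) for v in values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # G = sum_i (2i - n - 1) * x_i / (n * sum(x)), with x sorted ascending, i = 1..n
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1))
    return weighted / (n * total)


def reflection_mismatch(left_eye_pixels, right_eye_pixels):
    """Hypothetical helper (an assumption, not from the study):
    absolute difference between the Gini coefficients of the two eyes."""
    return abs(gini(left_eye_pixels) - gini(right_eye_pixels))


# Evenly lit patch versus a patch with all light in one pixel.
even = [1, 1, 1, 1]
spot = [0, 0, 0, 1]
print(gini(even), gini(spot), reflection_mismatch(even, spot))
```

In this toy setup, two eyes with similar reflections give a mismatch near zero, while clearly different reflections push the value up. Any cut-off for calling an image fake would have to be chosen separately and is not given in the article.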

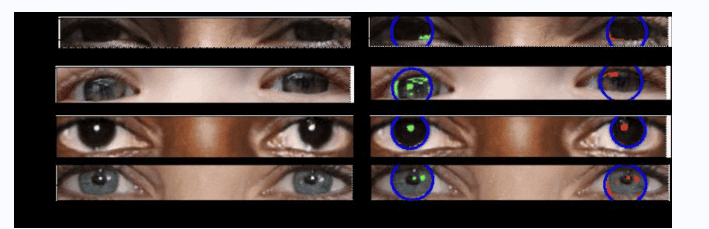

These images were created by AI. The reflections in the left and right eyes do not follow the same pattern. Source: Adejumoke Owolabi

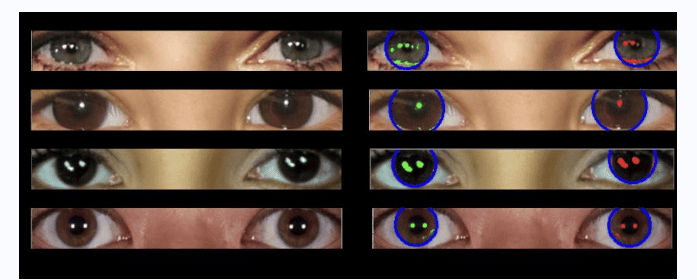

These are pictures of real people. Both eyes clearly show the same reflection pattern. Source: Adejumoke Owolabi

The basis for further improvement

The method is not completely accurate and can produce false positives and false negatives. It is also limited in that it requires a clear close-up of the eyes.

Developers of AI image generators could also use the research to make their images more realistic in the near future. Researchers, in turn, can build on it to improve detection tools. Only a small number of such tools are currently available, and most have limitations of their own.
