McAfee wants to take a tougher stance against deepfakes. With the introduction of Project Mockingbird, the security company is focusing on combating deepfake audio created with generative AI, such as cloned voices.

These include deepfakes of the voices of well-known people, such as newscasters. In this way, McAfee hopes to protect the public from fake news, counter scams and curb attempts to manipulate public opinion.

Underlying technology

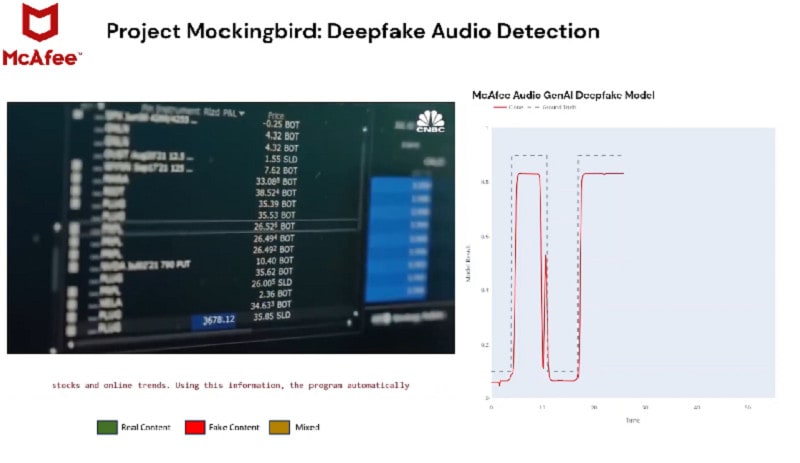

More specifically, Project Mockingbird includes the security specialist's in-house, AI-powered Deepfake Audio Detection technology. This technology determines whether audio was actually spoken by a human by analysing the spoken words.

The technology takes raw audio data, for example from a video, and feeds it into a classification model. Based on potentially harmful characteristics, this model determines whether the data falls into a particular category. Then, drawing on McAfee's training data, the model decides whether the audio was generated by AI or is genuine content.
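To make the general idea of a binary audio classifier concrete, here is a minimal Python sketch. It is not McAfee's implementation, which has not been published. The two features (RMS energy and zero-crossing rate), the weights, the bias and the 0.5 threshold are all hypothetical placeholders; a real detector would use far richer features and a model trained on labelled genuine and AI-generated recordings.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class AudioFeatures:
    rms_energy: float          # loudness of the clip
    zero_crossing_rate: float  # fraction of adjacent sample pairs that change sign


def extract_features(samples: Sequence[float]) -> AudioFeatures:
    """Compute two simple, illustrative features from raw PCM samples in [-1, 1]."""
    if not samples:
        raise ValueError("need at least one sample")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    zcr = crossings / max(len(samples) - 1, 1)
    return AudioFeatures(rms, zcr)


# HYPOTHETICAL parameters: a real model would learn these from training data.
WEIGHTS = {"rms_energy": -1.5, "zero_crossing_rate": 4.0}
BIAS = -1.0


def score(features: AudioFeatures) -> float:
    """Logistic-regression score: estimated probability that the clip is AI-generated."""
    z = (BIAS
         + WEIGHTS["rms_energy"] * features.rms_energy
         + WEIGHTS["zero_crossing_rate"] * features.zero_crossing_rate)
    return 1.0 / (1.0 + math.exp(-z))


def classify(samples: Sequence[float], threshold: float = 0.5) -> str:
    """Map raw audio to one of two categories using the (hypothetical) model."""
    p = score(extract_features(samples))
    return "ai_generated" if p >= threshold else "human"


if __name__ == "__main__":
    # A 0.1-second, 440 Hz test tone sampled at 16 kHz.
    tone = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(1600)]
    print(classify(tone), round(score(extract_features(tone)), 3))
```

The pipeline mirrors the steps described above: raw samples go in, features are extracted, the model produces a score, and a threshold turns that score into a category. With made-up weights, the labels it prints say nothing about real deepfakes.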

McAfee already uses this classification method to detect malware and to categorise web content. In this case, however, it analyses the audio data itself rather than files.

According to McAfee, the technology can determine with up to 90 per cent accuracy whether audio is fake.

Recent research on deepfakes

McAfee has long warned about the harm deepfake technology could do to society. A U.S. survey conducted in December 2023 found that 68 per cent of American respondents fear the consequences of deepfakes. About one-third (33 per cent) say they have already encountered deepfakes.

Respondents' top concerns about the impact of deepfakes include: influencing elections (52 per cent), cyberbullying (44 per cent), undermining trust in the media (48 per cent), impersonating celebrities (49 per cent), creating fake pornography (37 per cent), obscuring historical facts (43 per cent) and criminals using deepfakes in cyber scams to obtain financial or personal information (16 per cent).
