EPAM is building its DIAL platform to become one of the most advanced enterprise AI orchestration systems in operation. With its recent DIAL 3.0 release, it addresses how to harness AI at scale without sacrificing governance, cost control, or transparency. We spoke with Arseny Gorokh, VP of AI Enablement & Growth at EPAM, about the platform.

DIAL might not be the best-known technology out there, but it has some history to build on. When ChatGPT launched in late 2022, EPAM, like many technology providers, found itself in uncharted territory. With nearly 56,000 engineers, the company saw immediate enthusiasm among employees for experimenting with generative AI. Yet this enthusiasm also created significant risk. Without oversight, compliance controls, or cost management, unchecked AI usage could quickly spiral into a liability.

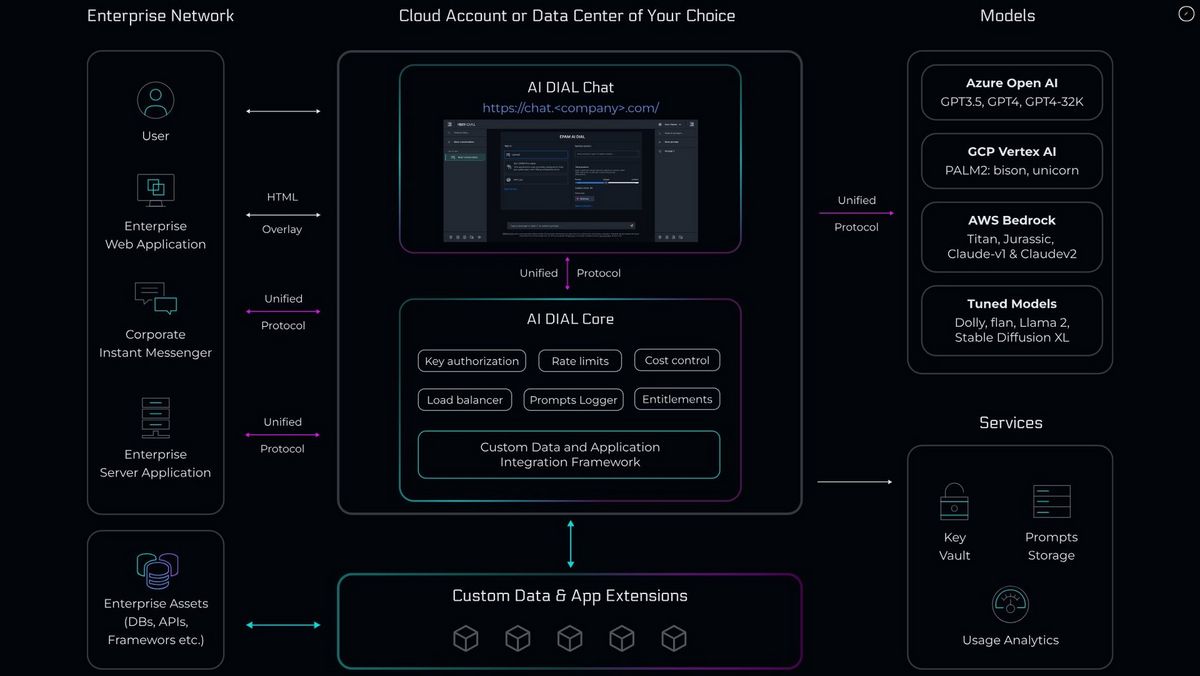

This challenge gave rise to the first version of DIAL, short for Deterministic Integrator of Applications and Language models. Initially, it served a straightforward purpose: to act as middleware between employees and language models, enforcing governance rules and ensuring responsible use. As Gorokh recalls, they quickly realized that they should not rely on employees experimenting with ChatGPT without proper oversight, and a framework had to be in place to manage usage responsibly.

Why open source became the foundation

From the beginning, EPAM understood that keeping pace with the velocity of AI innovation would not be possible with a closed, proprietary approach. Instead, the company decided to open source DIAL, recognizing that every week brought new announcements in the AI space and that attempting to build everything internally would mean falling behind within weeks. Open sourcing offered two important advantages. It allowed the platform to evolve faster by drawing on contributions from clients and partners, and it reduced vendor lock-in for organizations that wanted the flexibility to choose their own model strategy.

One client, for example, developed functionality to run small language models entirely on local infrastructure, which was critical for processing highly confidential information that could not leave company premises. Because the client contributed this back to the platform, other enterprises with similar requirements could benefit immediately, which highlights how open-source collaboration accelerates adoption while addressing specific industry needs.

From simple control to orchestration

The second major milestone in the platform’s evolution was DIAL 2.0, which expanded its role beyond governance and access control. This version introduced guardrails, routing mechanisms, and tooling for building applications on top of language models. But the real leap came with DIAL 3.0, which addresses a much more fundamental enterprise dilemma: how to select the right model for the right use case.

Rather than locking organizations into a single provider, the platform now supports multi-model orchestration. It can route queries simultaneously to commercial services such as OpenAI or Gemini, as well as to locally hosted open-source alternatives. This enables the benchmarking of performance, latency, cost, and accuracy in real-world conditions. According to Gorokh, DIAL provides the backend that lets enterprises assess efficiency, costs, latency, and user feedback across different models, so they can make decisions based on actual data rather than marketing claims. For European organizations, the orchestration layer also supports compliance with strict data residency and sovereignty requirements: sensitive queries remain within local data centers, while less sensitive tasks can be routed to commercial providers for optimal performance.
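The routing idea described above can be illustrated with a minimal sketch. This is not DIAL's actual API or implementation; the `Backend` type, the `pick_backend` function, the backend names, and all cost and latency figures are hypothetical placeholders chosen for illustration. The sketch assumes a simple policy: sensitive queries may only go to locally hosted models, and among the eligible backends the cheapest one wins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    """Hypothetical description of one model endpoint (not a DIAL type)."""
    name: str
    local: bool                 # True if hosted in the organization's own data center
    cost_per_1k_tokens: float   # placeholder figure, not a real price
    avg_latency_ms: float       # placeholder figure, not a measurement


def eligible_backends(backends, sensitive):
    """Sensitive queries may only be sent to locally hosted models."""
    return [b for b in backends if b.local] if sensitive else list(backends)


def pick_backend(backends, sensitive):
    """Pick the cheapest eligible backend, breaking ties on latency."""
    candidates = eligible_backends(backends, sensitive)
    if not candidates:
        raise ValueError("no backend satisfies the residency constraint")
    return min(candidates, key=lambda b: (b.cost_per_1k_tokens, b.avg_latency_ms))


# Illustrative catalogue; every value here is made up.
BACKENDS = [
    Backend("commercial-a", local=False, cost_per_1k_tokens=0.50, avg_latency_ms=400),
    Backend("commercial-b", local=False, cost_per_1k_tokens=0.30, avg_latency_ms=600),
    Backend("local-open-model", local=True, cost_per_1k_tokens=0.80, avg_latency_ms=900),
]

if __name__ == "__main__":
    print(pick_backend(BACKENDS, sensitive=True).name)
    print(pick_backend(BACKENDS, sensitive=False).name)
```

A real orchestration layer would presumably weigh accuracy and user feedback as well, and would classify sensitivity automatically rather than take it as a flag; the sketch only shows how a residency constraint can act as a hard filter before any cost or performance optimization.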

Business value through knowledge interaction

Perhaps the most significant change in DIAL 3.0 is its shift from chatbot enablement to deep business integration. EPAM’s vision now revolves around the idea of “real talk to your data,” meaning the platform is designed to enable direct interaction not just with unstructured text but also with structured enterprise information. Traditional knowledge management approaches, such as knowledge graphs, are being reimagined with generative AI at the core, creating new possibilities for how enterprises can navigate and utilize their information assets.

The system can work with massive datasets, distinguish between structured and unstructured data types, and orchestrate workflows across multiple AI agents and models. The result is that knowledge workers can interrogate data repositories directly, executives can test ideas with AI-assisted insights, and enterprises can embed generative AI into critical workflows without losing transparency or governance.

Testing the limits of large models

At the same time, EPAM emphasizes that language models are not treated as infallible sources of truth. On the contrary, the importance of orchestration stems from the fact that raw outputs often remain unreliable. As part of reliability testing for one of EPAM’s customers, consumer-grade large language models showed consistent inaccuracies when answering country gross domestic product (GDP) queries, including misidentified countries, mismatched years, and unreliable statistical outputs. Gorokh notes that models often fall short of providing fully correct answers, which is why DIAL focuses on adding routing, validation, and assessment mechanisms. When new model versions are released, the platform’s monitoring capabilities quickly evaluate their improvements in terms of cost, accuracy, and latency, and they are integrated into the orchestration layer only if they deliver measurable gains.
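The "only if they deliver measurable gains" rule can be made concrete with a small sketch. The article does not say how DIAL defines a measurable gain, so everything here is an assumption: the `Metrics` type, the `should_adopt` function, the 2% threshold, and the policy that a candidate must improve at least one of accuracy, cost, or latency without regressing on the others.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    """Hypothetical evaluation results for one model on a fixed test set."""
    accuracy: float     # fraction of correct answers, higher is better
    cost: float         # cost per evaluation run, lower is better
    latency_ms: float   # average response time, lower is better


def relative_gains(current, candidate):
    """Relative improvement per metric; positive always means 'better'."""
    return {
        "accuracy": (candidate.accuracy - current.accuracy) / current.accuracy,
        "cost": (current.cost - candidate.cost) / current.cost,
        "latency": (current.latency_ms - candidate.latency_ms) / current.latency_ms,
    }


def should_adopt(current, candidate, min_gain=0.02):
    """Assumed policy: no metric regresses and at least one improves by min_gain."""
    gains = relative_gains(current, candidate)
    no_regression = all(g >= 0 for g in gains.values())
    real_improvement = any(g >= min_gain for g in gains.values())
    return no_regression and real_improvement


if __name__ == "__main__":
    current = Metrics(accuracy=0.80, cost=1.0, latency_ms=500)
    candidate = Metrics(accuracy=0.85, cost=1.0, latency_ms=500)
    print(should_adopt(current, candidate))
```

In practice an organization might accept a small regression in one metric in exchange for a large gain in another; the strict no-regression rule is simply the easiest policy to state and test.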

Today, DIAL operates at a scale that few enterprise AI platforms can claim, Gorokh explains. It supports tens of thousands of active users across dozens of languages and integrates a wide range of models. This operational scope provides EPAM with valuable insights into adoption patterns, revealing which industries are progressing rapidly, which use cases deliver real business value, and how cost optimization strategies are implemented in practice. Its modular architecture enables rapid integration of new technologies, as demonstrated when DeepSeek’s Chinese model launched. Within days, DIAL had the model operational, allowing EPAM’s leadership and clients to experiment with it immediately, ensuring that innovations in R&D were treated as a priority above other considerations.

Shadow spending and environmental costs

As the platform has scaled, it has also revealed a less visible consequence of AI adoption: shadow spending and environmental costs. Gorokh is candid about this, explaining that during the first six months of deployment, most employee queries were not connected to work at all but were about recipes, school supplies, and everyday matters. When this type of behavior is multiplied across thousands of employees, the costs quickly escalate, both in terms of financial spending and environmental impact. Governance and transparency, therefore, become essential, not optional, in ensuring that enterprise AI deployments are sustainable and aligned with business priorities.

The driver of trust

Many organizations are turning to open-source AI agent platforms precisely to address these issues. These platforms are licensed under a permissive Apache license, which gives enterprises full visibility and control over the final product. Unlike proprietary black-box solutions, they provide the transparency that enterprises need to maintain control and accountability. Gorokh argues that this transparency also builds confidence, since leaders who understand how AI works under the hood, meaning how models are selected, where data is processed, and what the cost implications are, are more willing to expand deployments into critical business operations.

Several enterprises are already using DIAL and related technologies to modernize knowledge management. A global insurance company, Gorokh notes, is leveraging AI-powered mind mapping to structure 50 years of unstructured documents. By combining knowledge graphs with generative AI, they are rethinking how corporate memory can be accessed and applied. Gorokh highlights that these companies are not just consumers but contributors, creating new developments, building on existing concepts, and giving back to the open-source community, which allows them to move faster and remain connected to a wider ecosystem.

The road ahead

Looking forward, the technical direction of DIAL and similar platforms is likely to shift toward distributed architectures rather than centralized systems. Instead of routing every process through a single model, enterprises will be able to split workloads across multiple agents that communicate with one another through peer-to-peer protocols. Gorokh describes this as the logical next step, where organizations are empowered to build lightweight agents connected to more sophisticated servers and make choices about where different components of processing take place. That flexibility is crucial when balancing regulatory requirements, performance goals, and cost optimization.

DIAL’s journey from governance safeguard to orchestration engine mirrors the evolution of enterprise AI itself. Where the initial priority was to control employee access to ChatGPT, the pressing need now is to manage dozens of models, ensure compliance with sovereignty regulations, minimize costs, and produce tangible business outcomes. By embracing open source, distributed architectures, and transparent orchestration, EPAM has positioned DIAL 3.0 as a platform for enterprise-scale AI. For Gorokh, it’s clear that the future of enterprise AI is about orchestrating multiple models responsibly, transparently, and in ways that deliver real business value.
