At the annual Computex in Taipei, Intel made a big splash with the debut of Lunar Lake. Unlike its predecessor, this generation of laptop chips meets Microsoft’s newly minted requirements for the AI PC. How much is that worth in 2024? Is Lunar Lake a step toward the AI PC being indispensable?

Intel knows full well what every tech journalist is thinking about during its Tech Tour in Taiwan: Copilot+ and Microsoft’s “full-fledged” AI PC equipped with a Qualcomm Snapdragon X Elite. The recent announcements around these products were Intel’s second kick in the backside in a few months. Microsoft’s minimums for the performance an AI PC must deliver had already made clear that the bar was a tad higher than what ‘Chipzilla’ had in mind when it launched Meteor Lake.

We emphatically described Meteor Lake, the architecture behind the first Intel Core Ultra chips from late last year, as the promise of the personalized AI PC. Ultimately, that promise suggests a computer that wants to know everything about you and can anticipate what you want to do before you have made that desire explicit. Intel notes that there are already 8 million Meteor Lake laptops on the market under the Core Ultra name, although their actual AI competencies are questionable. They did not reach the minimum target of 40 TOPS (trillions of operations per second) set by Microsoft, not even when artificially bundling the powers of the CPU, GPU and NPU.

Promises are there to be kept

At any rate, the AI PC promise hasn’t been converted into much AI PC bliss. Intel appears to be acutely aware of this. As put forward by Rob Bruckner, CVP and CTO, Platform Engineering Group, Intel thinks we are only in the middle of the first AI wave. That first wave was defined by a processor family that was more energy efficient than before thanks to added AI smarts. More front-facing AI skills were limited to workloads like noise reduction for audio and webcam image upscaling, both applied in Zoom, for example.

None of this yet offered the AI revolution that was often suggested. Those suggestions, by the way, came mainly from OEMs like HP and Dell, who wanted to avoid a repeat of the disastrous 2022 and 2023 sales figures, not from the chipmakers themselves. Intel, by contrast, was and is quite level-headed about the fact that the AI PC is in its early formative years. Eventually, we will reach a point where we will simply be talking about the PC again, without the AI prefix.

At the moment, however, highlighting AI is important, precisely because it is still very much in development. Thus, Bruckner promises that we can expect much more from the second wave of AI. In conjunction with Copilot+, this phase of development offers full-fledged AI ‘agents’ and new opportunities for real creative collaboration with AI tools for the first time. That can sometimes have somewhat creepy privacy implications as with the Recall feature in Copilot+, but real-world sales figures should show whether consumers are actually all that bothered by that. For now, Northwestern Europeans in particular seem to be asking irritating questions about what this all means for end users’ data privacy.

The Lunar Lake proposition

With Lunar Lake, Intel boasts a total of 120 TOPS: the sum of the GPU (67), the NPU (48) and the CPU (5). The NPU’s 48 TOPS is a touch above the 45 found in the Snapdragon X Elite introduced with Copilot+. After Intel was heavily criticized for Meteor Lake’s half-baked specs for AI workloads, these numbers represent a much-needed step up for the company to remain competitive.
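
The arithmetic behind these figures is easy to check. Below is a minimal sketch in Python that uses only the numbers quoted above. The names are illustrative, and it assumes that Microsoft’s 40 TOPS minimum is measured against the NPU alone, as the comparison with the Snapdragon’s NPU implies.

```python
# TOPS figures for Lunar Lake as quoted in the article.
LUNAR_LAKE_TOPS = {"GPU": 67, "NPU": 48, "CPU": 5}

# Microsoft's minimum for a Copilot+ PC, in TOPS.
COPILOT_PLUS_MIN_TOPS = 40


def platform_tops(tops_by_engine):
    """Sum the peak TOPS of all compute engines on the chip."""
    return sum(tops_by_engine.values())


def meets_copilot_plus(tops_by_engine, minimum=COPILOT_PLUS_MIN_TOPS):
    """Check the NPU figure against the minimum.

    Assumption: only the NPU counts toward the requirement.
    """
    return tops_by_engine["NPU"] >= minimum


if __name__ == "__main__":
    print(platform_tops(LUNAR_LAKE_TOPS))       # 120
    print(meets_copilot_plus(LUNAR_LAKE_TOPS))  # True
```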

In terms of P-cores, the overall improvement over Meteor Lake is 14 percent. The Skymont E-cores, however, outperform their predecessors by 38 percent on occasion, and in one benchmark by as much as 68 percent. Still, Intel again emphasizes efficiency: the same performance on the CPU now costs half the power it did with Meteor Lake.

The NPU has made an absurdly large generational gain, going from just above 10 TOPS straight to 48. Impressive, but not a feat that will be repeated anytime soon, according to Senior Director of Technical Marketing Robert Hallock. With this fourth generation (NPU 3 was in Meteor Lake), the NPU, brought to client chips from the embedded IoT world, has apparently become fast enough to handle most AI background tasks efficiently. Further TOPS increases should instead be expected from the GPU, which is more about top performance than persistent background AI workloads. To give some examples: the NPU may continuously deliver AI corrections to text in Word, while the GPU gets fired up when you want to convert a huge Excel file into a complex graph. In this way, Microsoft’s 40 TOPS no longer seems like an arbitrary choice: it currently seems to be the sweet spot for sufficient performance with high efficiency.

x86 is not dead yet

During Intel’s presentation, the term “x86” pops up remarkably often. Lunar Lake is “finally putting an end to the myth that x86 is less efficient than Arm,” according to Michelle Johnston Holthaus, EVP and GM, Client Computing Group. We later put this to Intel Fellow Tom Petersen, describing it as a claim that x86 isn’t about to die. He is somewhat startled by the phrasing, but emphasizes that we don’t yet know whether AI is going to call for a specific ISA (instruction set architecture). “AI currently happens a layer above the ISA and is in your entire stack.” Is change needed? We don’t know yet, as AI hasn’t standardized enough.

However, the overt dependence on cloud computing must come to an end, and not just because it’s convenient for users. On-device AI for virtually all inferencing is inevitable, Petersen says. The energy costs of running the cloud will be beyond the reach of even AWS, Microsoft and Google long-term. For training foundation models, they’ll stick to the cloud, which simply requires the most powerful AI hardware available. “The real question is where inferencing is going to take place,” Petersen said.

“My assertion is that there is an inexorable economic push to [get AI to] the edge and client, because no service provider wants to keep paying a lot of money for inferencing in the cloud when it can also be done on the client, which is free to them.” The client will (have to) provide enough performance to do AI inferencing, according to Petersen. That requires not only speed, but also efficiency. Nobody wants to run AI locally if it ruins their battery life.

Powerful cores

Lunar Lake offers a new design for both its performance core (p-core) and its efficiency core (e-core), going by the names of Lion Cove and Skymont, respectively. The central part of the architecture, the compute tile, contains 4 p-cores and 4 e-cores. Note that each core now has one thread: hyperthreading is gone. Due to higher core counts, this should not be a performance problem. When it comes to single-core performance, according to Johnston Holthaus, none of the Arm offerings will come close.

This hybrid core architecture has existed on desktop since Alder Lake, which arrived in late 2021. Its functionality rests on a close relationship with Microsoft, which tinkered a lot with the Windows scheduler to make optimal use of the different cores. By now, workloads preferably run on the e-cores first and move to the p-cores only once the requirements become too hefty.

The end user should not notice any latency when work switches between these two quartets of cores. In fact, when the GPU and NPU are hard at work, the e-cores sometimes perform better under pressure than the p-cores. The chip also “knows” this through significantly improved onboard telemetry. This is why Intel advises developers against building so-called hard affinities into their software, a mechanism to force the hardware to address the p-cores. As mentioned, that can actually be counterproductive. With “OS containment,” Windows and Intel also ensure that certain workloads do not switch to p-cores unnecessarily. This results in a 35 percent reduction in power consumption within Microsoft Teams, for example. We haven’t heard of other examples, but the message is clear: this approach should help with all light workloads.
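
For readers unfamiliar with the term, a hard affinity restricts a process or thread to a fixed set of logical CPUs, so the scheduler can no longer move it elsewhere. The sketch below illustrates the idea in Python with `os.sched_setaffinity`, which is available on Linux only; on Windows the comparable Win32 calls are `SetProcessAffinityMask` and `SetThreadAffinityMask`. The helper names are hypothetical, and which logical CPU numbers correspond to p-cores on a given chip is not something this example knows or assumes.

```python
import os


def scheduler_cpus():
    """Return the logical CPUs the OS may currently schedule this process on.

    Returns None on platforms without os.sched_getaffinity (non-Linux).
    """
    if not hasattr(os, "sched_getaffinity"):
        return None
    return os.sched_getaffinity(0)  # pid 0 means "this process"


def pin_to_cpus(cpu_ids):
    """Hard affinity: restrict the current process to the given logical CPUs.

    Returns the resulting CPU set, or None where the call is unavailable.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    os.sched_setaffinity(0, cpu_ids)
    return os.sched_getaffinity(0)


if __name__ == "__main__":
    allowed = scheduler_cpus()
    print("CPUs the scheduler may use:", allowed)
    if allowed:
        # Pin to a single CPU to show what a hard affinity does...
        print("After pinning:", pin_to_cpus({min(allowed)}))
        # ...then restore the original mask, handing control back to the OS.
        pin_to_cpus(allowed)
```

Intel’s advice amounts to never calling the pinning function at all and leaving the choice of core to the scheduler.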

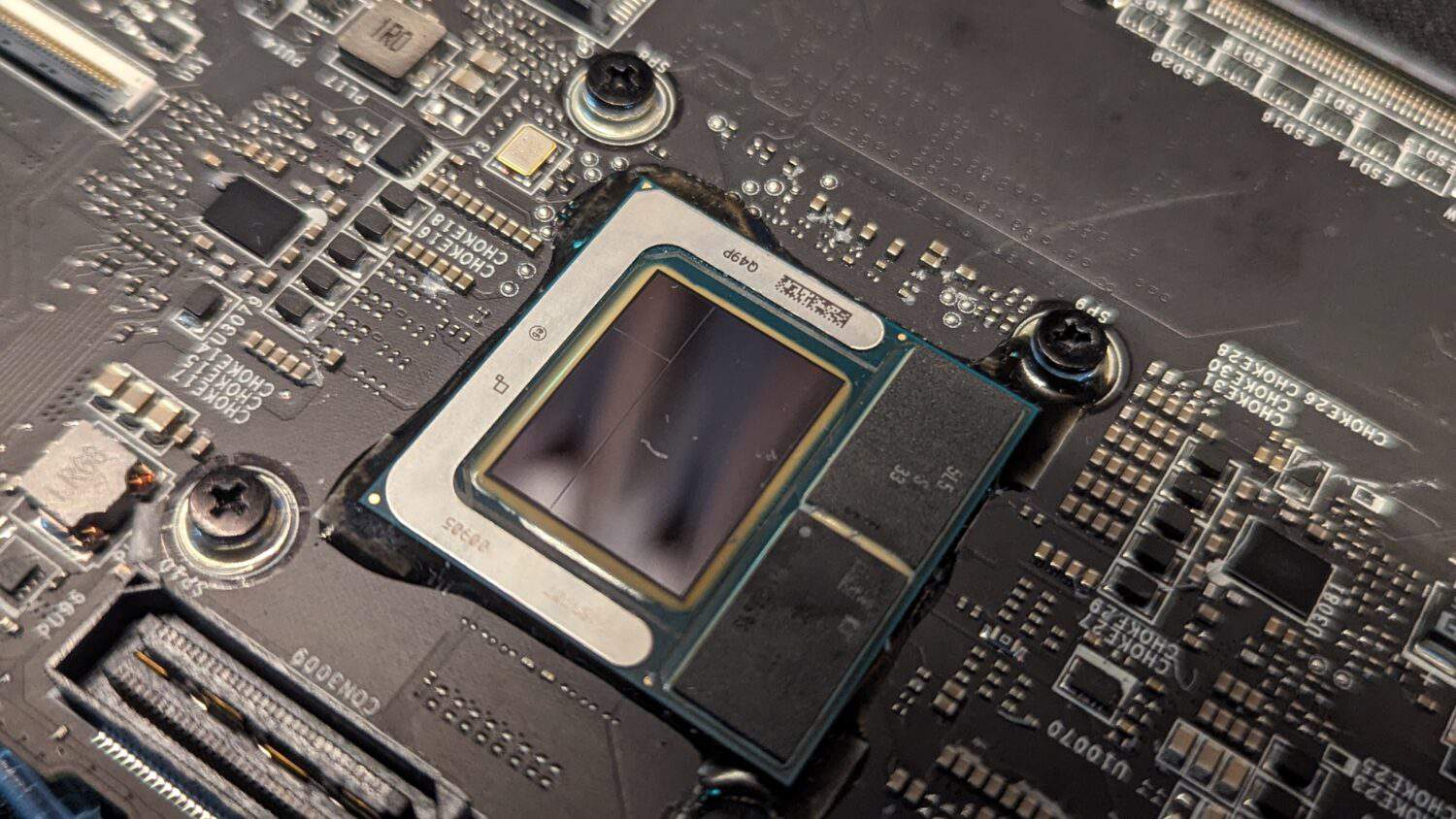

Intel’s approach to Lunar Lake is significantly different compared to before, even though it is a further development of Meteor Lake. For example, for the first time, there’s system memory on the package itself, which puts LPDDR5X in direct connection with the processor. It’s a similar choice to what Apple went for with its M1 chip. For AI, where memory bandwidth is crucial, this is a logical choice. It avoids unnecessary bottlenecks for AI inferencing that would have negated improvements elsewhere.
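
Why bandwidth matters so much can be shown with a common back-of-the-envelope estimate: when a language model generates text, every new token requires reading roughly all of the model’s weights from memory once, so bandwidth divided by model size gives a rough ceiling on tokens per second. The sketch below is in Python. All numbers in it are illustrative assumptions and not figures from Intel’s presentation: LPDDR5X at 8533 MT/s on a 128-bit bus, and a 7-billion-parameter model stored at 4 bits per weight. The estimate ignores caches, compute limits and other memory traffic.

```python
def peak_bandwidth_gbs(transfers_mt_per_s, bus_width_bits):
    """Theoretical peak memory bandwidth in GB/s.

    MT/s times bytes per transfer gives MB/s; divide by 1000 for GB/s.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfers_mt_per_s * bytes_per_transfer / 1000


def model_size_gb(parameters_billion, bits_per_weight):
    """Approximate size of the model weights in GB."""
    return parameters_billion * bits_per_weight / 8


def max_tokens_per_second(bandwidth_gbs, weights_gb):
    """Rough upper bound: one full pass over the weights per generated token."""
    return bandwidth_gbs / weights_gb


if __name__ == "__main__":
    # Assumed, illustrative values (not from the article).
    bandwidth = peak_bandwidth_gbs(8533, 128)
    weights = model_size_gb(7, 4)
    print(f"Peak bandwidth: {bandwidth:.1f} GB/s")
    print(f"Model weights:  {weights:.1f} GB")
    print(f"Upper bound:    {max_tokens_per_second(bandwidth, weights):.0f} tokens/s")
```

Under these assumptions, doubling the bandwidth doubles the ceiling, while a faster NPU or GPU on its own does not raise it.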

Other, more conventional feature improvements are also of note. Wi-Fi 7 has found its way to Lunar Lake, and there is also support for Bluetooth 5.4 and Thunderbolt 4.

Eager aggression

That’s all well and good, but where will Lunar Lake land? Will this be the same story as Lakefield, Intel’s first hybrid chip architecture with the advanced Foveros packaging? Processors of that nature appeared in only 2 actual products. By Q3 2024, we should expect around 80 products to feature Lunar Lake. Think thin-and-lights that come with a hefty price tag. They’ll be niche, high-end options.

Yet there is more to the story. Meteor Lake was a similar case: it did not replace all previous Intel laptop chips either (for example, it had a lower IPC than its predecessor Raptor Lake). Its Redwood Cove p-cores and Crestmont e-cores debuted there and have since found their way into Intel Xeon 6. The latest server chips are thus launching on a proven architecture.

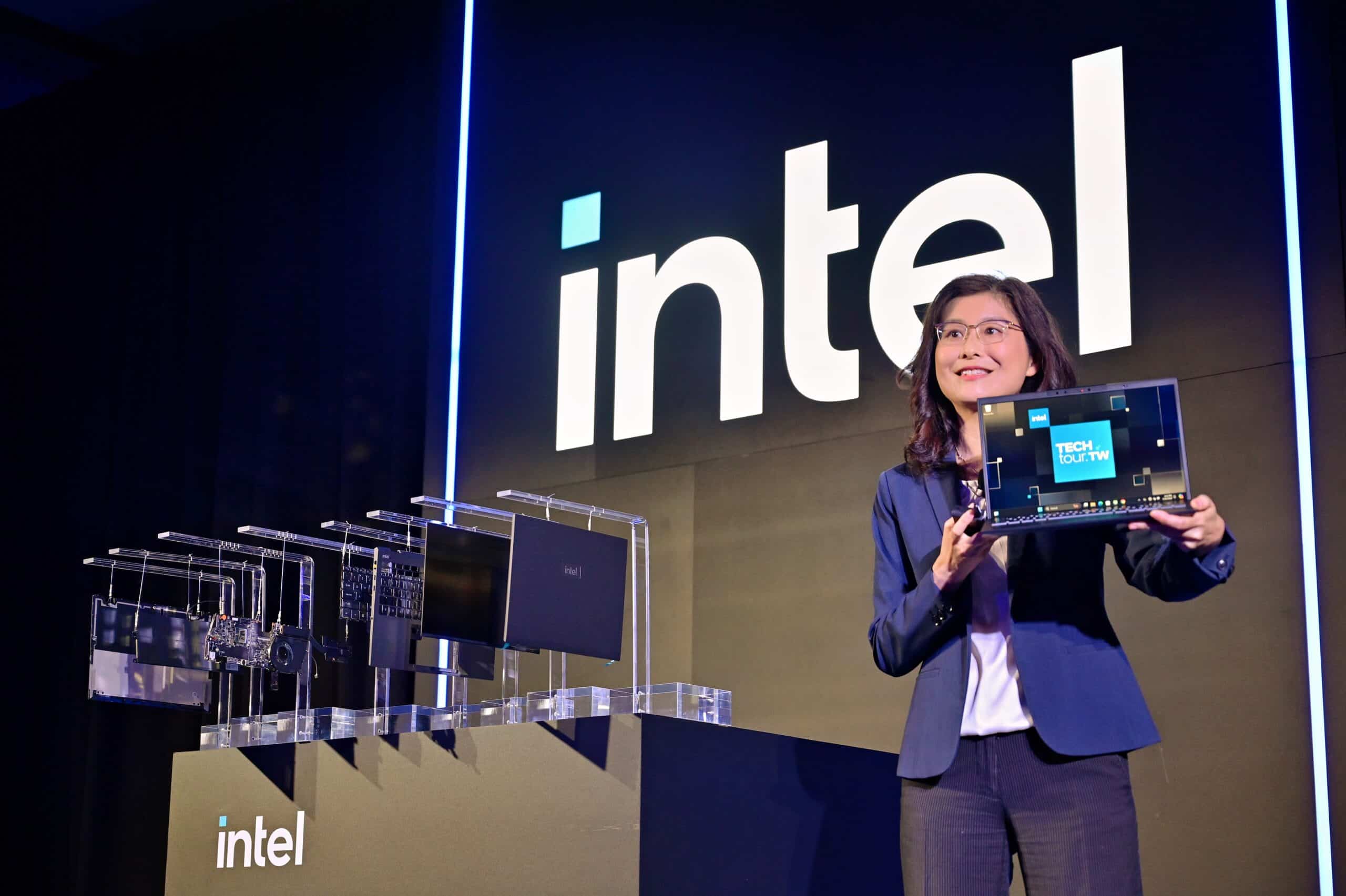

The Tech Tour keynote reveals a striking attitude from Intel, which one may describe as eager aggression. In the eternal duel between Intel and AMD, neither side is averse to a game of mud-slinging, but this time Intel is articulating its competitive position against its multiple challengers with precision. Johnston Holthaus first wants to see if Qualcomm, AMD (and perhaps next year Nvidia) can actually make waves in the market in the first place. “Microsoft needs Intel to be able to introduce AI at scale,” she observes. Lunar Lake more than meets the Copilot+ requirements and thus will appear with Recall, among others, in the third quarter of this year. That Microsoft now has a renewed focus on Arm does not change that.

We would like to see more of this attitude at Intel. As the overwhelming leader in terms of market share on laptop and desktop, the company is capable of bringing about real change in the tech industry. It did that before with ultrabooks, which turned powerful laptops from unwieldy bricks into manageable, sleek products. Now, it cites its own scale as an essential driver of AI development. If there really is an AI PC revolution, it cannot happen without Intel. That, when faced with rivals Qualcomm, AMD and (possibly in 2025) Nvidia, is what the company is stating in plain language this time around.
