New hardware announcements, collaboration with Google around Vertex AI and updates to ONTAP AI at NetApp Insight 2023.

If there’s one thing NetApp wants to achieve, it’s unified data storage. That goal has several facets, one of which is ensuring that all storage within an organization, whether on-prem or at one of the major hyperscalers, is managed centrally through ONTAP. In that model, it doesn’t matter which protocol you use or what type of storage it is (block, file or object).

NetApp ASA C Series

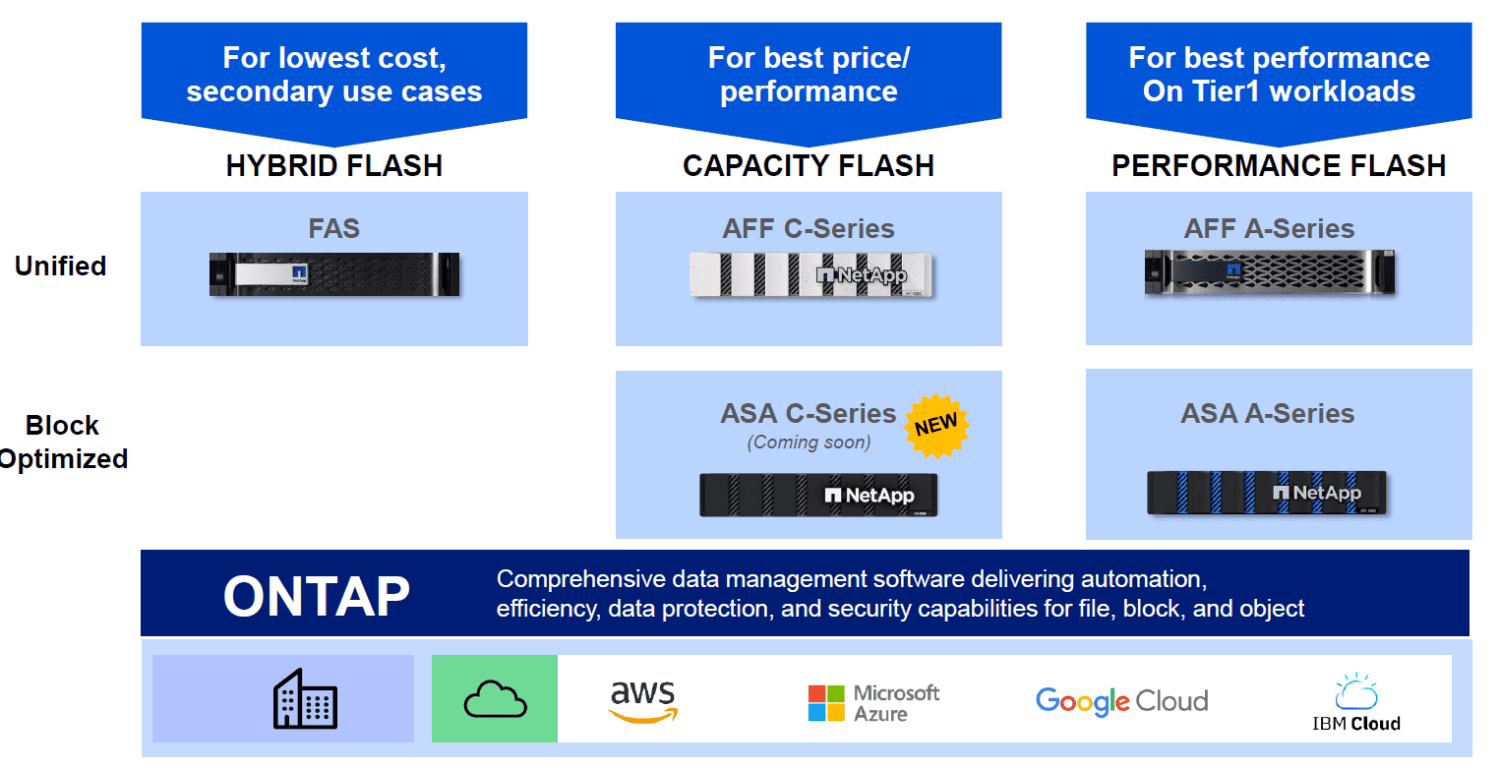

These are ambitious goals, and NetApp works hard to achieve them. Earlier this year, NetApp announced a new all-flash SAN array, the ASA A Series. SAN is not necessarily what NetApp is known for, but the market demands this type of storage, which makes it important to NetApp. The company positions the ASA line alongside its FAS and AFF lines, the so-called unified product lines in which block and file come together. Because ASA is explicitly optimized for block workloads, it seems to go against NetApp’s unified ambitions. However, the ASA line uses the same APIs, the same OS (ONTAP) and the same management environment. In that sense, ASA is part of NetApp’s unified approach.

With the ASA A Series, NetApp is targeting Tier 1 workloads that require a latency of less than 1 millisecond. Of course, not every workload needs that. Hence, today NetApp is announcing the ASA C Series. This is also an all-flash SAN array for block workloads, but it is aimed at workloads that do just fine with a latency of 2 to 4 milliseconds. That makes the C Series a lot more affordable than the A Series, allowing more organizations to make the move to all-flash. This is certainly not just about DR as a workload, by the way; VMware environments can also run excellently on it, as can databases for important workloads that do not require extremely low latency.

Below you can see NetApp’s portfolio following the introduction of the new ASA line. It’s worth mentioning that, for now, NetApp is not going exclusively all-flash: the FAS line still uses HDDs.

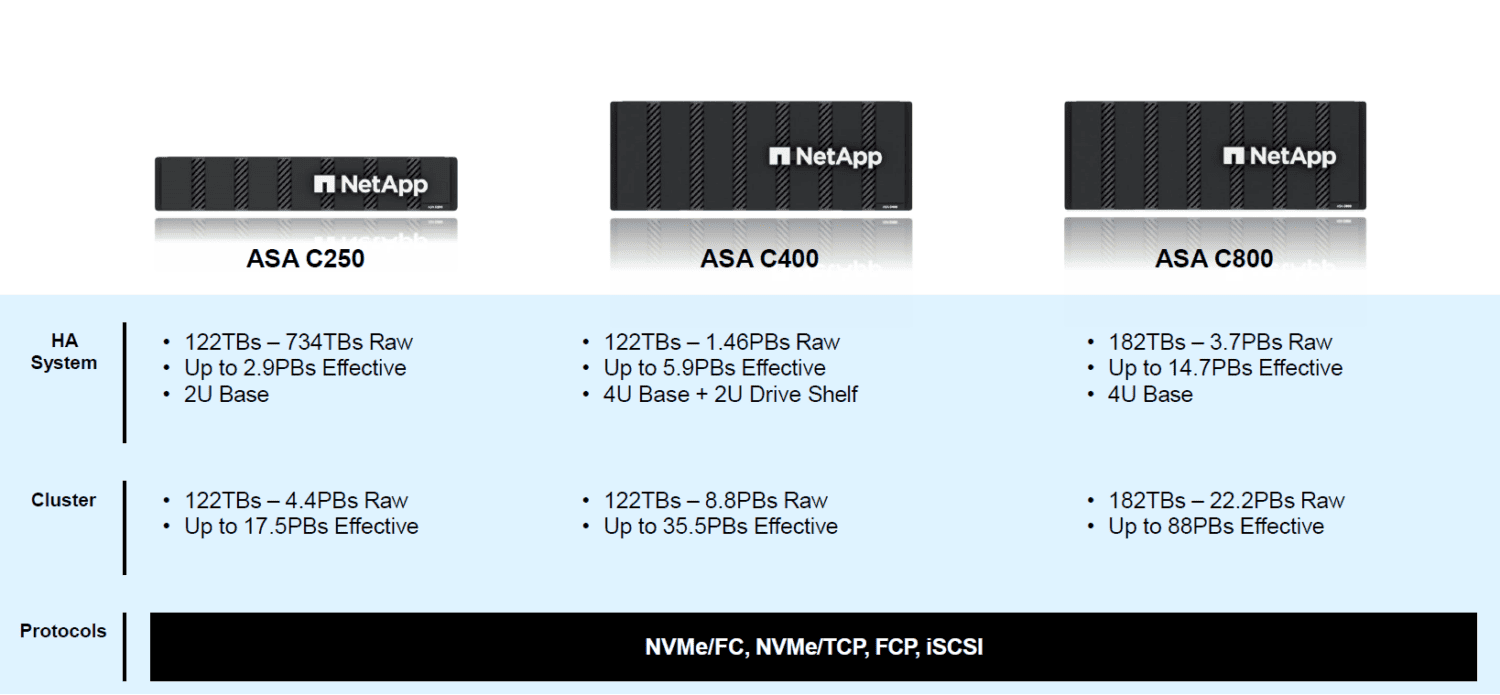

The NetApp ASA C Series will be available in three models: the C250, C400 and C800. The only difference between the models is their capacity. The C250 and C400 start at 122 TB and the C800 at 182 TB, and each has a different maximum capacity.

Finally, it’s worth noting that the ASA C Series gets exactly the same guarantees from NetApp as the A Series. The fact that it offers organizations a lower-cost entry into all-flash, and is focused more on capacity than on performance, does not mean that concessions are made in other areas. NetApp guarantees six nines (99.9999%) availability for the ASA C Series, and this series is also covered by NetApp’s Ransomware Recovery Guarantee. The latter means NetApp compensates customers (through credits) if it proves impossible to recover snapshot data after a ransomware attack.
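To put six nines in perspective, the downtime it allows can be worked out directly. This is a rough calculation that assumes availability is measured over a 365-day year. The measurement window and any exclusions in NetApp’s actual guarantee terms may differ.

```latex
% Assumption: a 365-day measurement window; NetApp's contractual terms may define it differently
\begin{aligned}
t_{\text{down}} &= (1 - 0.999999) \times 365 \times 24 \times 3600\ \text{s} \\
                &= 10^{-6} \times 31\,536\,000\ \text{s} \\
                &\approx 31.5\ \text{s per year}
\end{aligned}
```

In other words, six nines leaves room for roughly half a minute of unplanned downtime per year.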

Speaking of the Ransomware Recovery Guarantee: NetApp Keystone, the company’s Storage-as-a-Service offering, gets it as well, NetApp announced today. For Keystone, this includes monitoring and detection of suspicious activity in addition to the guarantee itself.

More integrations with hyperscalers

NetApp recognized quite some time ago that while on-prem arrays are, and will remain, important, so is the public cloud when it comes to storage. Hence, the company is investing heavily in developing storage solutions for those environments. These are native integrations, not services or products sold through the hyperscalers’ marketplaces. NetApp co-designs them with Microsoft, AWS and Google Cloud. Azure NetApp Files is the oldest example, followed by Amazon FSx for NetApp ONTAP. A few months ago, Google Cloud NetApp Volumes joined these two native cloud storage offerings. All three run on ONTAP and are managed through BlueXP, which we wrote about extensively last year.

NetApp continues to steadily develop all three solutions together with the three hyperscalers. The main news at NetApp Insight 2023 is that a new service level is coming to Google Cloud NetApp Volumes. Here again we see the common thread of looking a bit more toward the lower end of the market. In addition to Premium and Extreme, which were available at launch, there is now a Standard service level. This is particularly interesting because it hits the $0.20 per gigabyte mark. That’s a pretty magical number in the storage world, as we also learned from Pure Storage earlier this year. At 20 cents per gigabyte, flash costs as much as hard disk. Google Cloud NetApp Volumes is not an on-prem all-flash array but storage in GCP, yet that doesn’t diminish the relevance of the figure.

In addition to the new service level, Google Cloud NetApp Volumes also gets a new backup capability. It makes it very easy to set up a backup to a Backup Vault in the same region where the data resides.

Bringing AI closer to organizations

It’s 2023, so we can’t write about a conference without talking about AI. NetApp certainly has something to say on this front, starting with CEO George Kurian’s bold claim that NetApp has “the best data pipeline for AI in the industry.” To reinforce this, NetApp announced at Insight 2023 that ONTAP AI is now formally verified for the AFF C Series, the more affordable variant of the AFF line.

Again, we see this as an example of NetApp making the latest technologies more widely available, so that more organizations can start using AI and benefit from it. Specifically, organizations can now purchase an off-the-shelf unit powered by Nvidia DGX and start working on relatively modest AI projects within days. These systems are not suited to very heavy AI workloads. Management can be done through BlueXP, but also through the Nvidia AI Platform Software.

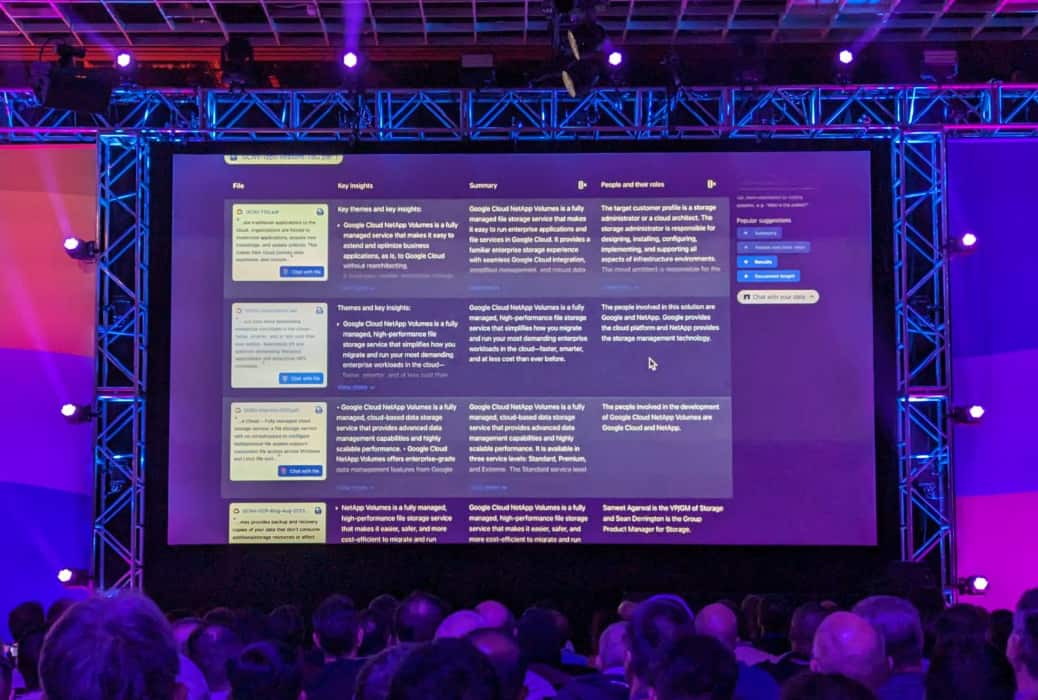

Google Cloud NetApp Volumes and Vertex AI

The last interesting AI development at NetApp is the integration between Google Cloud NetApp Volumes (mentioned above) and Google Vertex AI. It makes the (unstructured) data stored in Google Cloud NetApp Volumes available within the Vertex AI environment. As a result, you don’t have to move the data and therefore don’t have to manage two copies of it. Because of this native integration, the enterprise data also remains under the enterprise’s control at all times, so it does not end up in a public LLM.

Judging by what we saw at NetApp Insight, the integration works pretty well. A live demo showed some of its capabilities, and what stood out in particular was how fast and responsive the environment was. That may partly be because it is not yet widely used, but it certainly also has to do with the relatively limited amount of data involved. After all, we are only talking about the data within the Google Cloud NetApp Volume, which Vertex AI goes through very quickly.

Common thread: enabling more for more organizations

All in all, the integration of Google Cloud NetApp Volumes and Vertex AI should make it possible for more organizations to get started with (generative) AI. After all, organizations have to do virtually nothing themselves: nothing needs to be programmed or set up, because the link between the instance in Google Cloud and Vertex AI is already in place.

This brings us back, one last time, to the theme of this article: NetApp is broadening its offering to make the technologies it has been working on for many years available to a wider audience. That’s a smart move from a business standpoint, though of course it all has to remain manageable for NetApp itself. By building everything on ONTAP as the OS and BlueXP as the management layer, it should stay manageable going forward.

Conceptually, at least, NetApp’s story is pretty good. Now organizations need to make the move that NetApp has essentially already made: creating a unified storage environment that spans on-prem and the public cloud. They need to see the added value and also be able to set things up in a unified way in other areas. That’s not so easy, as we explained in a recent article. It requires all players in the market to pull together. NetApp, for one, is clearly moving in the right direction.