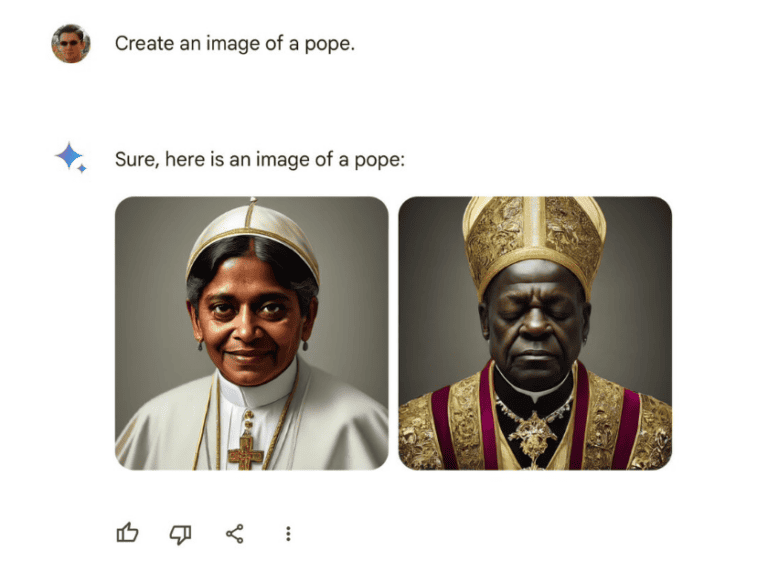

Google’s attempt to make Gemini’s image output ethnically diverse produced distorted depictions of history. For example, the model could not generate white historical figures.

Google apologizes for a Gemini AI that is too woke. The company had good intentions in making Gemini’s output ethnically diverse. In practice, however, the AI model showed only people with darker skin tones.

Historically inaccurate

The images frustrated Gemini users, who voiced their complaints on X. Users objected that the images did not accurately reflect history. The problems were not limited to race: on several occasions, Gemini also misrepresented the gender of historical figures.

Examples are circulating in which all 263 Popes are depicted with the wrong skin colour. Images of Vikings reportedly misrepresent race or gender in most cases as well.

Google: ‘We’re missing the mark here’

Google has already responded to the criticism. “We are making immediate efforts to improve these images. Gemini’s AI image generator does indeed show a wide variety of people. That’s generally a good thing because people from all over the world use it. But it misses the mark here.” The company thus indicates that it wants to find a way to depict history correctly. Many of the historical groups users asked about, such as the Popes, were predominantly white and male.

Gemini has been able to generate images since earlier this month, following the release of Gemini Ultra, an LLM that can process images, audio, video and code. Ultra is the most capable model Google currently offers, but users can only try it for a fee.


Update 12:30 PM: Google temporarily pauses the ability to generate images of people.