Salesforce, in collaboration with researchers from the University of Virginia, has developed a new method for reducing gender bias in word embeddings. Word embeddings are used to train AI models to summarize, translate, and perform other prediction tasks.

Word embeddings capture the semantic and syntactic meanings of words and their relationships with other words, which is why they’re commonly employed in Natural Language Processing (NLP). But they are also criticised for attaching gender stereotypes to words that are gender-neutral by definition. For example, ‘brilliant’ and ‘genius’ are gender-neutral, yet embeddings link them to ‘he’, while ‘sewing’ and ‘dance’ are linked to ‘she’.
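To make the idea of a word being "linked" to ‘he’ or ‘she’ concrete, here is a minimal sketch that compares cosine similarities. The three-dimensional vectors and the `gender_lean` helper are invented for illustration only; real embeddings such as GloVe have hundreds of dimensions, and this is not the measurement used by the researchers.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Toy vectors, made up for this example (not taken from any real model).
toy = {
    "he": [1.0, 0.2, 0.0],
    "she": [-1.0, 0.2, 0.0],
    "genius": [0.4, 0.9, 0.1],
    "sewing": [-0.5, 0.8, 0.2],
}

def gender_lean(word, vectors):
    """Positive if the word sits closer to 'he', negative if closer to 'she'."""
    return cosine(vectors[word], vectors["he"]) - cosine(vectors[word], vectors["she"])

for word in ("genius", "sewing"):
    print(word, round(gender_lean(word, toy), 3))
```

In this toy setup ‘genius’ leans towards ‘he’ and ‘sewing’ towards ‘she’, which is the kind of association a debiasing method tries to remove.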

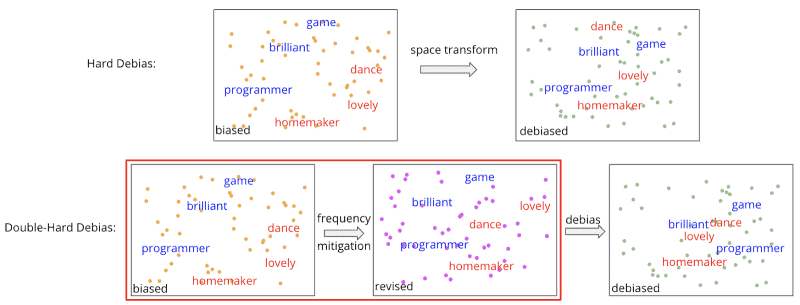

Double-Hard Debias

Double-Hard Debias works in stages. It first transforms the embedding space into an ostensibly genderless subspace, then projects away the gender component along this dimension to produce revised embeddings. A further debiasing operation yields the final result. To assess the method, the researchers evaluated it on the WinoBias dataset, which consists of pro-gender-stereotype and anti-gender-stereotype sentences.
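The projection step can be illustrated with a small sketch. It shows only the generic operation of removing a vector's component along one direction, not the full Double-Hard Debias pipeline. Using the difference between ‘he’ and ‘she’ as the gender direction is a simplifying assumption, and the toy vectors and the `remove_direction` helper are hypothetical.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def remove_direction(vec, direction):
    """Subtract from vec its projection onto direction."""
    scale = dot(vec, direction) / dot(direction, direction)
    return [x - scale * d for x, d in zip(vec, direction)]

# Toy vectors, made up for this example.
he = [1.0, 0.2, 0.0]
she = [-1.0, 0.2, 0.0]
genius = [0.4, 0.9, 0.1]

# Assumed gender direction: the difference between the two pronoun vectors.
gender_direction = [a - b for a, b in zip(he, she)]

debiased = remove_direction(genius, gender_direction)

# After the projection, the vector has no component along the gender direction.
print(debiased, dot(debiased, gender_direction))
```

Only the component along the chosen direction changes; the other coordinates of the toy vector are left as they were, which mirrors the goal of reducing bias while keeping the rest of the word's meaning.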

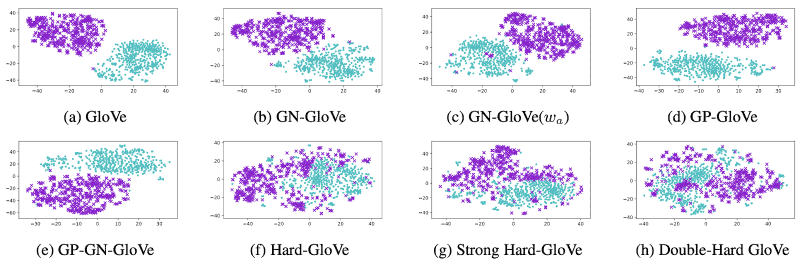

The gap in a system's performance between the two groups of sentences gives a gender bias score. With GloVe embeddings, the bias score dropped from 15 to 7.7 after debiasing, while the semantic information remained intact. In t-SNE projections of the embeddings, the results are also more evenly mixed than in other scenarios.
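As a rough sketch of how a performance gap becomes a bias score, the snippet below takes the absolute difference between a system's scores on the two groups of sentences. Treating the score as a plain absolute difference is an assumption made here, and the input numbers are invented, not the researchers' results.

```python
def bias_score(pro_stereotype_score, anti_stereotype_score):
    """Gap between performance on pro- and anti-stereotype sentences."""
    return abs(pro_stereotype_score - anti_stereotype_score)

# Hypothetical scores (in percentage points), for illustration only.
before = bias_score(70.0, 62.0)
after = bias_score(67.0, 64.5)

print(before, after)
```

A smaller gap means the system treats the two groups of sentences more alike, so a lower score indicates less measured bias.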

Bias will always exist

Many experts believe that word embeddings will never be completely free of bias. In a recent meta-analysis from the Technical University of Munich, the authors argue that naturally occurring neutral text simply does not exist, because the semantic content of words is always intrinsically linked to the social and political context of a society.