A team at IBM Research has developed a mixed-signal analog chip for AI workloads. The project is still in the research phase, but it looks promising. Generative AI currently demands enormous amounts of hardware and power, and an alternative now seems to be emerging, although nobody knows when it will be ready for prime time.

The concept of “analog in-memory computing” works partly like a biological brain. Communication between our neurons is regulated by the strength of each synapse, which adjusts dynamically and is practically impossible to capture in digital signals. The IBM chip works on the same fundamental principle: at the hardware level, phase-change memory (PCM) devices act as the counterpart to these biological building blocks, storing values as analog conductance levels. Because the calculations take place inside the memory itself, this technology can perform a key AI operation, the multiply-accumulate (MAC) operation, far more efficiently.
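To make the principle more concrete, here is a minimal Python sketch of an idealized analog crossbar performing MAC operations. It assumes weights are stored as device conductances and inputs are applied as row voltages; by Ohm's law each device passes a current proportional to conductance times voltage, and by Kirchhoff's current law the currents on a shared column wire add up, so each column's output is a dot product. This is a textbook toy model, not IBM's circuit design; the function name `crossbar_mac` and the example values are hypothetical, and real effects such as noise, drift and wire resistance are ignored.

```python
from typing import List


def crossbar_mac(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Idealized analog crossbar: return the output current of each column.

    conductances[i][j] is the conductance of the memory device at row i,
    column j (the stored weight); voltages[i] is the input applied to row i.
    Noise, drift and wire resistance are ignored.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need exactly one input voltage per row")
    num_cols = len(conductances[0])
    currents = [0.0] * num_cols
    for j in range(num_cols):
        for i, v in enumerate(voltages):
            # Ohm's law: the device at (i, j) passes a current G * V.
            # Kirchhoff's current law: currents meeting on column j add up.
            currents[j] += conductances[i][j] * v
    return currents


# Weights in microsiemens, inputs in volts, so outputs are in microamperes.
G = [[1.0, 2.0],
     [3.0, 4.0]]
V = [0.5, 1.0]
print(crossbar_mac(G, V))  # [3.5, 5.0]
```

In a physical array, all columns settle at once, so the whole matrix-vector product happens in a single analog step rather than in the nested loop a digital simulation needs.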

Accurate and efficient

To make this work, the IBM researchers had to reach an accuracy comparable to that of advanced digital chips, with the chip itself built on a 14-nanometer process. According to IBM, the analog chip is particularly promising because it would be much more energy efficient than, say, GPUs for AI workloads. It also bypasses some of the usual bottlenecks faced by conventional chips. For example, All About Circuits points to the limits of the von Neumann architecture, in which computations are slowed by the latency of moving data between the processor and memory (including the on-chip cache).

In this regard, at least, there is much to gain: even Nvidia’s latest AI GPUs consume enormous amounts of power, incurring costs that give even large tech companies sleepless nights. Highly complex large language models such as GPT-4 require hardware infrastructure that is constantly pushed to its limits. This makes such models completely unsuitable for running locally; for on-premises deployment, compact LLMs trained on high-quality datasets are the way to go.

Similarities with ‘classic’ chips

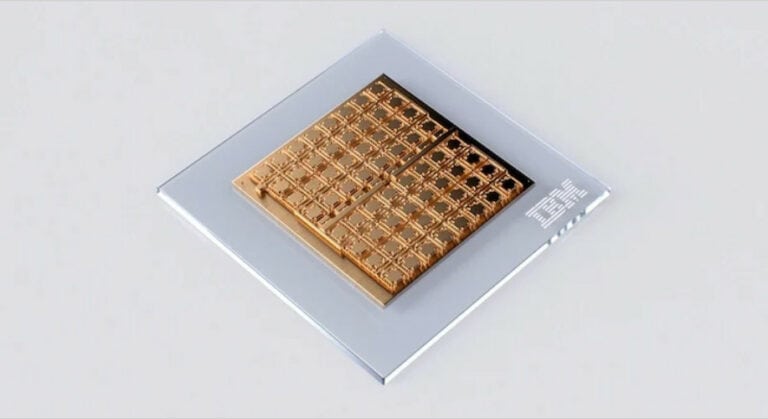

Although analog technology differs from conventional chips on many fronts, there are also similarities. For example, IBM has combined 64 “tiles” on a single chip. This is reminiscent of the scaling approach used in current architectures from Intel, Nvidia and AMD, among others.
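One way to picture why many tiles help: a neural-network layer whose weight matrix is larger than one tile can be split into tile-sized blocks, each handled by its own tile, with the partial sums of tiles that cover the same output columns added together. The Python sketch below illustrates that generic block-partitioning idea under stated assumptions: the tile size of 4 is an arbitrary value chosen for readability (not the IBM chip's actual dimensions), each tile is modeled as an ideal dot product, and the helper names `tile_mac`, `tiled_matvec` and `tiles_needed` are hypothetical. IBM's actual mapping scheme may differ.

```python
import math
from typing import List

TILE_SIZE = 4  # hypothetical rows/columns per tile, far smaller than a real chip's


def tile_mac(block: List[List[float]], x: List[float]) -> List[float]:
    """Ideal multiply-accumulate on one tile: out[j] = sum_i block[i][j] * x[i]."""
    return [sum(block[i][j] * x[i] for i in range(len(x)))
            for j in range(len(block[0]))]


def tiled_matvec(weights: List[List[float]], x: List[float],
                 tile: int = TILE_SIZE) -> List[float]:
    """Split `weights` into tile-sized blocks and combine the partial results."""
    rows, cols = len(weights), len(weights[0])
    out = [0.0] * cols
    for r0 in range(0, rows, tile):
        for c0 in range(0, cols, tile):
            block = [row[c0:c0 + tile] for row in weights[r0:r0 + tile]]
            partial = tile_mac(block, x[r0:r0 + tile])
            # Tiles covering the same output columns contribute partial sums.
            for k, value in enumerate(partial):
                out[c0 + k] += value
    return out


def tiles_needed(rows: int, cols: int, tile: int = TILE_SIZE) -> int:
    """Number of tile-sized blocks required to hold a rows x cols matrix."""
    return math.ceil(rows / tile) * math.ceil(cols / tile)


W = [[r * 5 + c for c in range(5)] for r in range(6)]  # a 6 x 5 weight matrix
x = [1, 2, 3, 4, 5, 6]
direct = [sum(W[i][j] * x[i] for i in range(6)) for j in range(5)]
print(tiled_matvec(W, x) == direct, tiles_needed(6, 5))  # True 4
```

The tiled result matches the direct product, which is the point: splitting the work across tiles changes where the arithmetic happens, not what it computes.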

In addition, a conversion to the digital domain is still needed to process the calculations: each tile contains an integrated analog-to-digital converter. Ultimately, however, what matters are the results, and those are already impressive: on the CIFAR-10 image classification dataset, the chip achieved 92.81 percent accuracy. According to IBM, that is higher than any previously reported result for a chip using comparable technology. Still, it will be years before such a chip can be produced on a large scale. As with quantum technology, it remains to be seen when this will be possible.
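The analog-to-digital step can be pictured as quantizing each analog column output to one of a fixed number of integer codes. The Python sketch below models a generic uniform converter under loudly stated assumptions: the bit width, the full-scale range and the function names `adc_convert` and `codes_to_values` are hypothetical, chosen for illustration, and are not the specifications of the converters on IBM's chip. Inputs outside the range are clipped, as a saturating converter would do.

```python
from typing import List


def adc_convert(values: List[float], full_scale: float, bits: int = 8) -> List[int]:
    """Uniform ADC model: map analog values in [0, full_scale] to integer codes."""
    max_code = (1 << bits) - 1  # e.g. 255 for an 8-bit converter
    codes = []
    for value in values:
        clipped = min(max(value, 0.0), full_scale)  # saturate out-of-range inputs
        codes.append(round(clipped / full_scale * max_code))
    return codes


def codes_to_values(codes: List[int], full_scale: float, bits: int = 8) -> List[float]:
    """Map integer codes back to the analog range they represent."""
    max_code = (1 << bits) - 1
    return [code / max_code * full_scale for code in codes]


# Column outputs (arbitrary units) read out by a hypothetical 4-bit ADC.
print(adc_convert([3.5, 12.0, -1.0], full_scale=10.0, bits=4))  # [5, 15, 0]
```

In this toy model, the bit width sets how finely each result can be represented: a 4-bit converter has only 16 output levels, so every reading is rounded to the nearest of them. That illustrates one reason analog designs have to work hard to match the accuracy of digital chips.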
