Update 20/12/2024 – With the European Commission's approval, Nvidia has cleared an obstacle: the deal had faced an additional investigation in Europe.

The EU focused its investigation on whether the deal could give Nvidia more control over the GPU market and thereby harm competition. It has now concluded that acquiring Run:ai does not raise competition concerns. “Our market investigation confirmed to us that other software options compatible with Nvidia’s hardware will remain available in the market,” said European Commissioner for Competition Teresa Ribera.

Update 01/11/2024 – Nvidia’s planned acquisition of Run:ai is now on shaky ground. In Europe, the chipmaker faces an additional approval procedure over concerns that the deal could reduce competition in the market.

Nvidia may need to make concessions to finalize the deal. Run:ai enables developers to manage and optimize their AI infrastructure. Regulators, meanwhile, are becoming increasingly strict about acquisitions by large tech companies, fearing that startups are being bought up to eliminate potential competitors.

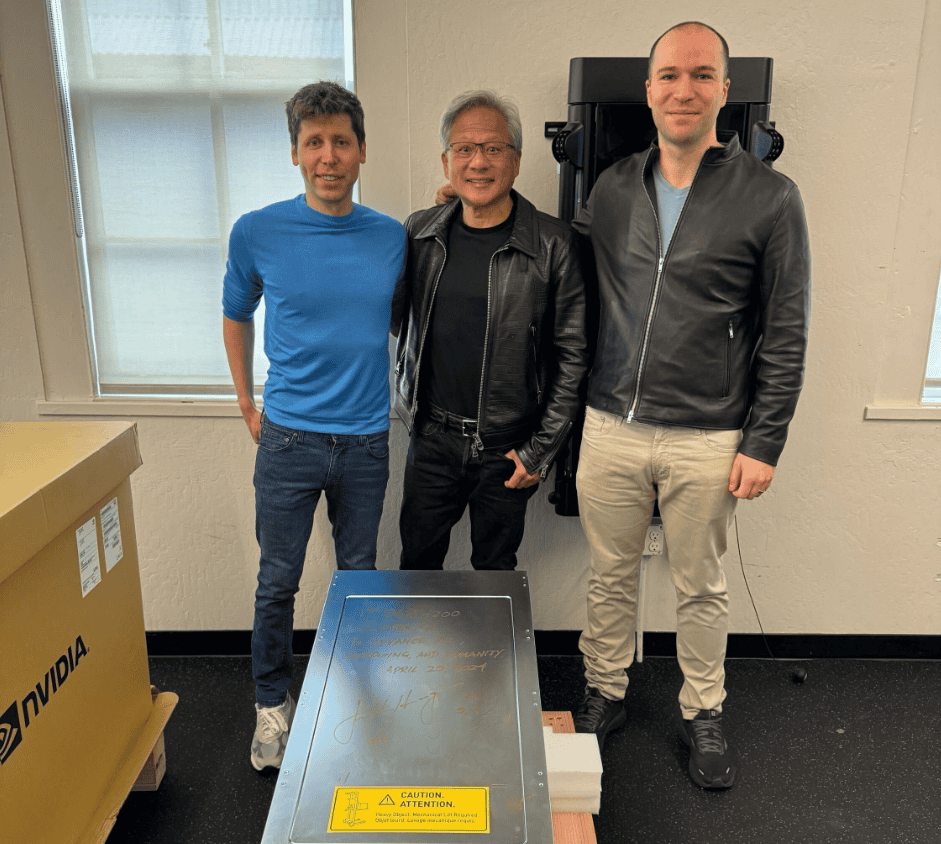

Original – Nvidia is to acquire Israeli startup Run:ai for $700 million. With this acquisition, the GPU maker hopes to simplify hardware management. Meanwhile, Nvidia CEO Jensen Huang personally delivered the first DGX H200 system to OpenAI.

Run:ai has been working with Nvidia since 2020, helping organizations make the most of their infrastructure. For AI tasks and other High Performance Computing (HPC) workloads, it is important to divide the (very pricey) hardware as sensibly as possible. In practice, this can mean splitting capacity between departments, prioritizing specific tasks or monitoring actual usage.
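
To make those three ideas concrete, here is a minimal sketch of quota-based GPU sharing. It is not Run:ai's actual API or algorithm: the `Job` class, the `schedule` function and the two-pass "guaranteed quota, then borrow idle GPUs" policy are hypothetical, chosen only to show how per-department quotas, job priorities and a utilization figure can fit together.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    department: str
    gpus: int      # GPUs the job requests
    priority: int  # higher value = scheduled earlier


def schedule(total_gpus: int, quotas: dict[str, int], jobs: list[Job]):
    """Hypothetical quota-and-borrowing scheduler (not Run:ai's actual API).

    Pass 1 places jobs that fit inside their department's guaranteed quota.
    Pass 2 lends any remaining idle GPUs to over-quota jobs, by priority.
    Assumes the quotas sum to no more than total_gpus.
    """
    used = {dept: 0 for dept in quotas}
    free = total_gpus
    placed: list[str] = []
    over_quota: list[Job] = []

    # Highest priority first; sorted() is stable, so ties keep input order
    for job in sorted(jobs, key=lambda j: -j.priority):
        within_quota = used[job.department] + job.gpus <= quotas[job.department]
        if within_quota and job.gpus <= free:
            used[job.department] += job.gpus
            free -= job.gpus
            placed.append(job.name)
        else:
            over_quota.append(job)

    waiting: list[str] = []
    for job in over_quota:  # already in priority order
        if job.gpus <= free:
            used[job.department] += job.gpus  # borrows another team's idle GPUs
            free -= job.gpus
            placed.append(job.name)
        else:
            waiting.append(job.name)

    # Fraction of the cluster in use: the "monitoring actual usage" view
    utilization = (total_gpus - free) / total_gpus
    return placed, waiting, used, utilization


if __name__ == "__main__":
    jobs = [
        Job("train-llm", "research", gpus=5, priority=2),
        Job("finetune", "research", gpus=1, priority=1),
        Job("inference", "product", gpus=2, priority=3),
    ]
    print(schedule(8, {"research": 4, "product": 4}, jobs))
```

In this example the research team temporarily exceeds its four-GPU quota by borrowing GPUs the product team is not using, so the whole eight-GPU cluster ends up busy. A production scheduler would also reclaim borrowed GPUs (for example by preempting jobs) when their owner needs them back, which this sketch leaves out.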

Customers of Run:ai can expect little turbulence: Nvidia will continue to offer the same products within its own platform. Since the vast majority of data centers already deploy Nvidia hardware, moving to Nvidia’s software suite is a small step.

Next step

Nvidia is not a prolific acquirer, but the deals it does make are often significant. On the software front, the companies it has bought naturally focus on AI development and integrate seamlessly with Nvidia’s existing AI Enterprise suite. The most significant acquisition, however, was Mellanox: its blazing-fast InfiniBand interconnect is the basis for the extremely scalable AI systems Nvidia sells today.

However, the acquisition still requires approval from competition watchdogs worldwide. Regulatory resistance previously sank Nvidia’s planned acquisition of chip designer Arm, although that deal would have meant a major market shift, which is not the case with Run:ai.

For now, the Hopper architecture is the fastest option in Nvidia’s hardware lineup. Blackwell, announced with much fanfare in March, will follow. The DGX H200, an evolved form of the current hardware, has just been delivered to a customer for the first time. OpenAI was the first recipient, with CEO Sam Altman and president Greg Brockman joining Jensen Huang to welcome the system. The message on the DGX H200 reads “to advance AI, computing and humanity.”

OpenAI being the first customer is reportedly not just a publicity stunt; Huang maintains that all buyers have a fair shot at the hardware. Demand for AI chips has been skyrocketing for over a year: in late 2023, Dell had to tell customers to wait 39 weeks for their orders. Nvidia itself has also said that the H200 is not yet available for immediate purchase.

If video memory is the bottleneck for deliveries, the H200 only makes the problem worse, because each chip needs considerably more of it: while H100 chips come equipped with 80GB of HBM3, every H200 contains 141GB of significantly faster HBM3e.
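
Why the higher capacity strains supply comes down to simple arithmetic on the two figures above: each H200 needs roughly 76% more HBM than an H100. The sketch below also scales that to a whole server, assuming an 8-GPU system as the unit of delivery (the DGX H200 is an 8-GPU machine); the function and constant names are illustrative only.

```python
# Per-GPU memory figures as stated in the article (GB)
H100_HBM_GB = 80
H200_HBM_GB = 141
GPUS_PER_SYSTEM = 8  # assumption: an 8-GPU DGX-style server


def memory_comparison(gpus: int = GPUS_PER_SYSTEM):
    """Return the per-GPU capacity ratio and total HBM for both server types."""
    ratio = H200_HBM_GB / H100_HBM_GB
    return ratio, gpus * H100_HBM_GB, gpus * H200_HBM_GB


ratio, h100_total, h200_total = memory_comparison()
print(f"H200 / H100 memory per GPU: {ratio:.2f}x (+{(ratio - 1) * 100:.0f}%)")
print(f"{GPUS_PER_SYSTEM}-GPU system: {h100_total} GB vs {h200_total} GB of HBM")
```

This covers capacity only; it says nothing about how much harder the faster HBM3e modules are to produce, which would compound the shortage further.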
