Meta, Facebook’s parent company, is building a supercomputer environment for the Metaverse.

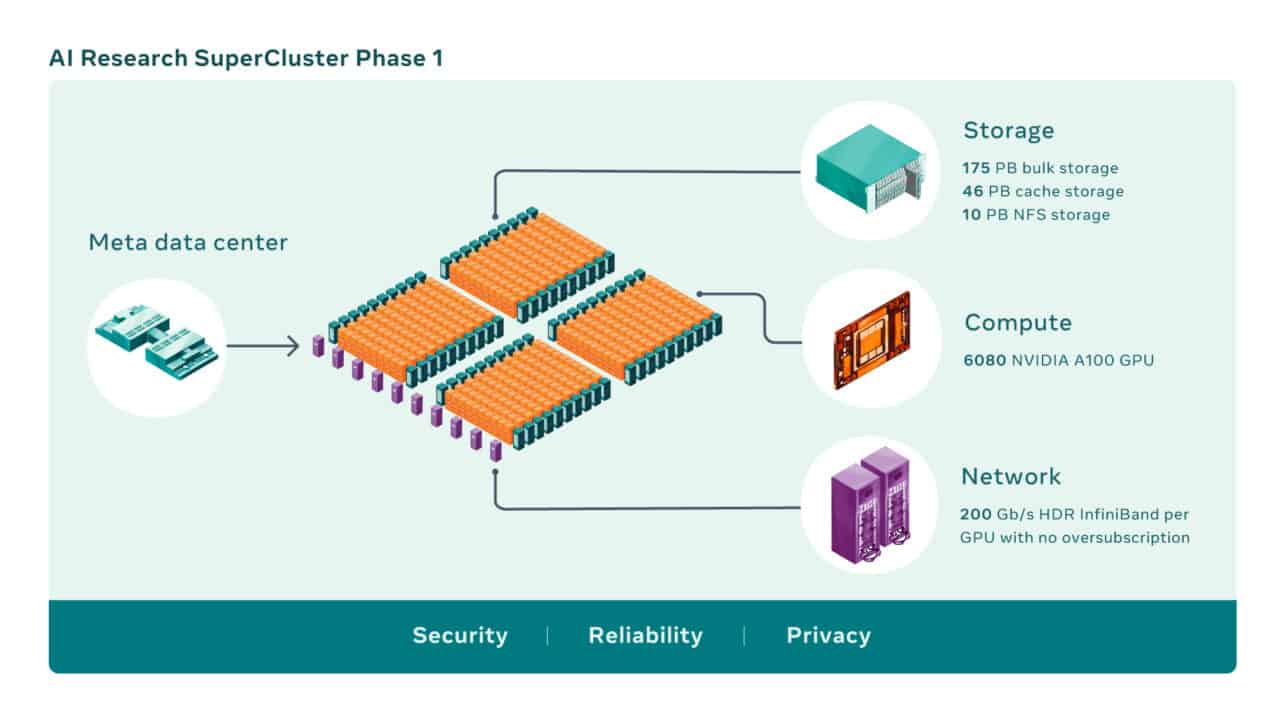

The AI Research SuperCluster (RSC), as Meta calls it, currently consists of 6,080 Nvidia A100 GPUs housed in 760 Nvidia DGX A100 systems. The DGX systems serve as the compute nodes and are connected via an Nvidia Quantum 200 Gbps InfiniBand network fabric.
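As a quick sanity check on these figures, the short Python sketch below divides the GPU count by the number of DGX systems. The function name `gpus_per_system` is purely illustrative and not part of any Meta or Nvidia tooling; the two input numbers are the ones quoted in this article.

```python
# Figures quoted in the article.
TOTAL_GPUS = 6_080
DGX_SYSTEMS = 760


def gpus_per_system(total_gpus: int, systems: int) -> int:
    """Return the number of GPUs per system, or raise if the split is uneven."""
    per_system, remainder = divmod(total_gpus, systems)
    if remainder:
        raise ValueError("GPUs do not divide evenly across systems")
    return per_system


print(gpus_per_system(TOTAL_GPUS, DGX_SYSTEMS))  # prints 8
```

The result of eight GPUs per system matches the standard configuration of a DGX A100.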

In addition, the RSC supercomputer features 175 PB of storage based on Pure Storage FlashArray systems and 46 PB of cache storage based on Penguin Computing’s Altus systems.

AI solutions

The RSC supercomputer plays a major role in the development of Meta’s AI systems. One focus is AI for real-time voice translation among large, multilingual groups of people. In this way, Meta hopes to deliver technology for global collaboration without language barriers.

According to Meta’s researchers, the RSC will also play a major role in developing next-generation computing platforms. With this, the researchers hint at the Metaverse environment that Meta is eagerly working towards.

Faster training of AI models

Although the RSC supercomputer is already being used to train AI models, it remains under construction. According to Meta, exploiting self-supervised learning and transformer-based models requires more complex AI models and more data. Meta’s RSC is being built to handle these requirements.

Initial test results show that the RSC achieves much higher performance than Meta’s legacy computing infrastructure. Its speed reportedly allows models with tens of billions of parameters to be trained to completion in three weeks instead of nine.

Second phase

Meta will steadily expand the capacity of the RSC this year. By the middle of 2022, the supercomputer should have 16,000 GPUs providing nearly 5 exaflops of mixed-precision computing power. The InfiniBand network connecting all GPUs and nodes should eventually feature 16,000 ports. Meta is targeting a storage delivery bandwidth of 16 TB/s and exabyte-scale capacity to handle the high demand for storage space.
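To put the expansion in perspective, the back-of-the-envelope Python sketch below works out how many GPUs are to be added, the growth factor, and the throughput per GPU implied by the 5 exaflops figure. The helper names are illustrative only, and the calculation assumes the quoted total is simply spread evenly over all 16,000 GPUs.

```python
# Figures quoted in the article.
CURRENT_GPUS = 6_080
TARGET_GPUS = 16_000
TARGET_EXAFLOPS = 5.0

TERAFLOPS_PER_EXAFLOP = 1e6  # 1 exaflop = 10^18 FLOPS = 10^6 teraflops


def additional_gpus(current: int, target: int) -> int:
    """GPUs that still have to be installed to reach the target."""
    return target - current


def expansion_factor(current: int, target: int) -> float:
    """How many times larger the target GPU count is than the current one."""
    return target / current


def implied_tflops_per_gpu(exaflops: float, gpus: int) -> float:
    """Per-GPU throughput if the total is divided evenly (an assumption)."""
    return exaflops * TERAFLOPS_PER_EXAFLOP / gpus


print(additional_gpus(CURRENT_GPUS, TARGET_GPUS))                  # 9920
print(f"{expansion_factor(CURRENT_GPUS, TARGET_GPUS):.2f}x")       # 2.63x
print(implied_tflops_per_gpu(TARGET_EXAFLOPS, TARGET_GPUS))        # 312.5
```

The implied 312.5 teraflops per GPU is close to the 312 teraflops that Nvidia publishes as the A100’s peak dense FP16/BF16 Tensor Core throughput. That suggests the 5 exaflops figure is a theoretical mixed-precision peak rather than a measured benchmark result.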