Nvidia announced a range of new solutions for AI functionality in data center environments. Key introductions include the new Grace CPU Superchip processor and the Nvidia H100 GPU.

The Nvidia Grace CPU Superchip is the headline introduction. This Arm Neoverse-based processor is designed for AI and high-performance computing (HPC) in data centers. The processor should offer twice the memory bandwidth (up to 1 TB/s) of current server processors.

Nvidia Grace CPU Superchip

The Nvidia Grace CPU Superchip consists of two CPU chips connected via NVLink-C2C, a new high-speed, low-latency chip-to-chip interconnect. The processor is based on the Armv9 architecture and features 144 Arm cores in a single socket. In addition, it uses LPDDR5x memory with Error Correction Code (ECC) to balance speed and power consumption.

For HPC and AI workloads, the Nvidia Grace CPU Superchip complements the Grace Hopper Superchip, a CPU-GPU module announced last year. The Grace CPU Superchip is compatible with all Nvidia software stacks.

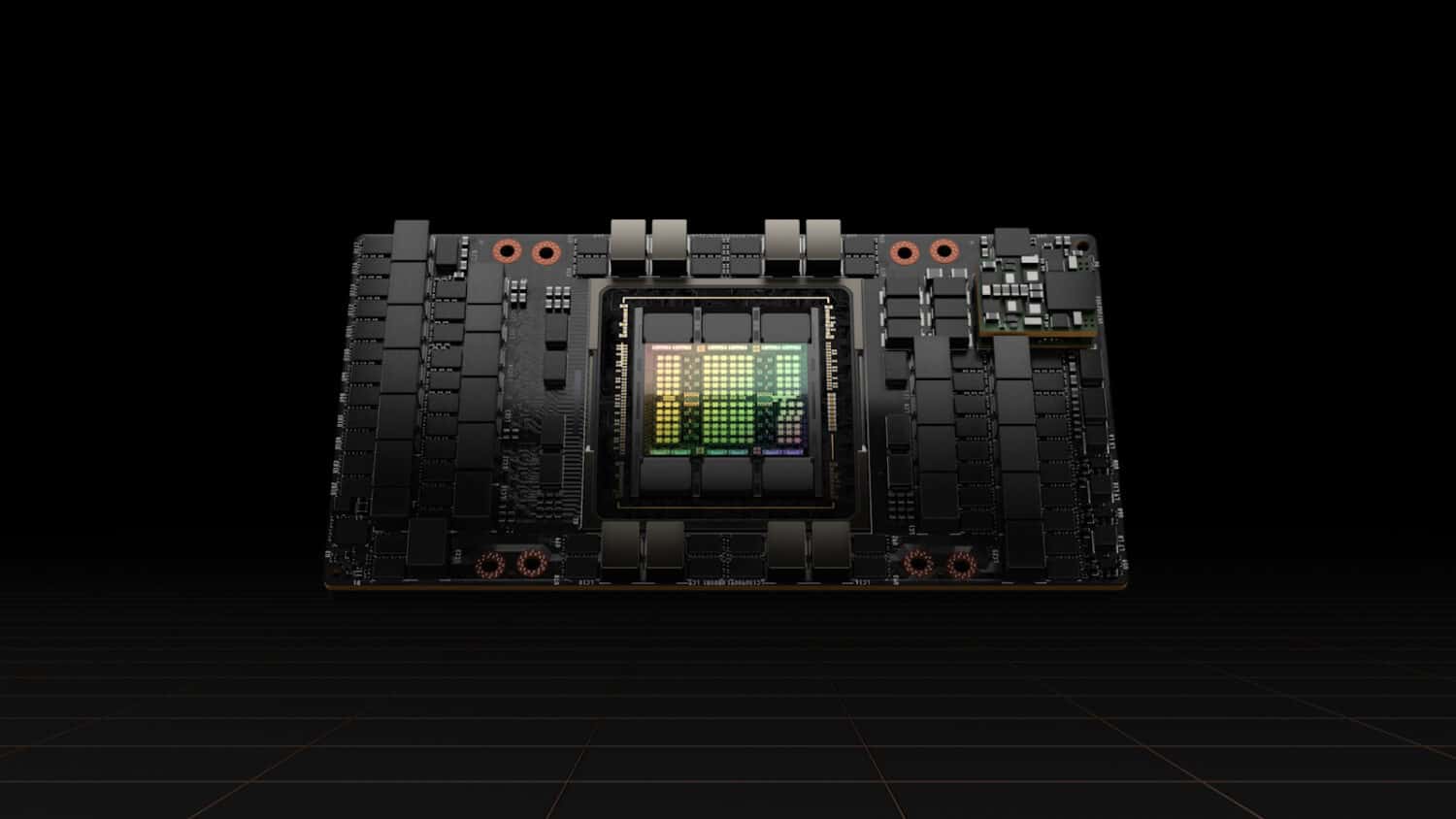

Nvidia H100 GPUs for expanded AI functionality

In addition to the Grace CPU Superchip, Nvidia introduced the Nvidia H100, a GPU based on its Hopper architecture. Among other things, Hopper is designed for scaling and securing HPC workloads in data centers.

The Nvidia H100 GPU contains 80 billion transistors. It also introduces several technologies that make it especially suitable for advanced AI model training, deep recommender systems, genomics and digital twin environments.

Form factors

The Nvidia H100 GPUs are available in SXM and PCIe form factors, which lets them meet a wide range of server requirements. The GPUs can be used in any data center infrastructure, whether on-premises, hybrid cloud or multi-cloud. They are also integrated into the recently introduced Nvidia DGX H100 AI infrastructure platform. This platform consists of eight Nvidia H100 GPUs and can deliver up to 32 petaflops of AI performance.
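
To put the DGX H100 figure in perspective, the quoted numbers can be broken down per GPU. The short Python sketch below is only back-of-the-envelope arithmetic on the two figures in this article. It assumes the 32 petaflops is split evenly across the eight GPUs, which is an idealization and not a measured per-GPU result; the function name `per_gpu_petaflops` is made up for illustration.

```python
# Figures quoted in the article for the Nvidia DGX H100 platform.
DGX_H100_GPU_COUNT = 8
DGX_H100_AI_PETAFLOPS = 32.0


def per_gpu_petaflops(total_petaflops: float, gpu_count: int) -> float:
    """Split a platform-level peak figure evenly across its GPUs.

    Assumption: ideal, even scaling. Real workloads rarely reach
    the quoted peak, so treat the result as an upper bound.
    """
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return total_petaflops / gpu_count


per_gpu = per_gpu_petaflops(DGX_H100_AI_PETAFLOPS, DGX_H100_GPU_COUNT)
print(f"Implied peak per H100: {per_gpu:.1f} petaflops of AI performance")
```

Under that assumption, each H100 in the platform accounts for roughly 4 petaflops of the quoted AI performance.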