HPE presented a new compute offload technology based on the Message Passing Interface (MPI) protocol for its Slingshot Network Interface Cards (NICs). The method allows control planes for both computation and communication to be managed on the NIC itself.

Offloading control plane work often delivers cost savings and stronger security for modern system infrastructures. However, offloading is a trade-off: computations removed from one component end up running on another.

New technology

HPE managed to offload calculations while moving workloads with its new Cassini Slingshot 11 NIC, which works in conjunction with the Rosetta Slingshot Ethernet switch ASIC.

The offloaded calculations are the control plane operations for both computation and communication. HPE uses MPI for this purpose. The protocol is widely used in distributed HPC applications and AI training frameworks, where work is typically offloaded from the CPU to the GPU. Offloading MPI work to the NIC itself is rare.

HPE’s new MPI technology should change this. It’s called ‘stream triggered communication’ and is in use on the Frontier exascale supercomputer at Oak Ridge National Laboratory, a US government research site. The MPI technology for offloading computations differs from traditional MPI communication. The latter is GPU aware, while the new MPI technology is ‘GPU stream aware’.

Traditional MPI technology

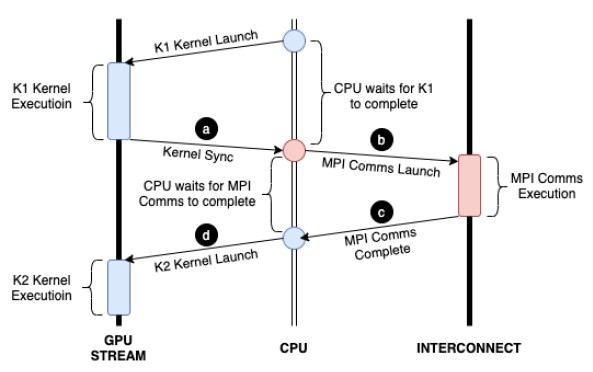

Typical GPU-aware MPI software, which runs on nodes equipped with Nvidia and AMD GPUs, has its own mechanisms for performing peer-to-peer communication between GPUs residing in different nodes. The method is useful for large-scale simulations and AI model training, among other things.

The MPI data moves via Remote Direct Memory Access (RDMA) methods. This allows data transfers between a GPU and a NIC without the CPU intervening. However, the process still requires CPU threads to synchronize activities and orchestrate the movement of data between compute engines. As a result, all communication and synchronization takes place at GPU kernel boundaries: the CPU waits for a kernel to finish before it can start the next transfer.
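
The control flow described above can be sketched with a toy model in Python. This is not real MPI or GPU code: the `ToyGpuStream` class, the `send_to_peer` function and the `compute_*` and `halo_*` names are hypothetical stand-ins chosen for illustration. The sketch only shows that, in this model, the CPU waits for each kernel to finish before it starts a transfer.

```python
from collections import deque


class ToyGpuStream:
    """Hypothetical stand-in for a GPU stream: an in-order queue of kernels."""

    def __init__(self):
        self.pending = deque()
        self.log = []

    def launch_kernel(self, name):
        # Launching is asynchronous: the kernel is only queued here.
        self.pending.append(name)

    def synchronize(self):
        # The CPU blocks until every queued kernel has finished.
        while self.pending:
            self.log.append(f"gpu: run {self.pending.popleft()}")
        self.log.append("cpu: stream synchronized")


def send_to_peer(log, buffer_name):
    # Hypothetical stand-in for a GPU-aware MPI send. The payload would
    # move by RDMA, but the CPU still issues the call.
    log.append(f"cpu: start send of {buffer_name}")


def traditional_step(stream, step):
    stream.launch_kernel(f"compute_{step}")
    stream.synchronize()  # the CPU waits for the kernel to finish
    send_to_peer(stream.log, f"halo_{step}")


stream = ToyGpuStream()
for step in range(2):
    traditional_step(stream, step)

cpu_syncs = sum(1 for entry in stream.log if entry == "cpu: stream synchronized")
print("\n".join(stream.log))
print("CPU synchronizations:", cpu_syncs)
```

In this toy model, every compute step costs one CPU synchronization, so the CPU stays on the critical path of every transfer.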

Stream triggered communication

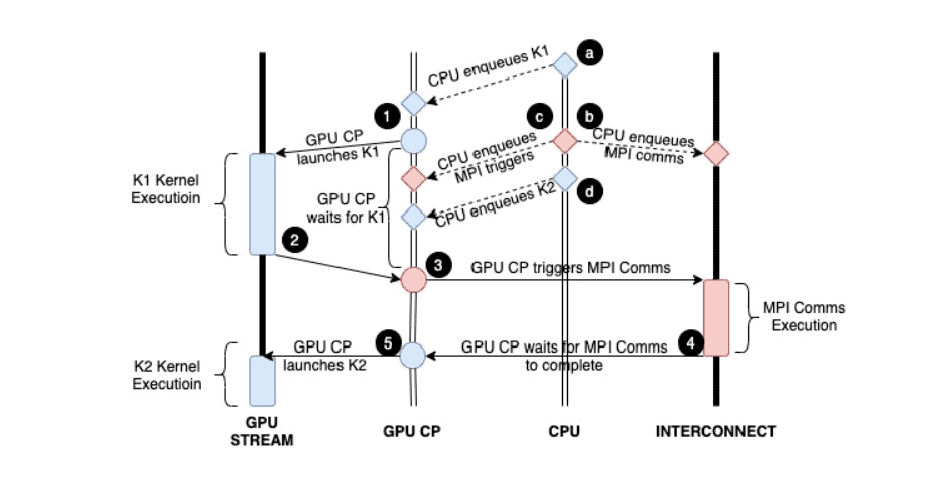

With HPE’s stream-triggered technology, the GPU kernel activities are queued and divided into concurrent streams. The stream from the GPU kernel is packaged into command descriptors, which ensures these activities can be triggered at any given time. In doing so, the control activities are added to the GPU stream and executed by the GPU control processor instead of the CPU.
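
A toy model in Python can illustrate the idea of queuing communication in the stream itself. It is a minimal sketch and not HPE’s implementation or API: the `ToyTriggeredStream` class, its methods and the `compute_*` and `halo_*` names are hypothetical. It assumes only what is described above, namely that sends are recorded as command descriptors in the stream and triggered in order without the CPU stepping in between kernels.

```python
from collections import deque


class ToyTriggeredStream:
    """Hypothetical GPU stream that accepts kernels and send descriptors."""

    def __init__(self):
        self.queue = deque()
        self.log = []
        self.cpu_waits = 0

    def enqueue_kernel(self, name):
        self.queue.append(("kernel", name))

    def enqueue_send(self, buffer_name):
        # The send is recorded as a command descriptor in the stream;
        # nothing is transferred at this point.
        self.queue.append(("send", buffer_name))

    def drain(self):
        # Models the GPU control processor working through the stream in
        # order and triggering each send after the preceding kernel.
        while self.queue:
            kind, name = self.queue.popleft()
            if kind == "kernel":
                self.log.append(f"gpu: run {name}")
            else:
                self.log.append(f"gpu: trigger send of {name}")

    def synchronize(self):
        # The CPU waits once, after all work has been enqueued.
        self.cpu_waits += 1
        self.drain()


stream = ToyTriggeredStream()
for step in range(2):
    stream.enqueue_kernel(f"compute_{step}")
    stream.enqueue_send(f"halo_{step}")  # no CPU wait between kernel and send
stream.synchronize()

print("\n".join(stream.log))
print("CPU waits:", stream.cpu_waits)
```

In this sketch the CPU waits once for the whole sequence, however many steps are enqueued, while the order of kernels and sends is preserved by the stream.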

Ultimately, the technology should allow faster and larger-scale offload calculations. HPE aims to achieve better performance for future interfaces. The development of optimized MPI technology is an important step.
