Google’s progress in artificial intelligence has been attracting the attention of international media for years. With the release of the PaLM API, the tech giant made its large language model available to developers. Google’s chatbot Bard will now also be relying on PaLM. But what exactly is it? And how does it compare to GPT-4, the algorithm behind OpenAI’s AI chatbot that has become a global sensation?

PaLM has a number of precursors. In 2019, Google announced the integration of the BERT model into Google Search. Today, BERT processes almost all English-language search queries and is also active in 70 other languages. The model is able to predict queries and correct missing or misspelled words with great accuracy, in part because it takes into account the context in which words are used.

Since BERT’s launch, the hype surrounding AI has only grown. OpenAI, which has strong ties to Microsoft, roused the tech industry with the introduction of ChatGPT. Not much later, Google released a potential competitor to ChatGPT: Google Bard. Conversations with these chatbots range from profound to nonsensical and depend heavily on the topic and the type of questions the user asks. Whereas ChatGPT relies on OpenAI’s GPT model, Google Bard initially used the self-developed LaMDA. According to Google CEO Sundar Pichai, the relationship between Bard and ChatGPT was that of a “Honda Civic and a fast sports car,” with Bard as the Civic.

GPT-4 and PaLM

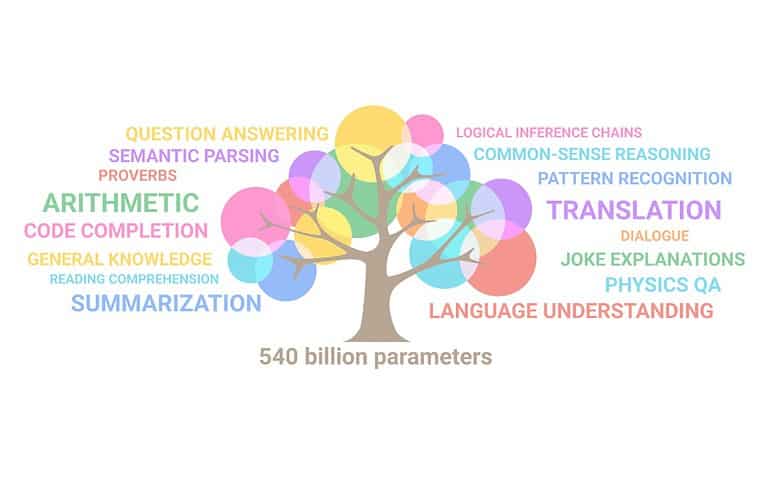

OpenAI has not been twiddling its thumbs, however. The company recently released its newest model, GPT-4, another solid leap forward in generative AI. Google Bard also received a major update earlier this week with the implementation of PaLM (Pathways Language Model). The model consists of 540 billion parameters, and Google has trained it on the most extensive dataset the tech giant has available. Since introducing GPT-4, OpenAI no longer discloses the number of parameters of its models. The number is rumored to be a trillion, but the official documentation does not mention it, so it is difficult to compare the complexity of the two models on that point.

Both GPT-4 and PaLM are LLMs: large language models. Simply put, such models can generate, structure, summarize, translate or otherwise process text and other media based on their parameters. The development of an LLM is largely about tuning and augmenting these variables. In addition, the data on which the model is trained is crucial. Preparing and processing this dataset is enormously time-consuming, to the tune of 50 days or more.

Divergent goals

One asset of PaLM is that it runs on Google’s own hardware, and the company is quite boastful about its capabilities. Thousands of linked TPUs (Tensor Processing Units) allow for the level of scale that sets PaLM apart from Google’s own previous models. According to the company, a single comprehensive AI model is a more efficient solution than a variety of smaller dedicated AI applications. One result is that PaLM performs impressively on school assignments, for example. The model is able to solve math problems intended for 9- to 12-year-olds with a success rate of 58 percent, not far from the 60 percent that schoolchildren score on average.

A fundamental difference between GPT-4 and PaLM is that only PaLM has an API available to developers. ChatGPT, for that matter, offers plugins, which do not provide the same level of access and applicability. Ironically, OpenAI’s product is therefore not open-access, whereas PaLM is. This may reflect the divergent goals behind the two models: where Google seems to want to enable all kinds of applications of this form of AI, GPT-4 is a closed system. Still, we should not overstate this. Because of the substantial hardware requirements, very few developers are able to run large-scale solutions based on PaLM.

PR-wise, chatbots like Bard and ChatGPT are interesting, but it’s easy to overestimate their capabilities. Ultimately, a chatbot does not “think,” but merely predicts which words the underlying model should serve up. AI expert Gary Marcus stated during a podcast interview with The Economist that an LLM is very likely not going to be the end station for AI. “It only has knowledge about the ratio and succession of words,” he said.
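
To give a rough sense of what “predicting words” means, the toy Python sketch below counts which word most often follows another in a tiny sample text and uses that count to guess the next word. It is a deliberately simplified illustration and not how PaLM or GPT-4 work internally: those models are neural networks with billions of parameters. The function names and the sample sentence are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical sample text, chosen only for illustration.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # prints "cat": it follows "the" twice, "mat" only once
print(predict_next(model, "dog"))  # prints None: "dog" never appears in the sample
```

Even this crude counter produces plausible-looking continuations without any notion of what a cat or a mat is, which is the limitation Marcus points to, albeit at a vastly smaller scale.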

Still potential

In conclusion: PaLM has more in common with BERT than it initially appears. Still, there are examples of promising applications of LLMs. For example, Google has introduced Med-PaLM, which applies the AI model to provide medical advice. Research shows that Med-PaLM is an improvement over previous AI applications. However, a real doctor is still better at diagnosing a patient. A comprehensive medical application would be groundbreaking, but it is not yet feasible. Might PaLM be better suited as an assistant?

When Google introduced BERT, it was hard to imagine how quickly AI’s capabilities would expand. We have reached the stage where people like Elon Musk and Steve Wozniak are sounding the alarm. In the AI arms race, Google CEO Sundar Pichai does well to downplay Bard’s capabilities. GPT-4’s black box leaves prominent tech figures wondering whether strict regulation of AI is necessary. Positioning AI as simply a convenient source of inspiration and an assistant may therefore be both the most realistic and the most politically viable tactic.