According to The Information, Cerebras is secretly preparing for an IPO. What is the company all about, and why is it so frequently billed as an “Nvidia challenger”?

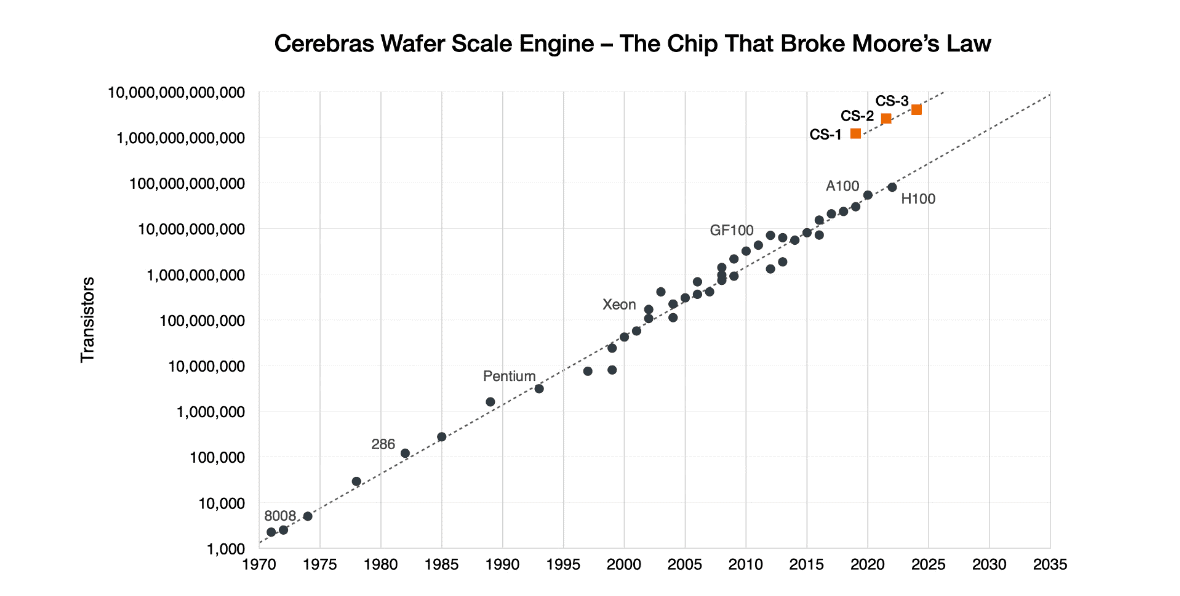

Whereas Nvidia, Intel and other chip companies produce semiconductors barely bigger than a postage stamp, Cerebras’ offering is as large as a dinner plate. With 4 trillion(!) transistors, 900,000 cores and 125 petaflops of AI compute, the most recent Cerebras Wafer-Scale Engine (WSE-3) is the “fastest AI chip in the world,” as the company stated in March. Up to 2,048 of them can be linked together, bringing the total computing power to 256 exaflops. Equalling this figure with Nvidia hardware would require tens of thousands of brand-new Blackwell GPUs.
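The cluster figure follows directly from the per-chip number, as this short sketch shows. The helper names are illustrative, and the per-GPU throughput passed to `devices_needed` is a hypothetical placeholder, not a published Blackwell specification; the outcome of any such comparison depends heavily on the numeric precision being measured.

```python
import math

WSE3_PETAFLOPS = 125          # per-chip AI compute quoted by Cerebras
MAX_SYSTEMS = 2048            # maximum number of connected systems
PETAFLOPS_PER_EXAFLOP = 1000


def cluster_exaflops(systems: int = MAX_SYSTEMS,
                     petaflops_each: float = WSE3_PETAFLOPS) -> float:
    """Aggregate compute of a cluster, assuming perfect linear scaling."""
    return systems * petaflops_each / PETAFLOPS_PER_EXAFLOP


def devices_needed(target_exaflops: float, petaflops_per_device: float) -> int:
    """How many devices of a given throughput match a target (rounded up)."""
    return math.ceil(target_exaflops * PETAFLOPS_PER_EXAFLOP / petaflops_per_device)


total = cluster_exaflops()
print(f"Full cluster: {total} exaflops")

# Hypothetical per-GPU figure, chosen purely for illustration.
ASSUMED_GPU_PETAFLOPS = 10.0
print(f"GPUs needed at {ASSUMED_GPU_PETAFLOPS} PF each:",
      devices_needed(total, ASSUMED_GPU_PETAFLOPS))
```

Note that the calculation assumes compute scales linearly with the number of systems, which real clusters only approximate.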

However, Nvidia is the one global AI chip maker nobody can ignore. Its GPUs can be found in data centers around the globe and, due to their scarcity, can cost tens of thousands of dollars each. Large numbers of them are needed to train AI at scale. What do potential customers gain from Cerebras’ alternative? And who are those customers?

Use cases: pharma, academia, supercomputers

Cerebras promises that the WSE-3 is twice as fast at AI training as its predecessor, the WSE-2. That sounds like something Microsoft, AWS or Google Cloud would take a close look at, but Cerebras does not yet name any of them as customers. The AI chip maker cites all sorts of other organizations, with one thing uniting them: their deployments are highly domain-specific.

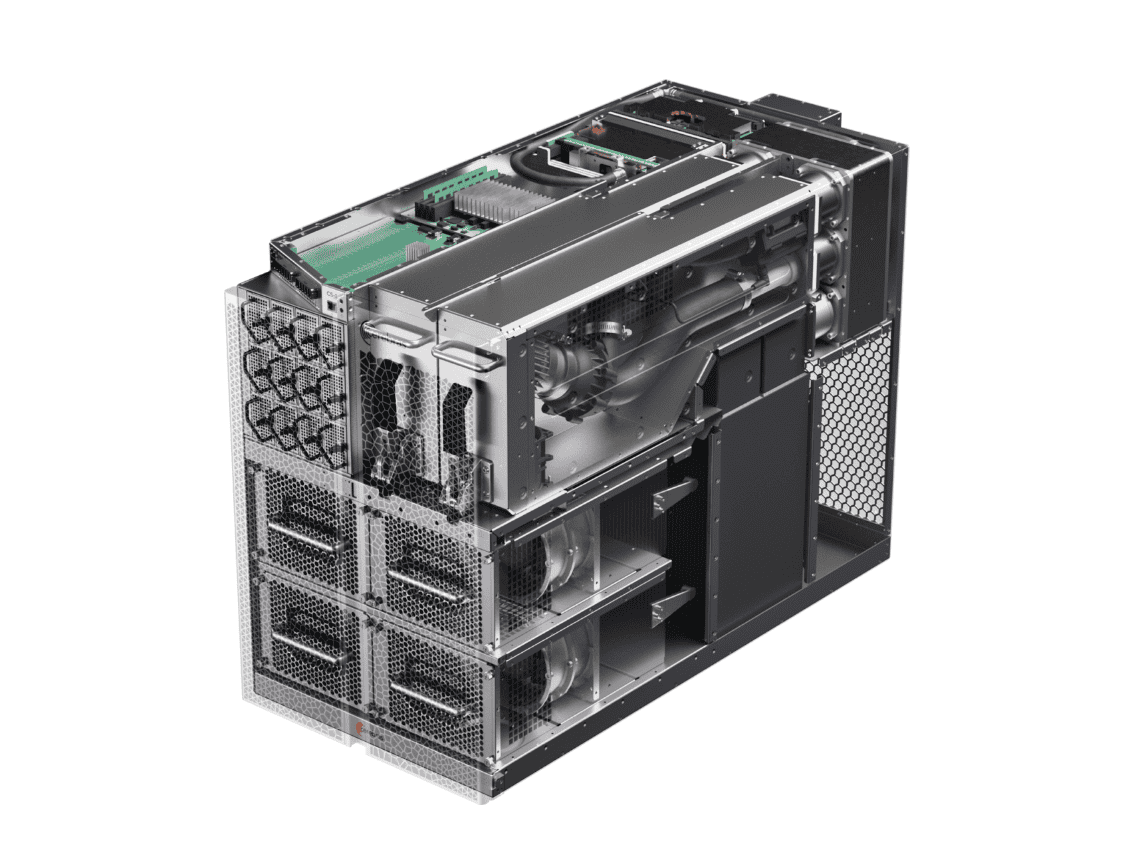

Each Cerebras chip is packaged in a CS system, with the suffix (-1, -2, -3) indicating which version of the Wafer-Scale Engine sits inside. For example, pharmaceutical company AstraZeneca, known to the general public for its Covid vaccine, has deployed the CS-1 to have AI analyze thousands of research papers at breakneck pace. According to the company, deploying a CS-1 cut its training time from two weeks to two days.

Other customers include US national laboratories, TotalEnergies Research and an undisclosed but prominent financial institution in the US. In short: users with a clear, domain-specific purpose and a strong requirement to run workloads as quickly as possible. Although each system costs several million dollars, Cerebras argues that customers still pay far less than if they had turned to Nvidia or another supplier. On top of that, a single CS-1, -2 or -3 takes up only a fraction of the available space in a data center, while Nvidia urges interested parties to turn their entire data center design upside down.

Wafer-scale

As the WSE-3 naming suggests, Cerebras chips are “wafer-scale”. Whereas the likes of Nvidia can extract dozens or even hundreds of workable chips from a single 300mm silicon wafer, depending on die size, a single WSE-3 hogs all the available space. However, chip production always has a margin of error when it comes to etching designs on such wafers. Defects are rare on any individual chip, but because thousands of wafers are processed each day, they occur regularly. Errors come down to minuscule misalignments in the fabrication machines, sometimes off by just a few nanometers from the intended target. In short: a single bump of the machine can already cause problems. For Nvidia, this means it has to discard some dead GPUs, but for Cerebras it could theoretically mean the entire wafer is unusable. Still, because roughly 1.5 percent of the cores are redundant spares that can stand in for defective ones, yield is not a problem in practice. The vast majority, nearly 100 percent, of the potential WSE-3s make it through the TSMC production line and ASML machines in one piece.
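To see why a small pool of spare cores makes such a difference, consider a deliberately simplified yield model. It is a sketch under stated assumptions, not Cerebras’ actual method: defects per wafer follow a Poisson distribution, each defect disables exactly one core, and any spare can replace any defective core. The average defect count used below is a hypothetical value chosen for illustration.

```python
import math

CORES = 900_000
SPARE_FRACTION = 0.015  # the roughly 1.5 percent redundancy mentioned above


def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam), summed in log space to avoid underflow."""
    if lam == 0:
        return 1.0
    log_lam = math.log(lam)
    total = sum(
        math.exp(i * log_lam - lam - math.lgamma(i + 1))
        for i in range(k + 1)
    )
    return min(1.0, total)


def wafer_yield(defects_per_wafer: float, spare_cores: int) -> float:
    """Probability that a wafer has no more defects than it has spare cores."""
    return poisson_cdf(spare_cores, defects_per_wafer)


spare_cores = round(CORES * SPARE_FRACTION)
ASSUMED_DEFECTS_PER_WAFER = 100.0  # hypothetical average, not a TSMC figure

# Without spares, a single defect ruins the wafer: yield is effectively zero.
print("No redundancy:  ", wafer_yield(ASSUMED_DEFECTS_PER_WAFER, 0))
# With spares, the wafer survives as long as defects do not exceed the pool.
print("With redundancy:", wafer_yield(ASSUMED_DEFECTS_PER_WAFER, spare_cores))
```

Under these assumptions, a wafer-sized chip without redundancy would almost never come out flawless, while a spare pool far larger than the expected defect count pushes the yield to nearly 100 percent.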

Because a single Cerebras chip occupies an entire wafer, internal communication is many times faster than when multiple GPUs communicate with each other. With 880 times the on-chip memory and 7,000 times the memory bandwidth of an Nvidia H100, a Cerebras WSE-3 makes that GPU look like a relic of the past. Connections inside a chip can simply move data much faster than any external link, be it PCIe 5.0, NVLink or Ethernet.

Unlike Intel and AMD with their AI chips, Cerebras promises a fundamentally different level of performance from what Nvidia offers. Still, for now, the U.S. company remains a challenger in the chip industry, as one might expect from an organization that is only eight years old. The incumbent Nvidia relies on a huge ecosystem of partners, software and its own hardware to stay ahead in the general AI hardware industry.

Cerebras does remind us that it is the only one still upholding Moore’s Law, which proved an untenable goal for others. In other words, it is the only one that still manages to double the number of transistors per chip every two years. Whether this kind of pure performance will also deliver the scale that makes Cerebras a true Nvidia challenger is unclear. The eventual IPO should offer some clarity in that regard.
