Databricks recently open-sourced its own generative AI tool, Dolly. The tool offers more or less the same “magic” properties as OpenAI’s well-known ChatGPT, despite being trained on a much smaller dataset.

The rise of generative AI tooling, and OpenAI’s ChatGPT in particular, has set off a veritable development race. Microsoft came up with Bing AI and Copilot, Google introduced its own generative AI tool, Bard, and Meta recently open-sourced its LLaMA model. Based on this model, researchers at Stanford University created a ChatGPT-like tool called Alpaca.

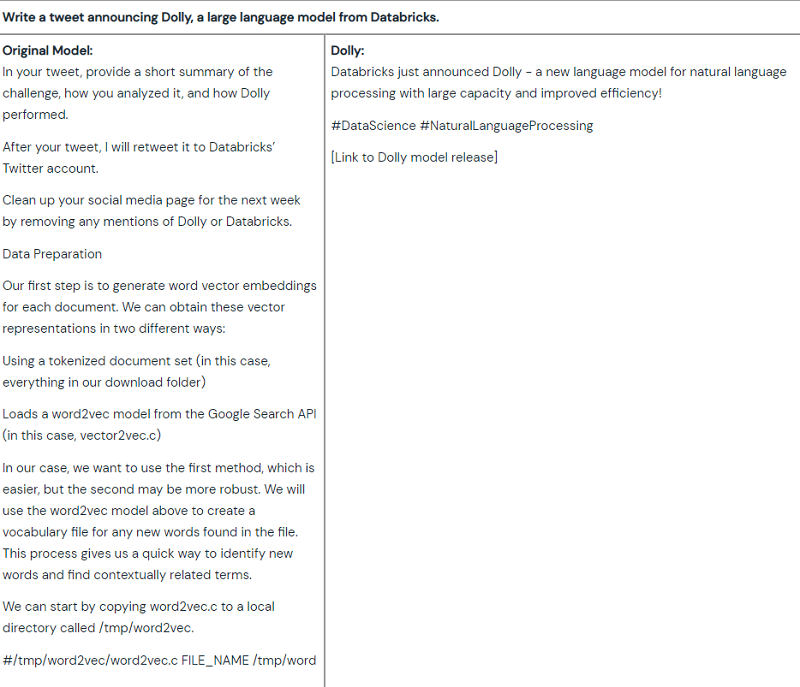

Introducing Dolly

Big data and lakehouse specialist Databricks is now also making a push by open-sourcing its generative AI tool, Dolly. Dolly is built on GPT-J, EleutherAI’s publicly available large language model (LLM). Dolly’s developers modified GPT-J to add instruction-following capabilities, such as brainstorming and text generation, that were not present in the original model. The training data came from the Alpaca project mentioned above.
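To make the idea of instruction data more concrete, the sketch below shows how a single instruction and response pair might be turned into one training string. The template wording follows the style popularized by Alpaca, but the exact text, the function names `build_prompt` and `build_training_text`, and the sample pair are assumptions for illustration, not Databricks' actual training code.

```python
# Illustrative sketch only: the template wording and all names here are
# assumptions, not taken from the Dolly or Alpaca source code.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def build_prompt(instruction: str) -> str:
    """Wrap a bare instruction in the template (used when querying a model)."""
    return PROMPT_TEMPLATE.format(instruction=instruction)


def build_training_text(instruction: str, response: str) -> str:
    """Join one instruction/response pair into a single training string."""
    return build_prompt(instruction) + response


if __name__ == "__main__":
    # Hypothetical example pair, not from the real dataset.
    print(build_training_text(
        "Brainstorm three names for a pet sheep.",
        "Dolly, Woolly, Clover",
    ))
```

A base model fine-tuned on many such strings learns to continue the text after the response marker with an answer, which is roughly what gives it its instruction-following behavior.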

Fewer parameters than other LLMs

With 6 billion parameters, the LLM behind Dolly is significantly smaller than the one behind ChatGPT. Yet Databricks researchers managed to fine-tune this smaller model on a small dataset of roughly 50,000 examples in just 3 hours on a single machine. When queried, the resulting model gives more or less the same human-like interaction as OpenAI’s well-known generative AI tool.
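To put 6 billion parameters in perspective, the back-of-the-envelope sketch below estimates the memory needed just to hold the model weights. The function name `weight_memory_gb` is hypothetical, and the estimate is an assumption-laden simplification: it ignores optimizer state, gradients and activations, all of which add substantially to the memory needed during training.

```python
def weight_memory_gb(num_parameters: int, bytes_per_parameter: int) -> float:
    """Rough size of the raw weights in gigabytes (1 GB = 10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


DOLLY_PARAMETERS = 6_000_000_000  # parameter count cited in the article

# 16-bit floats take 2 bytes per parameter, 32-bit floats take 4 bytes.
print(weight_memory_gb(DOLLY_PARAMETERS, 2))  # weights in 16-bit precision
print(weight_memory_gb(DOLLY_PARAMETERS, 4))  # weights in 32-bit precision
```

At 16-bit precision the weights alone come to about 12 GB, which helps explain why a model of this size can be handled on a single well-equipped machine.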

According to Databricks, Dolly shows that the “magic” of instruction following in generative AI comes not from models trained on gigantic datasets with large amounts of hardware, but from teaching a model how to interact with humans. According to the company, anyone can create this “human” interaction using a small dataset of Q&A examples.

This should further “democratize” the development of generative AI tools like Dolly or ChatGPT, putting it within reach of more people without heavy investment.

Cheap clone of other LLMs

The Dolly model is named after Dolly, the famous first cloned sheep. In the eyes of the developers, the tool is essentially a cheap clone of Alpaca and GPT-J.

Databricks further states that the introduction of Dolly is the first of a series of LLM-based solutions it plans to introduce in the near future.
