Microsoft today released the first version of a new series of super-small AI models: Phi-3 Mini. This so-called small language model (SLM) is small enough to easily run on a smartphone.

Phi-3 Mini arrives a few months after Phi-2, which already had relatively few parameters (2.7 billion) yet still performed well in AI benchmarks despite its small size. The new Phi-3 Mini has 3.8 billion parameters and was trained on a relatively small data set. By comparison, Meta’s open-source Llama models, which are widely used by AI developers, have at least 7 billion parameters.
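To put these parameter counts in perspective, a back-of-the-envelope estimate of how much memory the weights alone occupy can help. The sketch below assumes that memory use is dominated by the weights and that each parameter costs a fixed number of bytes depending on its numeric precision; activations, caches and runtime overhead are ignored, and the figures are not Microsoft’s own.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: bytes per parameter depends only on numeric precision;
# activations, caches and runtime overhead are ignored.

BYTES_PER_PARAM = {
    "fp32": 4.0,  # 32-bit floating point
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit integers (quantized)
    "int4": 0.5,  # 4-bit integers (quantized), two weights per byte
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    models = {"Phi-3 Mini": 3.8e9, "7B model": 7e9}
    for name, params in models.items():
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: {gb:.1f} GB")
```

For Phi-3 Mini this works out to about 7.6 GB at 16-bit precision and about 1.9 GB at 4 bits; for a 7-billion-parameter model, about 14 GB and 3.5 GB respectively. The lower figures help explain why a model of this size is plausible on a smartphone.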

Functionality and performance

According to Microsoft, Phi-3 Mini is considerably better at programming and reasoning than its predecessors, Phi-1 and Phi-2. Phi-1 mainly excelled at writing code, while Phi-2’s strength lay in explaining how it reached a particular conclusion. Phi-3 combines these skills and performs both better than either predecessor.

No miracles should be expected from Phi-3 Mini: it cannot match the performance of OpenAI’s industry-leading GPT-4, for example. However, Microsoft says its SLM produces output on par with LLMs up to ten times its size. Larger models can also be squeezed into a small memory budget through quantization, which stores their weights at lower numerical precision, but this can significantly reduce accuracy. The alternative is simply a smaller model, and that approach appears to be paying off for Microsoft.
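Quantization can be illustrated with a deliberately simple scheme: every weight is divided by one shared scale factor and rounded to an 8-bit integer. The sketch below assumes symmetric per-tensor int8 quantization, a generic textbook variant; real toolchains use more elaborate schemes (per-channel scales, 4-bit formats), and this is not how any specific Phi-3 or Llama build is produced.

```python
# Minimal sketch of symmetric per-tensor int8 quantization.
# Assumption: generic textbook technique, not the method used by any
# particular model or library.


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each scaled weight to the nearest integer and clamp to int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the stored integers."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.012, -0.503, 0.25, 0.9, -0.0031]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print(f"max rounding error: {max_err:.5f} (bound: scale/2 = {scale / 2:.5f})")
```

The rounding step is where accuracy is lost: each weight can be off by up to half the scale factor, and across billions of parameters those small errors can add up.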

Phi-3 Mini was trained according to a “curriculum” inspired by the way children learn from bedtime stories: books with relatively simple words and sentence structures that cover a wide range of topics. Microsoft had other LLMs write such ‘children’s books’ using only a list of 3,000 words, and Phi-3 Mini was then trained on the results, Microsoft’s Corporate Vice President of Azure AI Platform told The Verge.
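A vocabulary constraint like the one described could, for instance, be enforced with a simple filter that flags generated texts containing words outside the approved list. The sketch below is hypothetical: the tiny `ALLOWED_WORDS` set stands in for the 3,000-word list, the regex tokenization is an assumption, and it is not a description of Microsoft’s actual pipeline.

```python
import re

# Hypothetical sketch: check whether a generated text sticks to an allowed
# vocabulary. The word list and tokenization rule are illustrative
# assumptions, not Microsoft's actual pipeline.

ALLOWED_WORDS = {"the", "cat", "sat", "on", "a", "mat", "and", "dog", "ran"}


def words_outside_vocabulary(text: str, vocabulary: set[str]) -> set[str]:
    """Return the lowercase words in `text` that are not in `vocabulary`."""
    words = re.findall(r"[a-z']+", text.lower())
    return {w for w in words if w not in vocabulary}


def is_within_vocabulary(text: str, vocabulary: set[str]) -> bool:
    """True if every word in `text` appears in `vocabulary`."""
    return not words_outside_vocabulary(text, vocabulary)


if __name__ == "__main__":
    story = "The cat sat on a mat, and the dog ran."
    print(is_within_vocabulary(story, ALLOWED_WORDS))  # True
    print(words_outside_vocabulary("The cat jumped.", ALLOWED_WORDS))  # {'jumped'}
```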

Applications for Phi-3 Mini

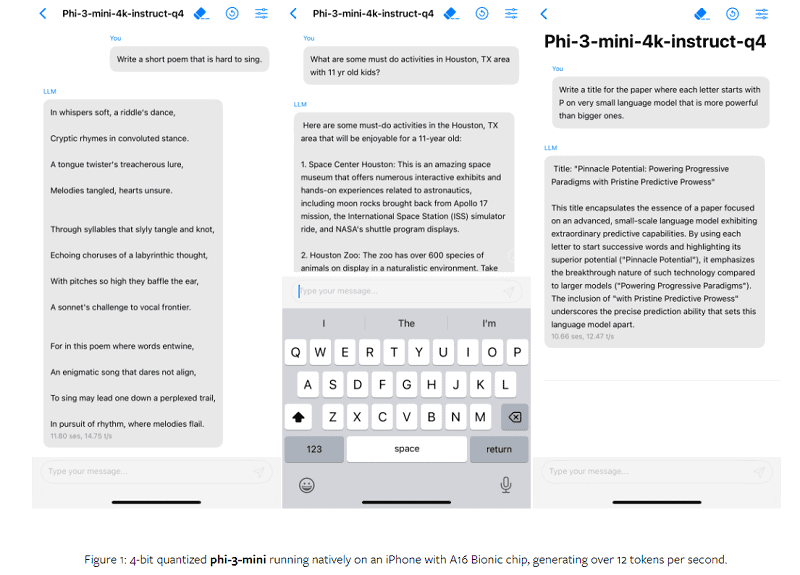

Microsoft’s new AI model is especially suited to companies’ own applications. Phi-3 Mini also runs on conventional processors, so no AI PC is required. Even smartphones could run the model. Apple is working on this as well, as research it has published revealed.

More Phi-3 versions to come

Phi-3 Mini is just the start. Microsoft also plans to release Phi-3 Small (7 billion parameters) and Phi-3 Medium (14 billion parameters) soon, although it is not yet known when these SLMs will appear.

Phi-3 Mini is available now through Azure, as well as on the Hugging Face and Ollama AI platforms.