OpenAI introduced four new AI tools at its DevDay 2024 event. The tools Realtime API, vision fine-tuning on the GPT-4o LLM, Prompt Caching and Model Distillation help developers create more sophisticated AI applications, often at a lower cost.

By introducing the four tools, OpenAI aims to help developers of AI applications realize them more easily, mainly by streamlining the workflows necessary and reducing development costs.

The first tool is Realtime API, which is now in beta. This tool allows developers to build low-latency multimodal experiences into their apps. Developers can then develop apps similar to the Advanced Voice Mode speech feature in ChatGPT, for example. The tool allows developers to set up natural speech-to-speech conversations with six predefined voices.

OpenAI also announced audio input and output in the Chat Completions API, for developers who do not need the low latency of Realtime API for their applications.

The cost of text tokens for Realtime API is 5 dollars for 1 million input tokens and 20 dollars per 1 million output tokens. Audio input will cost 100 dollars per 1 million tokens, and audio output 200 dollars per 1 million tokens. That makes the cost per minute 0.06 dollars for 1 minute of audio input and 0.24 dollars for output.

Vision Fine-Tuning

The second announcement is Vision Fine-Tuning for the GPT-4o LLM. This allows developers to fine-tune their LLM using images and text. This is especially important for enhanced visual search functionality, improved object detection for autonomous vehicles and highly accurate medical analysis.

The feature is now available for the latest GPT-4o snapshot, gpt-4o-2024-08-06, within paid versions of GPT-4o. Until the end of this month, the AI giant is also offering 1 million training tokens per day for free, so developers can test GPT-4o fine-tuning with images.

Starting in November, training will cost 25 dollars per 1 million tokens, inference will cost 3.75 dollars per 1 million input tokens. Output will cost 15 dollars per 1 million output tokens.

Prompt Caching

Another new AI tool for developers is Prompt Caching in the API. With this feature, OpenAI finally follows competitors such as Google and Anthropic, which have offered this feature for some time. Prompt Caching should help developers reduce latency and costs for the API. With this service, developers can reduce their processing fees by 50 percent and also speed them up.

Prompt Caching is automatically enabled for the latest versions of OpenAI’s GPT-4o, GPT-4o mini-, o1-, and o1 mini-LLMs. Finished versions of these specific models also automatically have Prompt Caching functionality enabled.

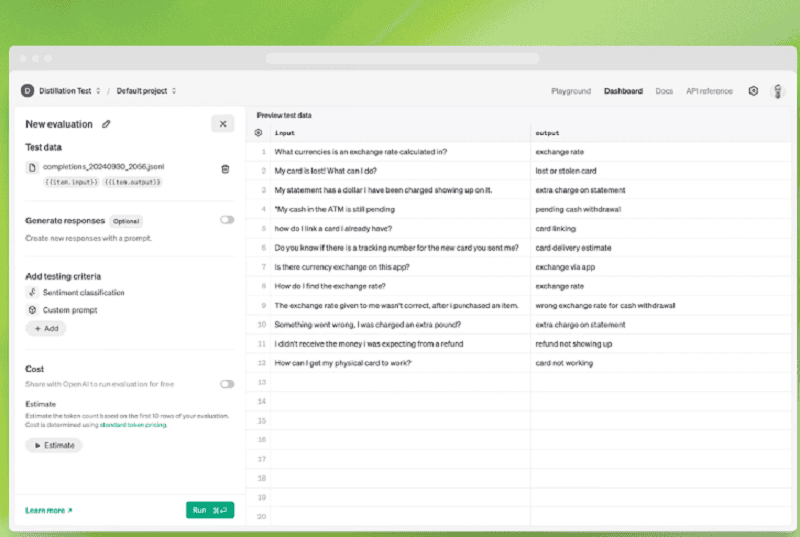

Model Distillation suite

Last but not least, OpenAI comes with a new Model Distillation suite. This suite helps developers fine-tune smaller LLMs using the outputs of the larger models. This functionality allows developers to give smaller LLMs the performance of the larger models for particular tasks, but at a much lower cost.

Whereas model distillation was previously a complicated process and often required tools that could not simply talk to each other, the now released suite allows the entire distillation pipeline to occur within the platform.

Model Distillation is available to all developers. Again, the AI giant is offering 2 million free training tokens per day for GPT-4o mini and 1 million free tokens for GPT-4o until the end of this month. Starting in November, training and running a distilled model will cost the same as current fine-tuning. During DevDay 2024, OpenAI did not announce any new LLMs.