OpenAI has just announced GPT-4.5, its latest large language model to power ChatGPT. It is the largest model OpenAI has released to date, although the company has not disclosed exactly how many parameters the LLM has.

OpenAI frames its progress around two major advances: unsupervised learning and reasoning. Unsupervised learning means that the LLM discovers patterns in data by itself, without a human having to indicate whether those patterns are correct, and GPT-4.5 is OpenAI's showcase for scaling up this approach. Reasoning is the domain of earlier models such as o1 and o3-mini, and OpenAI has doubtless applied lessons learned from them to GPT-4.5.

Reasoning to tackle tricky questions

These improvements are meant to help with complex subject matter and everyday questions alike. Reasoning gives a model extra output tokens to work through a problem before it answers. OpenAI only shows a summary of that process, which obscures the model's actual internal thinking. DeepSeek, Gemini Flash Thinking and Claude 3.7 Sonnet, by contrast, show this “thinking process” in its unedited form. Unsupervised learning, meanwhile, strengthens the “intuition” that GPT-4.5 is supposed to possess natively.

A major problem with LLMs is that they have no real concept of reality. OpenAI points to GPT-4.5's greater “world knowledge”: the general assumptions the model makes about the world match reality more often. The company also states that GPT-4.5 does not think step by step as o1 does, but is instead “inherently smarter.” In other words, GPT-4.5 is not a reasoning model like DeepSeek R1 or OpenAI's own o1.

Synthetic data

With better intuition, GPT-4.5 is intended to feel more natural to converse with than earlier models. The use cases OpenAI highlights reflect this, such as help with writing, programming and solving practical problems.

Although o1 already provides comprehensive, “reasoned” answers, OpenAI shows examples of GPT-4.5 outputs that are structured more accessibly. Readers can delve into a topic without being thrown in at the deep end, with the new model guiding them through the thinking process.

Much has been said and written about AI distillation, a process in which a larger model teaches a smaller model how to answer. Distilled versions of DeepSeek R1, for example, reason in much the same way as the full model despite having far fewer parameters (sometimes only 1.5 or 8 billion, versus 671 billion for the full model). OpenAI even accused DeepSeek of API abuse, claiming it had generated outputs from ChatGPT and trained its own LLM on them, in violation of OpenAI's terms and conditions. OpenAI went in the opposite direction with a similar process: smaller models helped train GPT-4.5, with their outputs serving as synthetic data.
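Neither DeepSeek's nor OpenAI's exact recipe is described here. As a rough illustration only, the minimal Python sketch below shows the classic logit-matching form of distillation: the student is penalized for diverging from the teacher's softened probability distribution over the next token. The vocabulary, scores, temperature and helper names are all hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperatures flatten the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's,
    scaled by temperature**2 (the classic soft-target distillation loss)."""
    p = softmax(teacher_logits, temperature)  # teacher's "soft targets"
    q = softmax(student_logits, temperature)  # student's current predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical next-token scores over a toy four-token vocabulary.
teacher = [4.0, 1.5, 0.5, -1.0]
close_student = [3.8, 1.6, 0.4, -0.9]  # roughly agrees with the teacher
far_student = [-1.0, 0.5, 1.5, 4.0]    # prefers the opposite token

print(distillation_loss(teacher, close_student))  # small loss
print(distillation_loss(teacher, far_student))    # much larger loss
```

Training would repeatedly nudge the student's parameters to lower this loss. Production pipelines may instead fine-tune the student directly on text the teacher generates; this sketch covers only the logit-matching variant.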

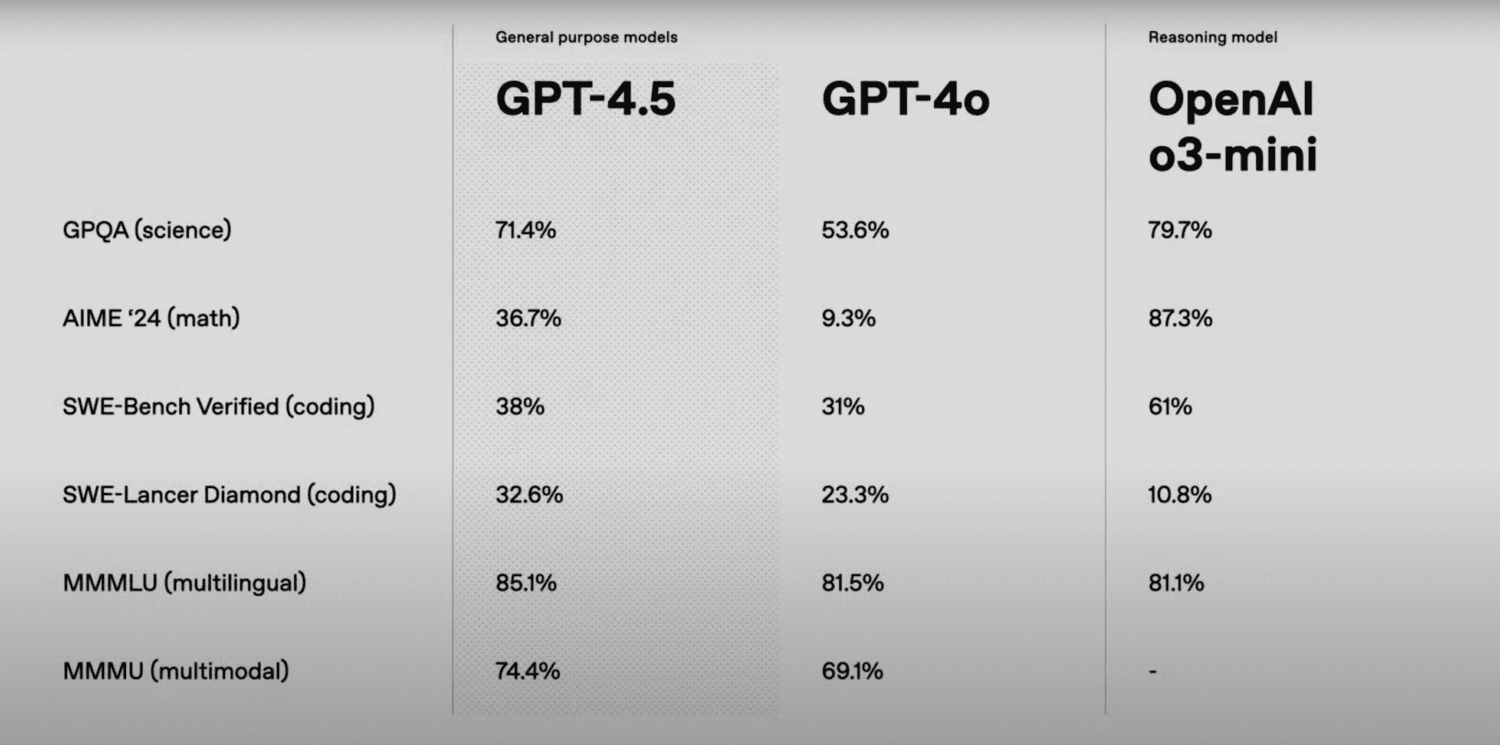

Benchmarks

OpenAI has already published several benchmark results for GPT-4.5. SimpleQA tests LLMs with short, fact-seeking questions on a wide range of topics. GPT-4.5 scored 62.5 percent here, significantly higher than GPT-4o (38.6 percent), o1 (47 percent) and o3-mini (15 percent). It also hallucinated less than the other models tested, with a hallucination rate of 37.1 percent versus 61.0 percent for GPT-4o, 44 percent for o1 and 80.3 percent for o3-mini.

OpenAI also taps into the informal term of the moment among AI testers: “vibes.” These are the perceived strengths and weaknesses of an LLM that a benchmark cannot always capture. A well-known example is Anthropic's Claude, which tends to be more popular than its test results suggest. OpenAI nevertheless aims to make vibes quantifiable, much as LM Arena does by having users pick the better of two answers without knowing which LLMs produced them. In a similar blind “vibe” evaluation, GPT-4.5 turned out to be meaningfully more popular than its predecessor, GPT-4o. The real test, however, will be the new model's mass rollout to ChatGPT users.
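OpenAI has not published its evaluation setup, so the following is only a minimal Python sketch of the general blind pairwise protocol that LM Arena uses: answers are shown in random order without labels, and wins are tallied afterwards. The model functions, the rater and the `run_blind_comparisons` and `win_rate` helpers are all hypothetical.

```python
import random
from collections import Counter

def run_blind_comparisons(prompts, model_a, model_b, rater, seed=0):
    """Show a rater two anonymous answers per prompt and tally which model wins."""
    rng = random.Random(seed)
    tally = Counter()
    for prompt in prompts:
        candidates = [("model_a", model_a(prompt)), ("model_b", model_b(prompt))]
        rng.shuffle(candidates)  # randomize order so position reveals nothing
        # The rater sees only the prompt and the two answer texts, never the labels.
        choice = rater(prompt, candidates[0][1], candidates[1][1])
        if choice is None:
            tally["tie"] += 1
        else:
            tally[candidates[choice][0]] += 1
    return tally

def win_rate(tally, label):
    """Share of decisive (non-tie) comparisons won by `label`."""
    decisive = tally["model_a"] + tally["model_b"]
    return tally[label] / decisive if decisive else 0.0

# Hypothetical stand-ins for two chat models and a human rater.
def detailed_model(prompt):
    return f"A step-by-step answer to: {prompt}"

def terse_model(prompt):
    return "Not sure."

def prefers_longer(prompt, first, second):
    """Toy rater: returns 0 or 1 for the preferred answer, None for a tie."""
    if len(first) == len(second):
        return None
    return 0 if len(first) > len(second) else 1

tally = run_blind_comparisons(["q1", "q2", "q3"], detailed_model, terse_model, prefers_longer)
print(win_rate(tally, "model_a"))  # 1.0
```

In a real evaluation the rater would be a person; the length-based stand-in only keeps the example deterministic.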

Post-training problems

Because OpenAI had never trained a model this large before, it had to change some of its methods. An entirely new setup was needed for the post-training phase because the ratio of available data to parameters was completely different. Previously, there was always more data to feed the LLM as its parameter count grew. Put simply, that data has now run out: AI builders have already used essentially the entire accessible internet in previous training runs. You can't go much further than that without bringing synthetic data into the fold and adding more complex post-training steps. We've already written about the problems this has caused OpenAI, including reports that “Orion”, GPT-4.5's codename, was suffering from a lack of data.

OpenAI has now revealed how it tackled this issue. The new methodology revolves around supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF). These are familiar techniques, but OpenAI believes it has used them to make GPT-4.5 more production-ready than any previous model. It is therefore no surprise that the preview phase is expected to be short-lived.

Pre-training, the initial phase in which the model's parameters are learned from vast amounts of data, has also been revamped. OpenAI made “aggressive” use of low-precision pre-training, which requires less computing power than higher-precision methods. In addition, GPT-4.5 was trained in multiple data centers simultaneously, a feat that, to our knowledge, is unique among LLMs.
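OpenAI has not said which number formats it used. To illustrate the basic trade-off only, the minimal Python sketch below uses IEEE 754 half precision (float16) as a stand-in and stores the same value in three formats via the standard library's `struct` module: fewer bytes per value means less memory and data to move around, at the cost of rounding error. The `round_trip` helper is a hypothetical name chosen for this example.

```python
import struct

def round_trip(value, fmt):
    """Pack `value` into the given binary format and unpack it again."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

# "<e" = IEEE 754 half precision, "<f" = single precision, "<d" = double precision.
for name, fmt in [("float16", "<e"), ("float32", "<f"), ("float64", "<d")]:
    stored = round_trip(0.1, fmt)
    print(f"{name}: {struct.calcsize(fmt)} bytes per value, 0.1 stored as {stored!r}")
```

Half precision uses a quarter of the bytes of float64 but stores 0.1 as 0.0999755859375. Real low-precision training schemes often keep some values, such as master copies of the weights, at higher precision; this sketch only shows the storage side.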

Availability

GPT-4.5 will be available as a research preview for ChatGPT Pro users and for developers via the API. Plus and Team users will get access starting next week.

The release of GPT-4.5 is not entirely unexpected. An announcement in ChatGPT's Android app had already made clear that a preview version of this model was on the way.
