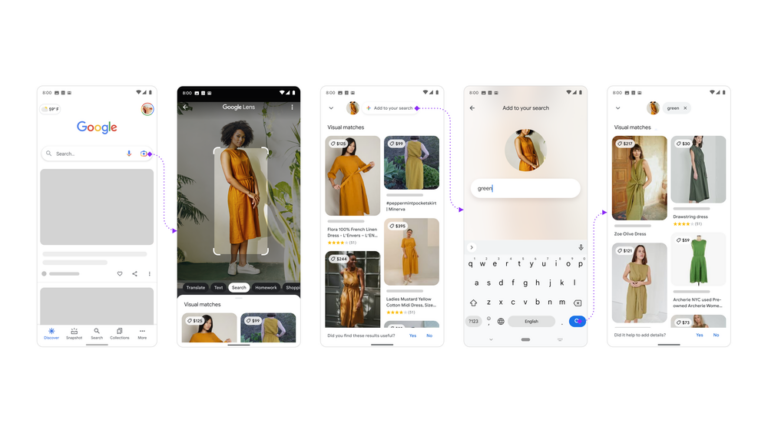

Google announced today that Google Lens now offers a new “multisearch” feature that lets users search with a combination of images and text.

Google Lens, the company’s image recognition tool, lets users point their phone camera at almost anything, or take a photo of it, and search Google for related information.

With the new tool, you could, for example, snap a photo of a garment, type “pinstripes,” and ask Google to find a similar shirt with that pattern.

The new capabilities

According to Google, which first showcased the feature in September, multisearch is currently available as a beta in the United States. For now, it supports only English-language searches.

To use it, users open the Google Search app and tap the Lens camera icon to take a photo or select one from their gallery. They then swipe up, tap “+ add to your search,” and type a few words.

Google offered a few examples of what the new format can do. Users might, for instance, ask questions about an object right in front of them. They might also narrow down search results by photographing an item and specifying a particular color, brand, or other visual attribute.

Ideal for shopping

According to Google, multisearch is ideal for shopping queries, but it has other uses too. For example, someone who buys or receives a plant and isn’t sure what it is could take a picture of it and add the words “care instructions” to learn how to look after it.

The feature could also help in physical stores. A shopper might see a dress she wants to buy, only to find the store doesn’t carry it in her preferred color. She could simply take a picture of it and use Google Lens to search for a “red” version of the garment.
