Nvidia unveiled the H200 chip at the Supercomputing 2023 event. Normally, a memory upgrade to Nvidia hardware would hardly cause a stir. However, the GPU maker is launching the H200 into a market with practically unlimited demand. More and faster memory is not a drastic change in specifications, but it will keep TSMC's factories working overtime.

With the H200, Nvidia builds on the H100, using the same Hopper architecture as its underlying design. The GPU presumably also features the same number of CUDA and Tensor cores, although Nvidia has not yet confirmed this.

The change lies in the memory. Whereas the H100 used 80GB of HBM3, the H200 contains 141GB of HBM3e. Accordingly, the GPU uses a wider memory bus (6144-bit versus 5120-bit). Memory bandwidth has grown as well, from 3.35TB/s to 4.8TB/s.
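To put those figures side by side, the short Python sketch below computes the relative increase in capacity, bus width and bandwidth, plus a rough per-line data rate derived from bandwidth and bus width. The spec values are the ones quoted above; the per-line rate is only an illustrative derivation (assuming decimal terabytes and 8 bits per byte), not a figure Nvidia has published, and the function names are hypothetical.

```python
# H100 vs H200 memory figures as quoted in the article.
SPECS = {
    "H100": {"memory_gb": 80, "bus_bits": 5120, "bandwidth_tbs": 3.35},
    "H200": {"memory_gb": 141, "bus_bits": 6144, "bandwidth_tbs": 4.8},
}


def percent_change(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100.0


def per_line_rate_gbps(bandwidth_tbs: float, bus_bits: int) -> float:
    """Rough data rate per bus line in Gbit/s.

    Assumption: decimal TB (1e12 bytes) and 8 bits per byte.
    """
    return bandwidth_tbs * 1e12 * 8 / bus_bits / 1e9


def compare(old: dict, new: dict) -> dict:
    """Derive the headline differences between two spec entries."""
    return {
        "memory_pct": percent_change(old["memory_gb"], new["memory_gb"]),
        "bus_pct": percent_change(old["bus_bits"], new["bus_bits"]),
        "bandwidth_pct": percent_change(old["bandwidth_tbs"], new["bandwidth_tbs"]),
        "bandwidth_delta_tbs": new["bandwidth_tbs"] - old["bandwidth_tbs"],
        "old_line_gbps": per_line_rate_gbps(old["bandwidth_tbs"], old["bus_bits"]),
        "new_line_gbps": per_line_rate_gbps(new["bandwidth_tbs"], new["bus_bits"]),
    }


if __name__ == "__main__":
    for name, value in compare(SPECS["H100"], SPECS["H200"]).items():
        print(f"{name}: {value:.2f}")
```

The result shows a roughly 76 percent capacity gain but only a 20 percent wider bus; the bandwidth gain of about 43 percent implies that each bus line also runs faster (roughly 5.23 versus 6.25 Gbit/s under these assumptions), which fits the move from HBM3 to HBM3e.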

LLM specialist

Although the H200 won't launch until the second quarter of 2024, some conclusions can already be drawn from the details Nvidia has shared. The largest LLMs on offer are currently constrained by the 80GB of VRAM (video memory), and training speed is partly determined by memory bandwidth. With 76 percent more VRAM and 1.45TB/s of additional bandwidth, an LLM's training run should complete significantly faster. Incidentally, the 141GB is just short of the theoretical maximum of 144GB that the Hopper architecture would support. The 3GB of slack also allows Nvidia to ship chips with some memory disabled, resulting in better yields.
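The capacity arithmetic can be sketched in a few lines of Python. Two assumptions are labeled here and in the code: the 144GB ceiling is modeled as six HBM3e stacks of 24GB each (the article only gives the 144GB total), and model size is estimated from weights alone at 2 bytes per parameter (FP16/BF16). Real training needs far more memory per parameter; mixed-precision training with the Adam optimizer is commonly estimated at about 16 bytes per parameter before activations. All names are hypothetical.

```python
# Assumption: the 144 GB ceiling comes from six HBM3e stacks of 24 GB each.
STACKS = 6
GB_PER_STACK = 24
ENABLED_GB = 141  # capacity Nvidia actually enables on the H200


def memory_slack(stacks: int, gb_per_stack: int, enabled_gb: int) -> tuple[int, int]:
    """Return (theoretical ceiling, capacity left disabled) in GB."""
    ceiling = stacks * gb_per_stack
    return ceiling, ceiling - enabled_gb


def max_params_billions(memory_gb: float, bytes_per_param: float = 2.0) -> float:
    """Upper bound on parameters (in billions) whose state fits in memory.

    Uses decimal GB and ignores activations, KV cache and framework
    overhead, so real limits are lower. bytes_per_param=2 models FP16
    weights only; ~16 approximates mixed-precision Adam training state.
    """
    return memory_gb / bytes_per_param


if __name__ == "__main__":
    ceiling, slack = memory_slack(STACKS, GB_PER_STACK, ENABLED_GB)
    print(f"ceiling {ceiling} GB, disabled {slack} GB")
    for gpu, gb in (("H100", 80), ("H200", 141)):
        print(f"{gpu}: ~{max_params_billions(gb):.1f}B params (FP16 weights only), "
              f"~{max_params_billions(gb, 16):.1f}B params (Adam training state)")
```

Under these rough assumptions, a single H200 could hold the FP16 weights of a model of roughly 70 billion parameters versus roughly 40 billion on an H100, which is why the extra capacity matters for large LLMs even before bandwidth is considered.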


Specifically, as AnandTech noted, the H200 looks like the GPU portion of the GH200 Superchip, which HPE presented yesterday in the first complete system built around it. The GH200 combines an Arm-based CPU with a GPU. However, the GH200's HBM3 memory differs significantly from the H200's new HBM3e, as mentioned earlier.

Compatible

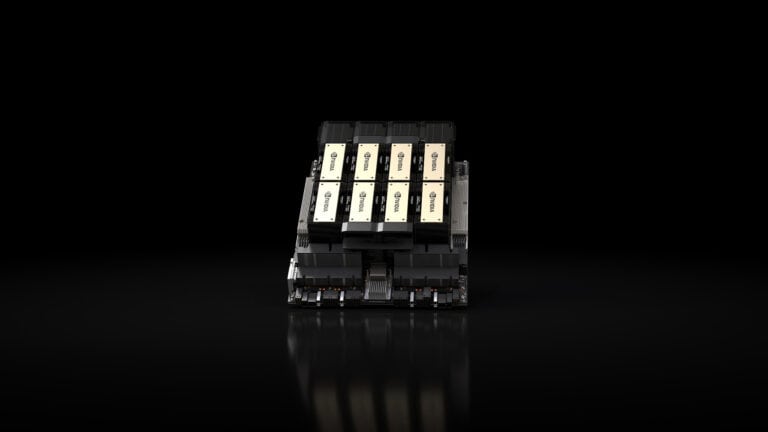

Since the H100 has sold for around 40,000 euros apiece this year, few parties will be able to buy these coveted GPUs when they arrive next spring. Those who do have the cash (and usually buy several GPUs at once) can at least upgrade to the H200 if they already run H100s: the chip is fully compatible with existing servers that house up to eight of these GPUs.

With the H200, Nvidia brings a modest refresh to its existing product line. Still, demand will remain undiminished as long as the AI hype continues. It remains to be seen when Nvidia will introduce the successor (codename “Blackwell”), which outside of Nvidia is known only through rumors for now.
