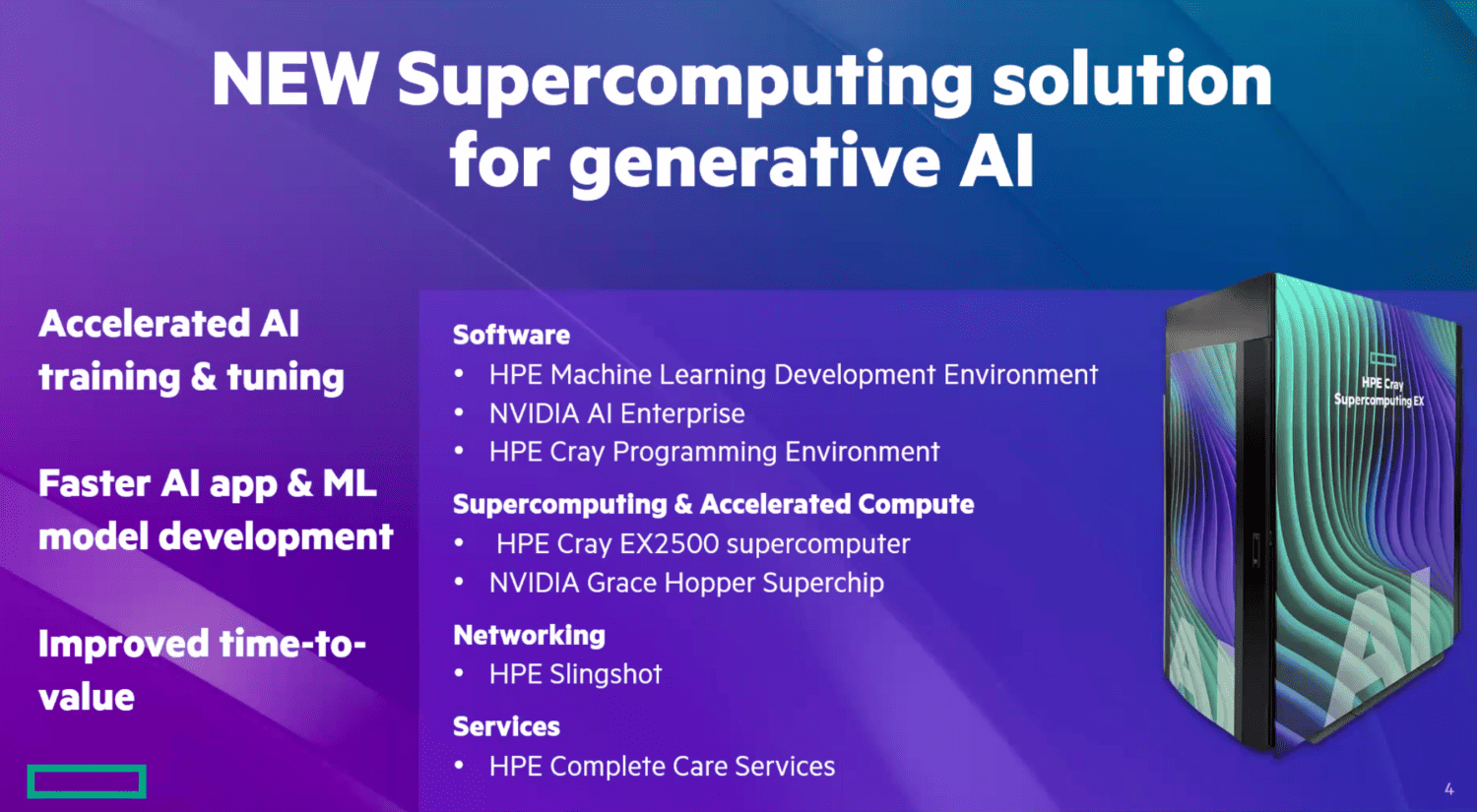

The new solution focuses on supercomputing, works out of the box and should enable organizations and institutions to get started with AI quickly.

Hewlett Packard Enterprise today announced a new system. As far as we have seen, it has not been given a proper name; it is referred to as a supercomputing solution. Specifically, it is a solution for deploying generative AI relatively quickly, and it is a complete one: HPE provides the full stack. That includes the storage, the network connections and the compute, as well as the software customers need to get started.

The included software, by the way, is not simply put “on top” of the hardware; it is integrated into the system. This mainly involves HPE Machine Learning Development Environment, but also HPE Cray Programming Environment. The former is designed to help customers build AI models faster through integrations with popular ML frameworks. The latter gives developers a set of tools for developing, porting, debugging and tuning their own code.

Cray EX2500 combined with Nvidia Grace Hopper (GH200) Superchip

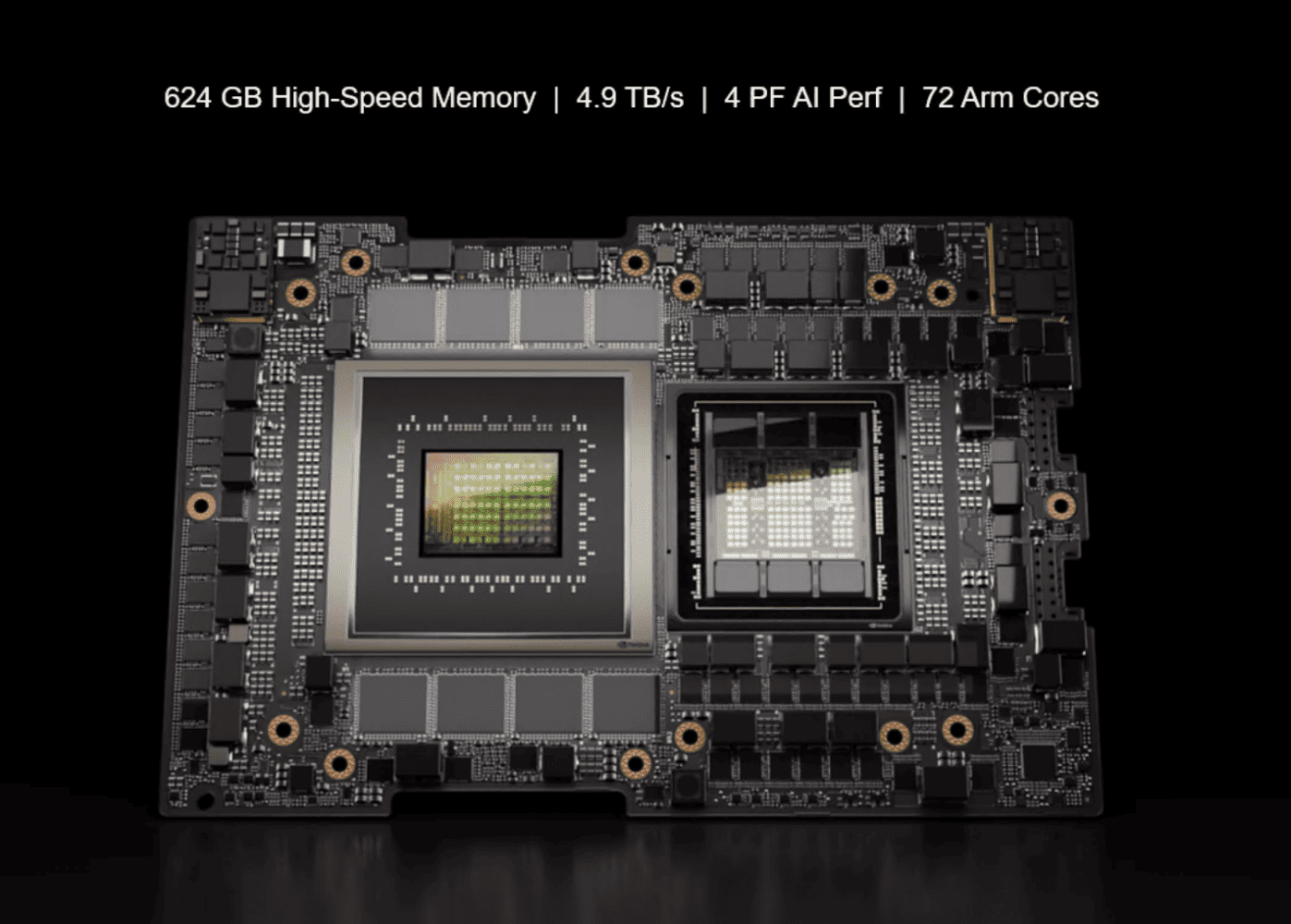

When we talk about “the system” above, we mean a specific configuration: an HPE Cray EX2500 combined with the Nvidia Grace Hopper Superchip (GH200), which pairs a CPU and a GPU. According to HPE, this makes it the first solution on the market to include the Nvidia GH200. Rounding it off is HPE Slingshot, an Ethernet-based interconnect developed with so-called exascale workloads in mind. The interconnect therefore has to be extremely fast, so that network performance does not hold back the performance of the system as a whole. In the past, the network has at times been exactly that bottleneck.

The collaboration between HPE and Nvidia is a serious step forward, not so much because of performance on generative AI models, but mainly because everything is offered as a complete solution. That makes training AI models more accessible. It will not be a cheap solution, but it promises to be significantly faster than systems in which Nvidia provides only the H100.

For now, Nvidia has not officially attached a price to the GH200 Superchip, and HPE does not mention a price for the new solution in its official communication either. It does say that the solution will be generally available in more than 30 countries starting in December 2023. Which countries those are has not yet been disclosed, at least not to us. The solution appears to be aimed mainly at research institutions and government agencies, but according to HPE also at large enterprises.