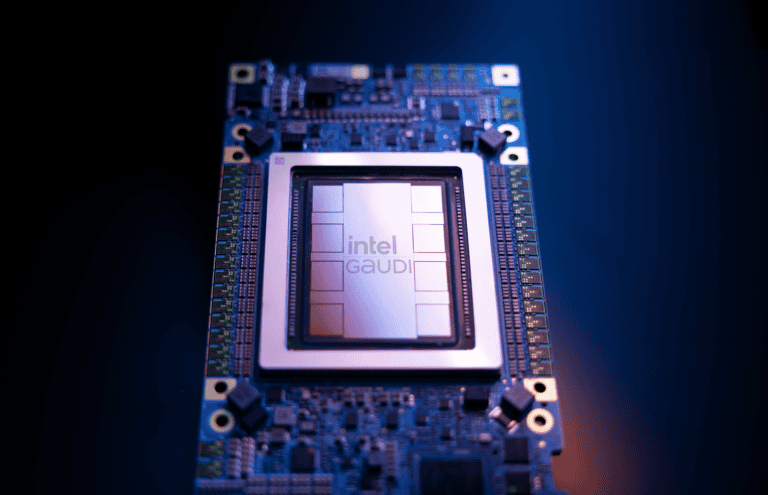

Intel has just unveiled its new Gaudi 3 AI chip. With this data center GPU, the company is positioning itself as the main alternative to Nvidia for completing AI workloads as quickly as possible.

Compared to the Gaudi 2, the latest model offers significant performance improvements despite having a similar architecture. AI computations run twice as fast at FP8 precision, while they can be run up to four times faster for more accurate BF16 workloads. Bandwidth for networking (2x) and memory (1.5x) has also increased substantially.

Cheaper alternative

These are promising numbers for the Gaudi 3, while its predecessor already had good credentials. Although Nvidia chips were much faster in AI benchmarks, the price tag of these GPUs was not commensurate with the performance gains. In short, Intel was already considered a relatively affordable AI alternative with shorter wait times for ordered servers. That is not expected to change now that Nvidia has the Blackwell series of GPUs and Intel is pushing Gaudi 3 forward this year.

Intel repeatedly compares Gaudi 3 to the Nvidia H100: a single Gaudi 3 trains Meta’s Llama 2 model 50 percent faster, the same performance difference as for inferencing. Compared to the H200, the upgraded model of the H100, the inferencing gain is 30 percent.

While the architecture has not changed, the chip process has. Instead of using a 7 nanometer node, Intel builds Gaudi 3 at 5 nanometers. Nevertheless, the piece of silicon is larger than before. Indeed, each Gaudi 3 consists of a pair of linked “tiles,” strikingly the same architectural choice as Nvidia made for its Blackwell series. The similarities go even further: the interconnect, just like in Nvidia’s offering, is fast enough to allow the GPUs to operate as one chip.

Surrounding the two chips is 128 GB of High-Bandwidth Memory (HBM2e). This allows larger AI models to run without performance degradation. The new 3.7 TB per second of bandwidth on it is much faster than Nvidia’s H100. However, it’s less than half the HBM3e memory bandwidth in the new B200 (8 TB/s).

Open versus proprietary

Part of Intel’s message around AI is not about hardware performance. The company advocates open standards to drive AI, as opposed to supporting proprietary tools. This is a jibe at Nvidia, which has built an entire software ecosystem around its proprietary CUDA. Translation layers such as ZLUDA allow workloads developed for CUDA to be run elsewhere. However, Nvidia is actively combating the use of such implementations.

Many developers are used to the CUDA way of working by now, relying on compatibility with roughly 90 percent of all GPUs in data centers. An open standard would make a move to competing products from Intel and AMD easier. Those using TensorFlow or PyTorch to run AI, though, can already move seamlessly to a Gaudi 3 chip.

Intel is paying close attention to the scalability of Gaudi 3. A single server node offers 14.7 Petaflops, while clusters with 1024 nodes have 15 Exaflops of theoretical performance to deliver. Connectivity is via Ethernet; Intel has no proprietary alternative to Nvidia’s InfiniBand technology for this. It means slower AI training, but broader compatibility for server farms. The debate over what kind of solution is desired is far from over.

Also read: Nvidia solidifies AI lead at GTC 2024 with Blackwell GPUs