Meta recently introduced its own processors, a data center design and a supercomputer for AI workloads. With this, the tech giant wants to get a piece of the pie when it comes to AI solutions and applications, but also maintain control.

Meta is feeling the pressure from other tech giants when it comes to AI developments and wants to catch up. Therefore, the tech giant recently made a number of important announcements, especially in the area of hardware. With these, Meta says it wants to gain and maintain control over its entire proprietary AI infrastructure stack.

Processors and data center design

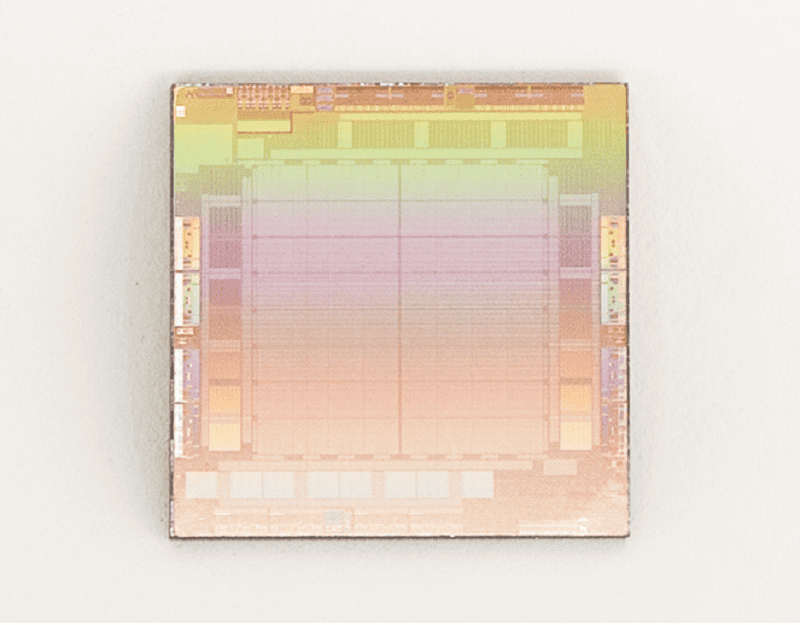

The most significant announcement is the arrival of its own processors for AI workloads. The Meta Training and Inference Accelerator (MTIA) focuses specifically on inference workloads and should offer more compute power, better performance, lower latency and greater efficiency for this purpose.

In addition, this ASIC accelerator is fully adapted to Meta’s own internal workloads. Meta plans to use the MTIA accelerator in conjunction with GPUs soon, most likely Nvidia GPUs.

The tech giant is also introducing a new data center design. This design should better accommodate the deployment of AI hardware for both training and inference. Think liquid-cooled AI hardware and a high-performance AI network connecting thousands of AI processors in large AI training clusters.

Supercomputer

Last but not least, Meta announced the arrival of the Research SuperCluster (RSC) AI supercomputer. This, according to the tech giant, is one of the fastest AI training supercomputers in the world. The supercomputer consists of 16,000 GPUs and a three-level Clos network fabric that provides bandwidth for 2,000 training systems.
