AWS is introducing Amazon EC2 Capacity Blocks for ML. The new service gives enterprises easy access to cloud-based GPU compute power for short AI workloads.

Companies that need compute power for short AI workloads can now get it from AWS with Amazon EC2 Capacity Blocks for ML. According to AWS, this spares them from buying long-term capacity themselves. That approach is often not cost-effective, because the hardware frequently sits underused between projects. In addition, customers cannot always get hold of the Nvidia GPUs their AI workloads require.

Introducing Amazon EC2 Capacity Blocks for ML

AWS’ new service is designed to solve these problems. It lets customers reserve capacity on hundreds of Nvidia H100 GPUs colocated in Amazon EC2 UltraClusters, which are built for high-performance ML workloads.

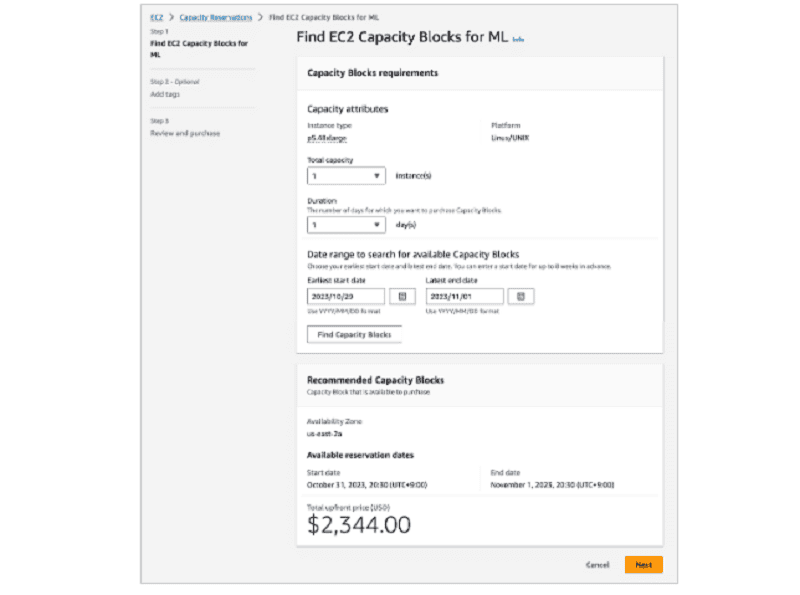

To reserve an Amazon EC2 Capacity Block for ML, users choose the desired cluster size, a future start date and the required duration. This gives them predictable access to the GPU resources their AI projects need, for exactly the period they need them. AWS compares the consumption model to booking a hotel room for a specific length of stay, except that the reservation is for GPU instances.
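The three choices described above can be modeled in a few lines. The Python sketch below is purely illustrative: `CapacityBlockRequest` and its fields are hypothetical names, not part of any AWS API, and the figure of eight H100 GPUs per instance is an assumption based on the p5.48xlarge instance type. It shows how cluster size, start date and duration together fix the end of the reservation and the total GPU hours, much as a check-in date and a number of nights fix a hotel stay.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumption: each instance in the block is a p5.48xlarge carrying 8 Nvidia H100 GPUs.
GPUS_PER_INSTANCE = 8


@dataclass(frozen=True)
class CapacityBlockRequest:
    """Hypothetical model of a reservation: cluster size, start date, duration."""

    instance_count: int  # desired cluster size, in instances
    start: datetime      # future start date of the block
    duration_days: int   # how long the block is held

    def __post_init__(self) -> None:
        # Reject reservations that could never be valid.
        if self.instance_count < 1:
            raise ValueError("cluster size must be at least one instance")
        if self.duration_days < 1:
            raise ValueError("duration must be at least one day")

    @property
    def end(self) -> datetime:
        # Like a hotel stay: check-out = check-in + number of nights.
        return self.start + timedelta(days=self.duration_days)

    @property
    def total_gpus(self) -> int:
        return self.instance_count * GPUS_PER_INSTANCE

    @property
    def gpu_hours(self) -> int:
        # GPU hours reserved (and paid for) over the whole block.
        return self.total_gpus * self.duration_days * 24

    def is_in_future(self, now: datetime) -> bool:
        # The start date must lie ahead of the moment the booking is made.
        return self.start > now
```

For example, four instances booked for two days from 15 January 2024 at 11:30 UTC would end on 17 January at 11:30 and cover 32 GPUs, or 1,536 GPU hours. Because the whole block is reserved up front, its size in GPU hours is known before any workload starts.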

According to experts, Amazon EC2 Capacity Blocks also resemble old-fashioned mainframe architecture. Mainframes were once deployed as “timeshare computers” that let hundreds of users run different workloads on the same machine simultaneously.

Availability and pricing

Existing AWS customers can now reserve GPU capacity through Amazon EC2 Capacity Blocks for ML using the AWS Management Console, CLI or SDK. The service is initially available in the AWS US East (Ohio) region, and AWS plans to add more regions in the near future. According to the published overview, however, the service does not come cheap.
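For the SDK route, the following sketch shows what a reservation might look like in Python. It assumes the boto3 EC2 client methods `describe_capacity_block_offerings` and `purchase_capacity_block`; check the parameter and response field names against the current boto3 documentation before relying on it. The helper names `find_cheapest_offering` and `reserve_offering` are hypothetical, and only the first page of offerings is examined. The client is passed in rather than created inside the functions, so the logic can be tried with a stub instead of a live AWS account.

```python
from datetime import datetime
from typing import Any, Optional


def find_cheapest_offering(
    ec2_client: Any,
    instance_type: str,
    instance_count: int,
    earliest_start: datetime,
    latest_end: datetime,
    duration_hours: int,
) -> Optional[dict]:
    """Hypothetical helper: return the offering with the lowest upfront fee, or None."""
    response = ec2_client.describe_capacity_block_offerings(
        InstanceType=instance_type,
        InstanceCount=instance_count,
        StartDateRange=earliest_start,
        EndDateRange=latest_end,
        CapacityDurationHours=duration_hours,
    )
    # Only the first page is examined; a full version would follow NextToken.
    offerings = response.get("CapacityBlockOfferings", [])
    if not offerings:
        return None
    # UpfrontFee is assumed to arrive as a string, so convert before comparing.
    return min(offerings, key=lambda offer: float(offer["UpfrontFee"]))


def reserve_offering(ec2_client: Any, offering: dict) -> str:
    """Hypothetical helper: purchase the chosen block and return its reservation ID."""
    result = ec2_client.purchase_capacity_block(
        CapacityBlockOfferingId=offering["CapacityBlockOfferingId"],
        InstancePlatform="Linux/UNIX",
    )
    return result["CapacityReservation"]["CapacityReservationId"]
```

With real credentials, the client would be created with `boto3.client("ec2", region_name="us-east-2")`, the region code for US East (Ohio). Picking the lowest upfront fee is only one possible selection rule; for some projects an earlier start date may matter more.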
