ChatGPT showed conversations belonging to other users in the conversation history of an unrelated user. This poses a security problem for those other users, as passwords, among other things, were leaked. The leak was reported to the editors of Ars Technica.

Update 31-01 (Erik van Klinken): According to OpenAI, the data was leaked because an account had been taken over. This news item has been updated to reflect this.

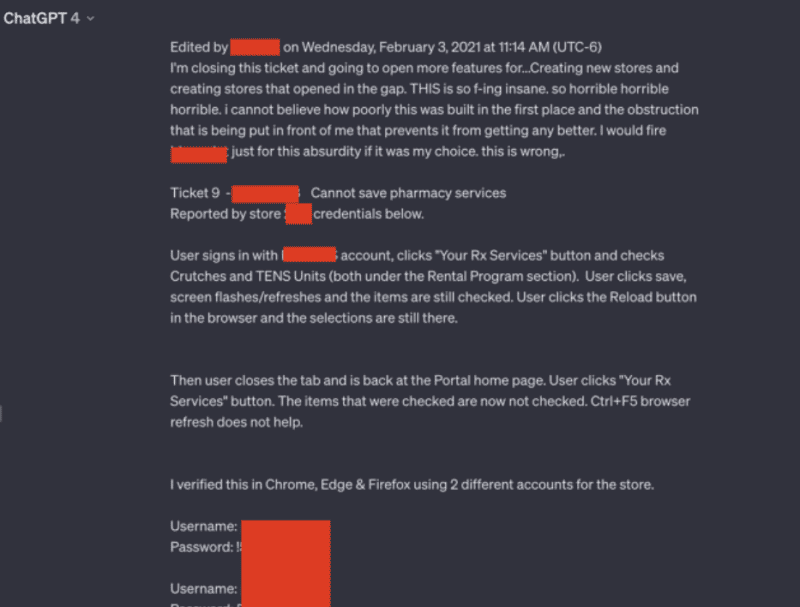

Ars Technica said it received screenshots from a reader showing that ChatGPT had placed conversations of other end users in the reader's conversation history. The conversations were fully visible and appeared to contain a large number of login credentials and other personal details of users who had no connection to the reader.

More specifically, they included usernames and passwords from a support system that was being troubleshot at the time. An employee of the company in question appears to have used the AI chatbot to resolve these issues.

In addition, conversations about a set-up presentation, details of unpublished research and a PHP script also appeared in the affected end user’s conversation history.

Previous incidents

This is not the first time user data has surfaced in the wrong place at ChatGPT. Back in March 2023, OpenAI took its AI chatbot offline after a bug made titles from an active user’s chat history visible to unrelated end users. The current incident differs because it involved a compromised account. It does show, however, that OpenAI doesn’t offer users the tools to prevent such takeovers, such as multi-factor authentication (MFA) and an added check on the IP location of a login.
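
To illustrate what such protections could look like, here is a minimal sketch of a login check that asks for a second factor when MFA is enabled or when a login comes from an unfamiliar location. It is not OpenAI's implementation; the `Account` class, the `login_decision` function and the country codes are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    """Hypothetical account record, for illustration only."""
    mfa_enabled: bool
    known_countries: set = field(default_factory=set)


def login_decision(account, password_ok, login_country):
    """Return 'deny', 'challenge' (ask for a second factor) or 'allow'."""
    if not password_ok:
        return "deny"
    # Ask for extra proof if MFA is on or the location is unfamiliar.
    if account.mfa_enabled or login_country not in account.known_countries:
        return "challenge"
    return "allow"


# Assumed example data: an account normally used from the Netherlands.
account = Account(mfa_enabled=False, known_countries={"NL"})
usual = login_decision(account, True, "NL")
unusual = login_decision(account, True, "US")
```

In this sketch a correct password from a familiar country is allowed straight through, while the same password from a new country triggers an extra verification step.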

In November 2023, researchers discovered that prompts in ChatGPT allowed them to scrape e-mail addresses, phone and fax numbers, physical addresses and other private information from data used to train the underlying LLM.

Problem as old as the internet

According to researchers, the problem of users’ data surfacing in other accounts is as old as the internet. They attribute it to the use of so-called “middleboxes”. These sit between front-end and back-end devices and cache data for better performance, including the login credentials of users who are logged in. When a mismatch occurs, the login data from one account can end up being served to another.
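
The caching mix-up described above can be sketched in a few lines. This is a simplified illustration, not a model of any real middlebox or of OpenAI's infrastructure; the class names, the `backend` function and the user names are all hypothetical. It assumes the mismatch comes from a cache key that ignores which user made the request.

```python
class NaiveCache:
    """Hypothetical cache that keys responses by request path only."""

    def __init__(self):
        self._store = {}

    def key(self, path, session_id):
        # Bug: the user's session is not part of the cache key.
        return path

    def fetch(self, path, session_id, backend):
        k = self.key(path, session_id)
        if k not in self._store:
            self._store[k] = backend(path, session_id)
        return self._store[k]


class SessionAwareCache(NaiveCache):
    """Same cache, but responses are stored per user session."""

    def key(self, path, session_id):
        return (path, session_id)


def backend(path, session_id):
    # Stand-in for the real back-end: returns user-specific data.
    return f"chat history of {session_id}"


naive = NaiveCache()
naive.fetch("/history", "alice", backend)
leaked = naive.fetch("/history", "bob", backend)  # bob gets alice's cached data

safe = SessionAwareCache()
safe.fetch("/history", "alice", backend)
ok = safe.fetch("/history", "bob", backend)  # bob gets his own data
```

The only difference between the two caches is the key: once the session is included, a cached response for one user can no longer be returned to another.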

OpenAI says it is investigating the recent incident in which other users’ chat histories surfaced.
