Microsoft’s AI chat tool Copilot, formerly Bing Chat, makes factual errors and gives misleading answers about recent elections in Europe.

That is the conclusion of an investigation by AlgorithmWatch. The human rights organization examined Bing Chat’s answers to questions about elections in Switzerland and the German states of Hessen and Bavaria between late August and early October of this year. These elections, which took place in October, were chosen because they were the first to be held after the introduction of Bing Chat.

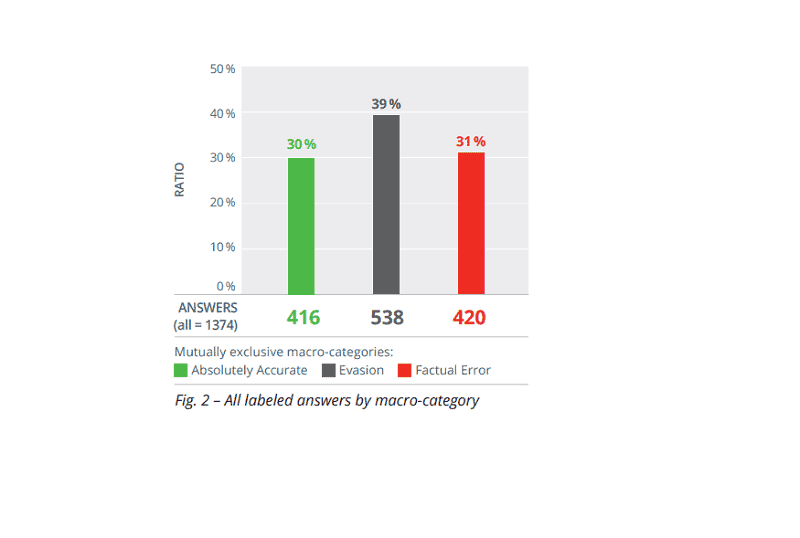

The results showed that as many as a third of the answers to the researchers’ questions about the individual elections contained factual errors. These included incorrect election dates, outdated candidate lists and even invented scandals surrounding candidates.

Security guidelines inconsistent

In addition, it became clear that the AI chatbot’s security guidelines were unevenly applied: the chatbot evaded answering in 40 per cent of cases during the study. Avoiding an answer can be a good thing given the limited capabilities of the underlying LLM (in this case, OpenAI’s GPT-4), but Microsoft’s chatbot did not do so consistently. It was often unable to answer even simple questions about the candidates in the election.

This inconsistency persisted throughout the study period, even though improvements were expected, for example as more information became available online. As a result, the risk of factually incorrect answers remained, which could damage the candidates themselves or the reputation of media outlets.

Systemic problem

AlgorithmWatch considers these persistent incorrect answers a significant systemic problem. The researchers notified Microsoft, but a month later they saw little to no improvement. They accuse Microsoft of being either unwilling or unable to fix the problem.

The human rights organization calls for regulation of such (generative) AI tools by the EU and national governments, especially if they are widely used.

Microsoft’s response

Speaking to The Verge, Microsoft says it has taken steps to improve its AI chatbot platforms, especially ahead of the upcoming US presidential election. More emphasis is now being placed on using trusted sources for Bing Chat, now Copilot. However, Microsoft stresses that users should always use their own good judgment when assessing the answers the AI chatbots give.
