Cloudera is introducing six new accelerators that customers can use alongside the company's data, analytics, and AI platform to speed up the development of machine learning (ML) projects. The goal is to shorten the time-to-value of these business AI applications.

Cloudera wants to help companies get more value from their business AI projects, faster. It does so by giving customers access to the latest AI techniques and use cases, which they can use to shape and support the integration of AI into their processes. Ultimately, this should produce more impactful results.

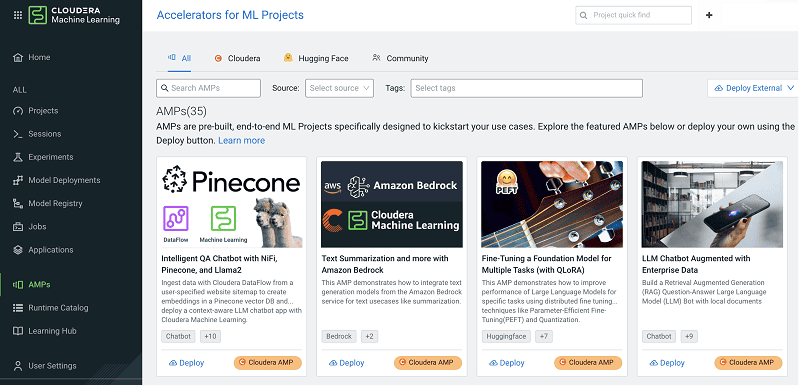

To this end, Cloudera is introducing several new Accelerators for ML Projects (AMPs). These AMPs are essentially complete ML-based projects that can be deployed easily from the Cloudera platform into companies’ own environments.

Best practices

An AMP includes best practices for tackling complex ML projects. These best practices consist of workflows that enable seamless transitions, no matter where customers want to deploy the examples or where their data resides.

Cloudera AMPs are fully open source and come with instructions for deployment in any environment.

Six new AMPs

The newly released AMPs are meant to make Cloudera AI’s capabilities more accessible, so that end users can adopt the platform faster while getting the most out of their own data and the AI output it generates.

The first of the new AMPs is Fine-Tuning Studio. This application is intended to give end users a complete “ecosystem” for managing, optimizing, and evaluating LLMs.

The RAG with Knowledge Graph AMP helps feed a Retrieval-Augmented Generation (RAG)-based application with knowledge graphs. The aim is to better capture relationships and context that are not easily accessible through vector stores alone.
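To illustrate the idea of supplementing retrieval with a knowledge graph, here is a minimal sketch in plain Python. It is not code from the Cloudera AMP. The triples, the entity names, and the helper functions `build_graph` and `graph_context` are all hypothetical and exist only to show how following relationships across several hops can surface context that a single similarity lookup might miss.

```python
from collections import defaultdict

# Made-up (subject, relation, object) triples standing in for a knowledge graph.
TRIPLES = [
    ("Invoice 17", "issued_to", "Acme"),
    ("Acme", "managed_by", "Dana"),
    ("Invoice 18", "issued_to", "Globex"),
]

def build_graph(triples):
    """Index triples by subject so outgoing edges can be looked up quickly."""
    graph = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((rel, obj))
    return graph

def graph_context(graph, entity, max_hops=2):
    """Collect facts reachable from `entity` within `max_hops` edges."""
    facts, frontier, seen = [], [entity], {entity}
    for _ in range(max_hops):
        next_frontier = []
        for node in frontier:
            for rel, obj in graph.get(node, []):
                facts.append(f"{node} {rel} {obj}")
                if obj not in seen:
                    seen.add(obj)
                    next_frontier.append(obj)
        frontier = next_frontier
    return facts

graph = build_graph(TRIPLES)
# Two hops link the invoice to the account manager, a relationship that
# no single stored fact states directly.
context = graph_context(graph, "Invoice 17")
```

In a RAG application, facts gathered this way would typically be added to the prompt next to the passages returned by the vector store.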

In addition, the PromptBrew AMP helps users create high-performing, reliable prompts through a user-friendly interface. Another newcomer, the Document Analysis with Cohere CommandR and FAISS AMP, demonstrates RAG with CommandR as the LLM and FAISS as the vector store.
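The pattern of pairing a vector store with an LLM can be sketched in a few lines of plain Python. This is an illustrative stand-in, not the AMP's code: it uses neither FAISS nor CommandR. The word-count `embed` function, the `ToyVectorStore` class, and `build_prompt` are assumptions made for this example. A real setup would use an embedding model, a FAISS index, and a call to the LLM with the assembled prompt.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyVectorStore:
    """Brute-force in-memory store (stand-in for a FAISS index)."""
    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((text, embed(text)))

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

def build_prompt(question, passages):
    """Assemble the retrieved passages and the question into one LLM prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

store = ToyVectorStore()
store.add("the warranty period is two years")
store.add("shipping takes five business days")

question = "how long is the warranty period"
top = store.search(question, k=1)
prompt = build_prompt(question, top)  # this string would be sent to the LLM
```

The brute-force search here compares the query against every stored document. A dedicated vector store is built for that same nearest-neighbor lookup at much larger scale.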

Finally, the Chat with Your Document AMP helps improve an LLM’s responses by drawing context from an internal knowledge base in which users can store their own documents. This AMP builds on the LLM Chatbot Augmented with Enterprise Data AMP that Cloudera released earlier.

The new AMPs are available for download from Cloudera’s GitHub.
