At its Advancing AI event in San Francisco, AMD is operating at full throttle. The company’s new announcements spanning Epyc, Instinct, Ryzen, and networking demonstrate that it has become an industry force that cannot be ignored.

Many details about “Turin,” AMD’s codename for its fifth-generation Epyc chips, were already public knowledge. The release focused on three key objectives: raw performance, efficiency, and comprehensive AI capabilities. AMD ties its entire portfolio together under the banner of “end-to-end AI”, but it all starts with the server chips, which now account for 34 percent of server CPU market revenue.

An Epyc for every use-case

As always, Epyc’s main rival is Intel. Intel’s current Xeon lineup consists of two types of server chips: one featuring exclusively performance cores, and another with only efficiency cores.

AMD is adopting a similar strategy, though with its own distinct approach. One variant offers 64 cores and reaches the coveted 5 GHz clock speed for the most demanding workloads. Customers can also choose between a version with 128 Zen 5 cores and one with 192 Zen 5c cores, which are slightly more efficient but less powerful. Power consumption varies significantly, reaching up to 500 watts for the top models.

Instinct MI325X and MI355X

On the GPU front, AMD today unveiled the MI325X, an evolution of the MI300X, which has so far been the strongest competitor to Nvidia’s H100. Just as the MI300X outperformed the H100 in AI inferencing, the MI325X continues this trend by surpassing the H200, according to AMD.

The GPU comes equipped with 256GB of HBM3E memory. Many customers will buy their MI325Xs in “Universal Baseboards” fitted with eight units. While inferencing performance is impressive, AMD acknowledges that it still has room for improvement in training. By AMD’s own account, training performance is roughly on par with Nvidia’s H200.

AMD also provided a glimpse of the upcoming Instinct MI355X, scheduled for release in the middle of next year. The specifications are impressive; the standout claim is that eight MI355Xs together can run an AI model with 4.2 trillion (!) parameters. For perspective, OpenAI’s GPT-4 reportedly contains 1.8 trillion parameters.

Ryzen AI PRO: the most powerful NPU (for what it’s worth)

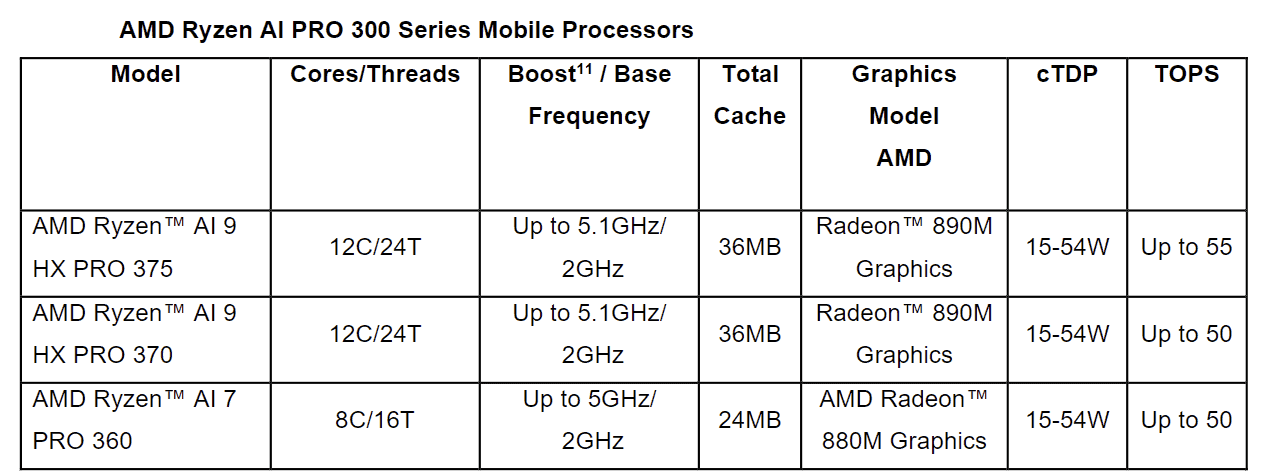

The Ryzen AI PRO 300 series focuses specifically on smaller AI tasks that run locally. Following the well-received Ryzen AI 300 series, AMD is now launching its business variant. Three laptop CPUs are being released this month.

While the term “AI PC” has finally shed its vague connotations, AMD is already moving on to a new concept: the “next-gen” AI PC. This label hinges on AI TOPS, the number of trillions of operations per second that a chip can perform. AMD’s chips achieve 50 or 55 TOPS, surpassing competitors Intel and Qualcomm.

How significant is this advantage? Intel downplays its importance, while AMD equates it to having a more powerful CPU or GPU. In AMD’s view, additional compute translates into faster calculations, resulting in shorter load times, quicker responses, and ultimately a more refined user experience.

Networking: new DPU and NIC

The networking aspect of the AI revolution has been largely overlooked, but it’s an area AMD has been increasingly focused on. While the complete story is more extensive than we can cover in this initial overview, the company’s ambitions are substantial.

AMD is now shipping a new DPU: the Pensando Salina 400. Its appeal lies not only in raw performance but also in its programmability, which allows network optimizations after deployment. AMD argues this will be crucial, especially as front-end networks face increasing demands from AI workloads.

At the same time, AMD is introducing the Pensando Pollara 400, the first NIC designed for the upcoming Ultra Ethernet Consortium 1.0 standard. We’ll explore AMD’s ambitious vision in this area in more detail later. For now, it’s worth noting that AMD claims the Pensando Pollara 400 is up to six times faster than previous NICs. It also features programmable congestion control for optimal network utilization.

Conclusion: a little bit of everything

When AMD announced its “Advancing AI” event, many details were kept under wraps. Given the significance of this series of launches, that discretion is surprising. Every segment has noteworthy developments: Epyc, Instinct, Ryzen, and networking.

Techzine is on site to gather more information about these announcements. One thing is already clear: AMD is making significant strides across all of its segments.
