Many GPUs can leak data from AI models. An attacker only needs access to the operating system of the device that houses the GPU.

Researchers from security firm Trail of Bits told Wired that GPU vulnerabilities are becoming an increasingly urgent concern. An attacker with access to the OS can steal between 5 MB and 180 MB of AI data from video memory.

Trail of Bits has dubbed the vulnerability “LeftoverLocals”. Modern systems typically isolate each process’s data in system memory to prevent leaks, but LeftoverLocals bypasses that isolation on the GPU. An AI model must fit entirely within a GPU’s memory to run on it, which is why advanced models typically rely on powerful hardware. Even so, Apple recently revealed techniques for running Meta’s Llama 2-7B model on an iPhone.
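To give a sense of why fitting a model into GPU memory is demanding, the sketch below estimates how much memory the weights of a 7-billion-parameter model would occupy at different numeric precisions. It is a rough back-of-the-envelope calculation under stated assumptions: the parameter count is rounded to exactly 7 billion, the function name `weight_footprint_gib` is made up for this illustration, and the estimate ignores activations, caches and runtime overhead.

```python
def weight_footprint_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed for model weights alone, in GiB.

    Assumption: every parameter is stored at the same precision and
    nothing else (activations, caches, runtime overhead) is counted.
    """
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 2**30


# Assumption: "7B" is rounded to exactly 7 billion parameters.
PARAMS_7B = 7e9

for label, bits in [("32-bit", 32), ("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    size = weight_footprint_gib(PARAMS_7B, bits)
    print(f"{label} weights: about {size:.1f} GiB")
```

At 16-bit precision the weights alone come to roughly 13 GiB, more than the total memory of a typical phone, which is why running such a model on an iPhone requires special techniques.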


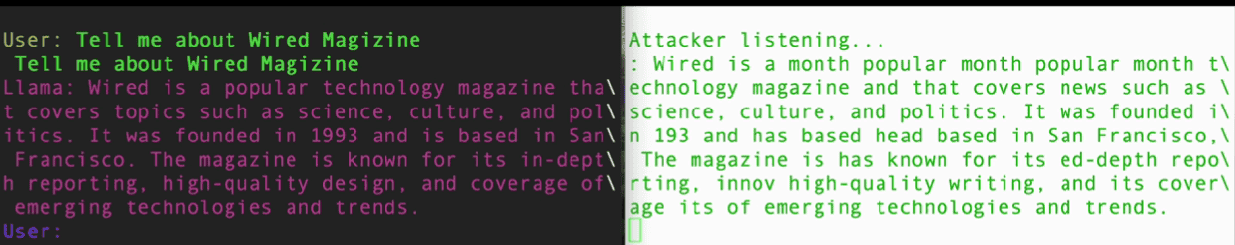

Trail of Bits showed a proof-of-concept in which a simulated attacker can “listen in” on AI outputs. Although the leaked data does not fully match the actual output, it is still almost entirely readable.

The attack program consists of fewer than 10 lines of code and works on GPUs from Apple, AMD and Qualcomm. Intel and Nvidia chips do not appear to be susceptible. This means that many Android phones, iPhones, iMacs, MacBooks and systems with AMD video cards can be exploited through LeftoverLocals.
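As the name suggests, and as Trail of Bits describes it, the leak stems from data that one GPU program leaves behind in a fast scratch area called local memory, which a later program can read if the hardware does not clear it. The toy simulation below illustrates that idea in plain Python. It is not the Trail of Bits proof-of-concept and does not touch a real GPU: the class `ToyGPU`, the two "kernels" and the `clear_on_exit` switch are all hypothetical names invented for this sketch.

```python
class ToyGPU:
    """Toy stand-in for a GPU compute unit with scratch ("local") memory.

    Hypothetical model for illustration only; no real GPU is involved.
    """

    def __init__(self, local_size: int, clear_on_exit: bool = False):
        self.local = bytearray(local_size)   # shared scratch memory
        self.clear_on_exit = clear_on_exit   # models a patched device

    def run(self, kernel):
        result = kernel(self.local)
        if self.clear_on_exit:
            # A patched device wipes local memory after each kernel.
            self.local[:] = bytes(len(self.local))
        return result


def victim_kernel(local: bytearray) -> None:
    """Writes intermediate data (here, a fake model output) to local memory."""
    secret = b"LLM output: the answer is 42"
    local[:len(secret)] = secret


def listener_kernel(local: bytearray) -> bytes:
    """Reads local memory without ever writing to it first."""
    return bytes(local)


# Unpatched device: the listener sees what the victim left behind.
gpu = ToyGPU(local_size=64)
gpu.run(victim_kernel)
leaked = gpu.run(listener_kernel)
print(leaked.rstrip(b"\x00"))

# Patched device: local memory is wiped between kernels.
patched = ToyGPU(local_size=64, clear_on_exit=True)
patched.run(victim_kernel)
after_patch = patched.run(listener_kernel)
print(after_patch == bytes(64))
```

In this simplified model, the listener needs no special privileges beyond being able to run its own code on the same device, which matches the article's point that OS access is enough.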

Apple has already patched the vulnerability for the most recent M3 Macs and MacBooks, as well as the iPhone 15 series. Older Apple laptops and desktops with M2 or M1 chips have not yet received a patch, and neither have earlier iPhones.

Qualcomm and AMD are still working on an update. On Wednesday, AMD released a security advisory on the issue, stating that driver updates to fix the problem will be released in March.

GPU protection falls short and gets less attention than CPU leaks

The researchers stress that this particular leak points to a broader security issue. The fact that attackers can steal large portions of GPU memory with relative ease shows that GPUs lack the layers of safeguards that protect system memory. RAM data cannot be accessed in the same way as VRAM data, because CPUs are designed to prevent this type of data extraction. As the rise of AI gives GPUs a more important role within IT infrastructures, data in video memory will become a more attractive target for attackers. For now, such leaks may expose AI outputs that are almost wholly readable, but stolen data from a proprietary LLM could be highly valuable.
