On Wednesday, automatic transcription service Otter announced a new AI-powered chatbot. The company has designed it to revolutionize meeting interactions and foster collaboration among participants.

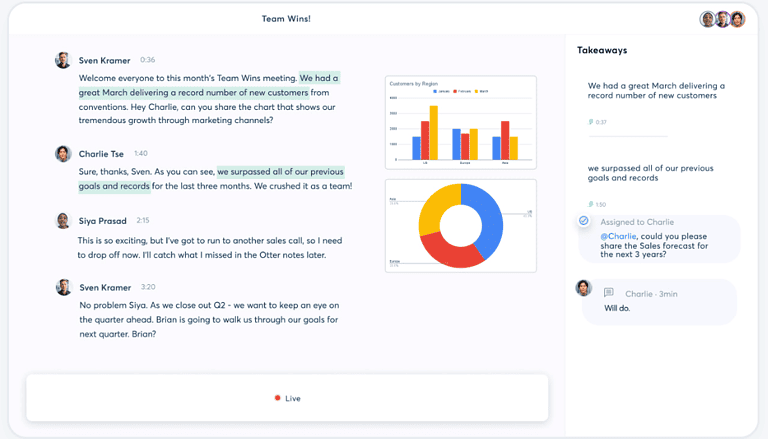

The Otter AI Chat aims to assist users by providing a platform to ask questions during and after meetings, generating follow-up emails with actionable points, and offering contextual answers based on the meeting discussions.

Users who join late can catch up on missed content by simply asking, “I’m late to the meeting! What did I miss?” The aim is to ensure that no one feels left out or uninformed.

The selling point

Unlike other chatbots that focus solely on one-on-one conversations, Otter’s can serve multiple participants at once. Team members can also tag each other in the chat for clarification or action items. Previously, users could do this only through comments on the transcript.

Zoom announced a comparable feature earlier this year, which provides meeting summaries for latecomers. Otter’s chatbot, however, aims to go further by using AI to deliver responses that fit the context of the conversation.

It remains unclear whether Otter trained the chatbot on the vast amount of transcribed data the company holds. Otter currently transcribes over 1 million spoken words per minute.

The company assures users that chat data won’t be shared with third parties. The feature, which will roll out to users in the coming days, follows Otter’s introduction of the OtterPilot bot in February. That bot automatically emailed meeting summaries to participants and included relevant images of slides in the meeting notes and transcription.

By introducing the Otter AI Chat, the company aims to add an intelligence layer to its existing AI-powered notetaking feature, enabling users to make more complex inquiries.

AI-generated meeting notes and summaries have become increasingly common in meeting-related tools, thanks to advancements in large language models (LLMs).
