Nvidia has introduced Chat with RTX, a free tool that lets PC users build a personalized chatbot. It requires a Windows PC with an Nvidia GeForce RTX 30 Series GPU or a more recent Nvidia GPU.

Chat with RTX lets users build a chatbot that runs on their own PC rather than in a cloud environment. The chatbot draws on content stored on the PC, giving users personal AI functionality.

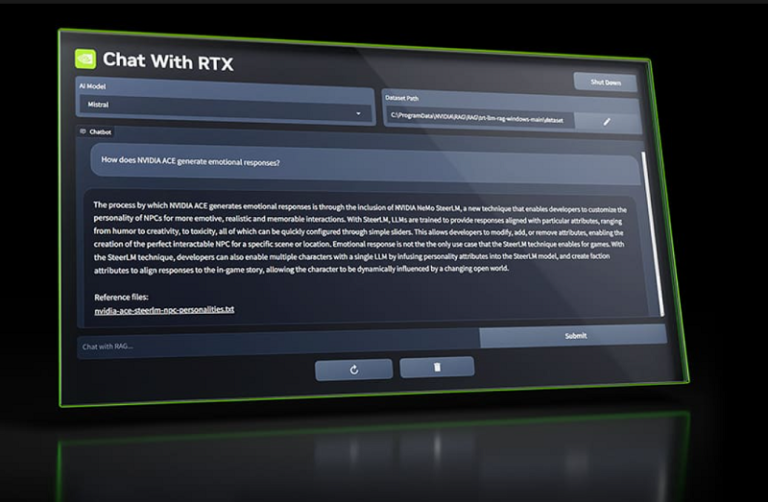

The tool connects local files as a dataset to open-source LLMs, such as Mistral or Llama-2. Users can then get quick, contextually relevant answers to their prompts.

Supported file formats include .txt, .pdf, .doc/.docx and .xml. Users can also provide the URLs of YouTube videos and playlists. The tool then adds the content of those videos to the chatbot's dataset, so it can be used for contextual search queries.

Benefits of a local AI chatbot

According to Nvidia, creating and using a personalized AI chatbot offers benefits such as letting users control which content the generative AI assistant draws on for its responses.

In addition, the chatbot learns only from its private dialogue with the user, so the dialogues are not used for optimization, as happens with cloud-based AI chatbots. This improves privacy. A personalized, local AI chatbot also responds faster because there is no round trip to a cloud server.

Under the hood, Chat with RTX uses retrieval-augmented generation (RAG), the Nvidia TensorRT-LLM software and Nvidia RTX acceleration, among other technologies, to provide generative AI functionality.
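To make the RAG idea concrete, the following is a minimal toy sketch of the retrieval step: it scores a handful of local documents against a question and places the best match in the prompt as context. It is not Nvidia's implementation. The bag-of-words cosine similarity, the function names and the sample documents are all assumptions chosen for illustration, and a real system would use learned embeddings and pass the prompt to an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return the names of up to top_k documents that overlap with the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(text))), name)
        for name, text in documents.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query at all.
    return [name for score, name in scored[:top_k] if score > 0]


def build_prompt(query, documents, top_k=1):
    """Prepend the retrieved passages to the question as context for an LLM."""
    names = retrieve(query, documents, top_k)
    context = "\n".join(f"[{name}] {documents[name]}" for name in names)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical stand-ins for local files; a real tool would read them from disk.
documents = {
    "trip.txt": "We booked a hotel in Lisbon for the first week of June.",
    "recipe.txt": "The pancake recipe needs two eggs, flour and milk.",
}

print(build_prompt("Which hotel did we book in Lisbon?", documents))
```

The resulting prompt would then be sent to a locally running model. Because only the retrieved passage is included, the answer is grounded in the user's own files rather than in the model's general training data.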

The tool runs only on Windows 11-based desktops or laptops. These must have at least an Nvidia GeForce RTX 30 Series GPU or a more recent GPU, or an Nvidia RTX Ampere or Ada Generation GPU.

The system also needs at least 8 GB of VRAM, 16 GB of RAM and driver version 535.11 or later.
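The numeric requirements can be expressed as a small check. This is only a sketch: the function names are hypothetical, the values are passed in by hand rather than detected from the system, and the operating system and GPU generation are not checked. It also assumes driver versions are dot-separated integers such as 535.11.

```python
def parse_version(version):
    """Turn a string such as '535.11' into (535, 11) for numeric comparison."""
    return tuple(int(part) for part in version.split("."))


def unmet_requirements(vram_gb, ram_gb, driver_version):
    """Return a list of the stated requirements that the given values fail."""
    problems = []
    if vram_gb < 8:
        problems.append("at least 8 GB of VRAM")
    if ram_gb < 16:
        problems.append("at least 16 GB of RAM")
    if parse_version(driver_version) < parse_version("535.11"):
        problems.append("driver version 535.11 or later")
    return problems


# Example values for a hypothetical laptop that falls short on VRAM and driver.
for problem in unmet_requirements(vram_gb=6, ram_gb=16, driver_version="531.79"):
    print("Missing:", problem)
```

An empty list means the three numeric thresholds are met.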

Develop your own applications

Chat with RTX users can also use the tool as a starting point for developing their own AI applications, with the help of the TensorRT-LLM RAG developer reference project available on GitHub. This reference project lets developers build and deploy their own RAG-based applications for RTX, accelerated by Nvidia's TensorRT-LLM.

Chat with RTX is now available for free download.
