A deployment blueprint for Microsoft 365 Copilot is meant to limit how much sensitive business information is shared with the AI assistant.

This week’s Microsoft Ignite conference focused on the challenge of internal oversharing. Sharing too much sensitive data poses significant security risks and can conflict with corporate guidelines, because it increases the likelihood of data breaches or misuse. Moreover, when sensitive information is shared with generative AI, there is a risk that it could end up outside the organization.

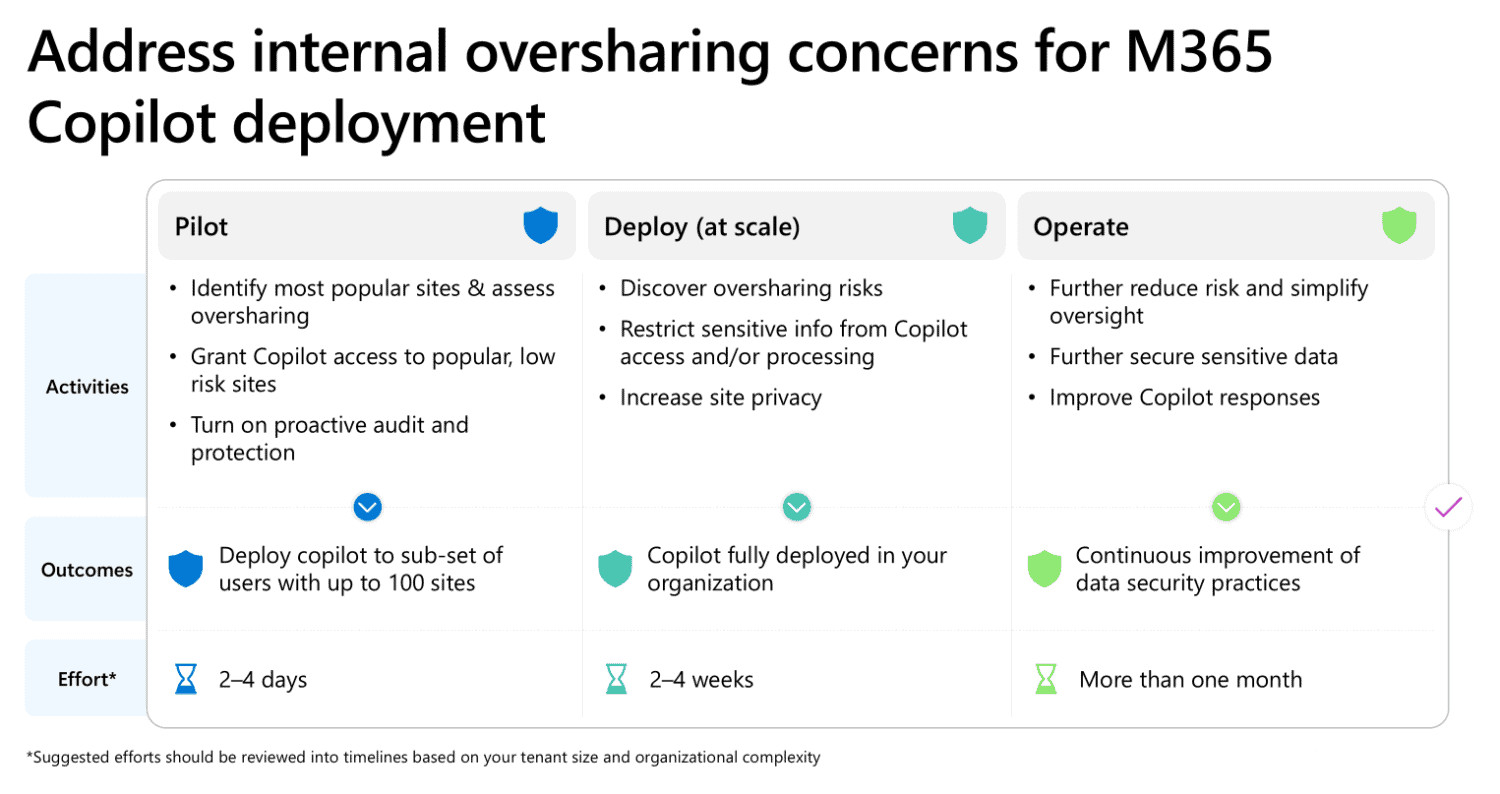

Microsoft’s new blueprint aims to counter oversharing by guiding organizations through the pilot, implementation, and operational phases of a Copilot deployment.

The three phases

In the pilot phase, companies follow steps to set up Microsoft 365 Copilot. The blueprint recommends identifying the most used and potentially at-risk SharePoint sites. Based on this analysis, companies can determine which sites Copilot may or may not access during the testing period. This process is carried out with a limited group of users so that adjustments can be made easily.
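The triage described for the pilot phase can be illustrated with a minimal sketch. It does not use any Microsoft API or tooling; the `Site` fields, the `plan_pilot` helper, the risk rule, and the example URLs are all hypothetical and serve only to show the idea of ranking sites by usage and then deciding which ones Copilot may access during testing.

```python
from dataclasses import dataclass


@dataclass
class Site:
    url: str
    monthly_visits: int           # hypothetical usage metric
    shared_with_everyone: bool    # hypothetical oversharing signal
    has_sensitive_content: bool   # hypothetical sensitivity flag


def plan_pilot(sites, top_n=3):
    """Split the top_n most visited sites into allowed and restricted lists."""
    most_used = sorted(sites, key=lambda s: s.monthly_visits, reverse=True)[:top_n]
    allowed, restricted = [], []
    for site in most_used:
        # Assumed rule: a site is at risk when it is broadly shared
        # and also holds sensitive content.
        at_risk = site.shared_with_everyone and site.has_sensitive_content
        (restricted if at_risk else allowed).append(site.url)
    return allowed, restricted


# Made-up inventory for illustration only.
sites = [
    Site("https://contoso.example/sites/hr", 900, True, True),
    Site("https://contoso.example/sites/wiki", 1500, True, False),
    Site("https://contoso.example/sites/finance", 700, False, True),
    Site("https://contoso.example/sites/archive", 20, True, True),
]

allowed, restricted = plan_pilot(sites)
print("Allowed during pilot:", allowed)
print("Restricted during pilot:", restricted)
```

In practice, the usage and risk signals would come from the organization's own reporting, and the rule for what counts as at-risk would follow its corporate guidelines rather than the simple condition assumed here.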

In the implementation phase, Copilot is rolled out organization-wide. This phase focuses primarily on identifying oversharing risks and restricting access to sensitive information, which helps prevent inadvertent data sharing.

Finally, the operational phase focuses on generating regular oversharing reports. These reports allow organizations to identify potential risks and take corrective action.