NetApp announces new lines of core products in its portfolio a few times a year. Earlier this year we reported on the new AFF A-Series, and last year NetApp released the affordable C-Series for both AFF and ASA. So before we traveled to NetApp Insight this year, we were expecting a new iteration of the ASA A-Series, which would once again complete NetApp's offering. We were not disappointed. The ASA A70, ASA A90 and ASA A1K are coming. And that's not all. Read all about NetApp's (big) plans for the modern data infrastructure in this article.

The new ASA A-Series consists of the block storage-optimized counterparts of the arrays with the same model numbers in the AFF line, NetApp's unified offering. Both are aimed at the upper end of the market. AFF stands for All-Flash FAS (Fabric-Attached Storage), ASA for All-Flash SAN Array. That makes it immediately clear that the new ASA A-Series targets SAN workloads where performance is crucial: so-called mission-critical workloads such as VMware, Oracle, SAP and SQL applications. ASA is essentially a variant of AFF, in the sense that it is built on the AFF platform.

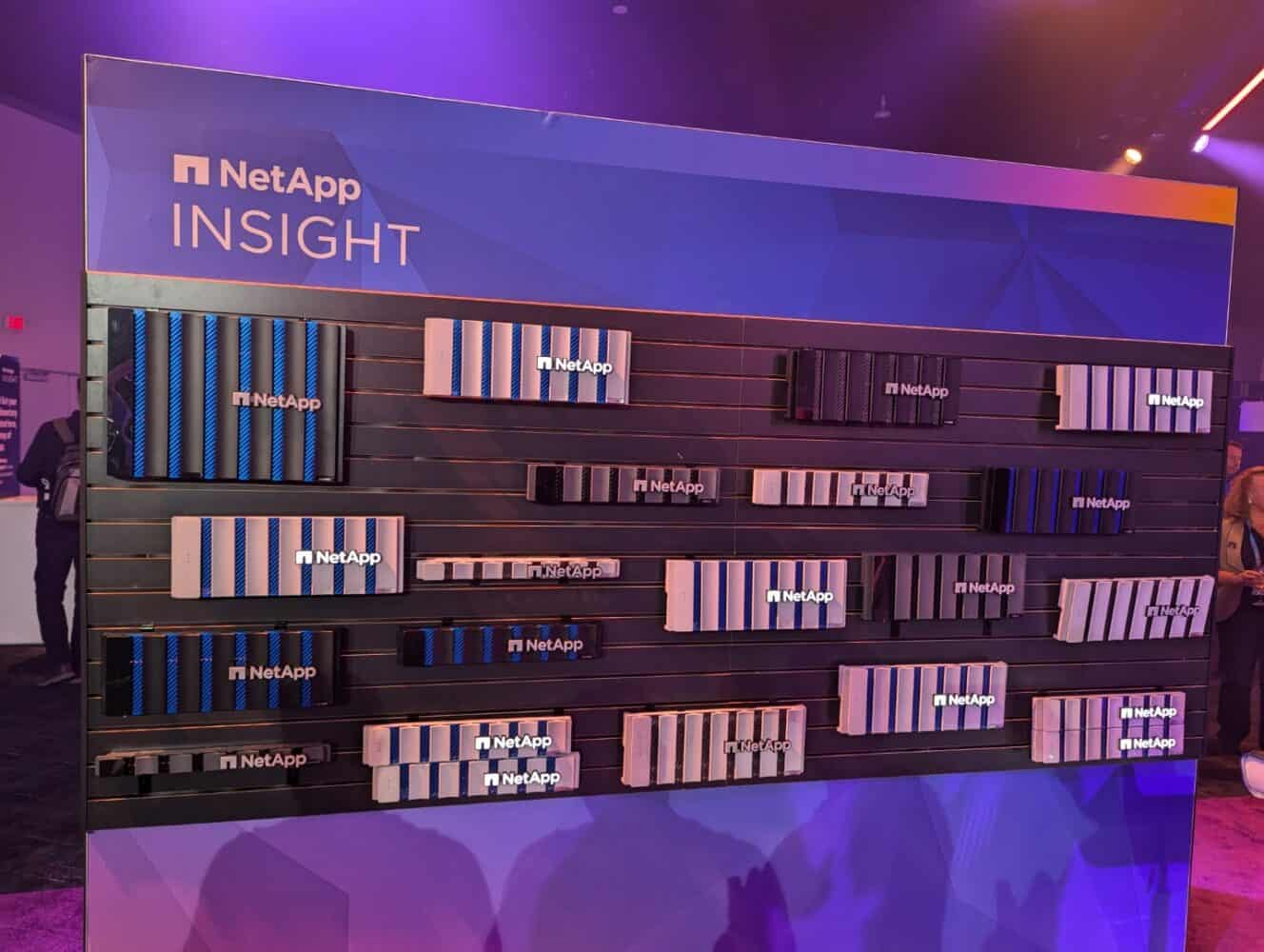

NetApp ASA A70, ASA A90 and ASA A1K

Obviously, the new models in the ASA A-Series outperform their predecessors. These are not small steps, either: in some cases performance doubles. Furthermore, NetApp guarantees six nines (99.9999%) of availability and promises a lower purchase price and a better TCO (Total Cost of Ownership) than organizations can get from the competition. NetApp will undoubtedly have given itself the benefit of the doubt here and there in its calculations, but it is clear that the company is marketing a very competitively priced product.

Finally, the new ASA A-Series should also be extremely easy to use. According to NetApp, customers often had to make compromises in the past, but that is no longer necessary. Block storage used to be simple to operate but often lacked features that were available in unified offerings. That is no longer the case. With this new line, NetApp promises storage that remains easy to use while offering all the features organizations expect from high-end storage.

To get a good idea of how the portfolio has evolved over the last year and a half, we recommend you also take a look at the articles below:

- NetApp makes all-flash and AI accessible to more organizations

- NetApp refreshes AFF A Series: all-flash for AI

One platform, one architecture, no silos

The introduction of the ASA series last year (both the A and C lines) was definitely a breakthrough for NetApp. It brings storage optimized for block/SAN workloads into the same realm as AFF (and FAS), which can handle file and object data in addition to block. ASA may be explicitly optimized for block workloads, but it uses the same APIs, the same OS (ONTAP) and the same management environment as the other lines.

The fact that the C-Series offers a more affordable variant of both "types" (AFF and ASA) further completes the picture. According to Sandeep Singh, SVP and GM Enterprise Storage at NetApp, the C-Series has been a huge success. He calls it "the fastest ramping products in NetApp's history". That history spans more than three decades, so that says something.

Beyond responding to market demand for affordable all-flash storage and for storage specifically targeted at block workloads, something else stands out: it all runs on the same platform and architecture. That may not seem very interesting at first glance, but it actually is, says Harv Bhela, NetApp's Chief Product Officer. "There are no more silos for customers," he says. Silos are rather disastrous when setting up data pipelines, especially now with the rise of (Gen)AI. Multiple silos generally mean that data has to be moved, and moving data takes time and resources, which is not what you want in an ideal scenario.

What are the advantages of a single platform?

With a single architecture on a single platform, it is possible to run all sorts of workloads, in multiple tiers, without much else to worry about. "You can meet all of your needs with NetApp," Bhela concludes. That may be a bridge too far for us, but we understand what he means. In a year and a half, NetApp has laid out a clear portfolio, with ONTAP as the OS that lets you get essentially everything you need from different types of storage, anywhere.

Mind you, NetApp is not talking about the E and EF lines when it talks about offering everything on a single platform. That in itself is not surprising, as those lines run a different OS and therefore do not fit into this picture. When asked, Singh says those lines are still on the market and doing just fine, and NetApp is certainly not going to drop them from its offering for the time being. The same goes for StorageGRID, NetApp's object store. How these lines will eventually relate to the ONTAP-driven offerings is something the company is keeping somewhat vague for now.

Much more than just hardware

With the exception of the E, EF and StorageGRID lines, NetApp's plans around AFF, ASA and FAS are clear. Essentially, they should all become "flavors" that customers can purchase and deploy according to their needs. Customers can also buy them in several ways: directly from NetApp or through a partner, either paying for everything upfront or through Keystone, NetApp's STaaS (Storage-as-a-Service) offering. Keystone has done very well over the past 12 months, Bhela reports. That's not really a surprise: a flexible consumption model like Keystone fits the company's "one platform, one architecture" narrative very well.

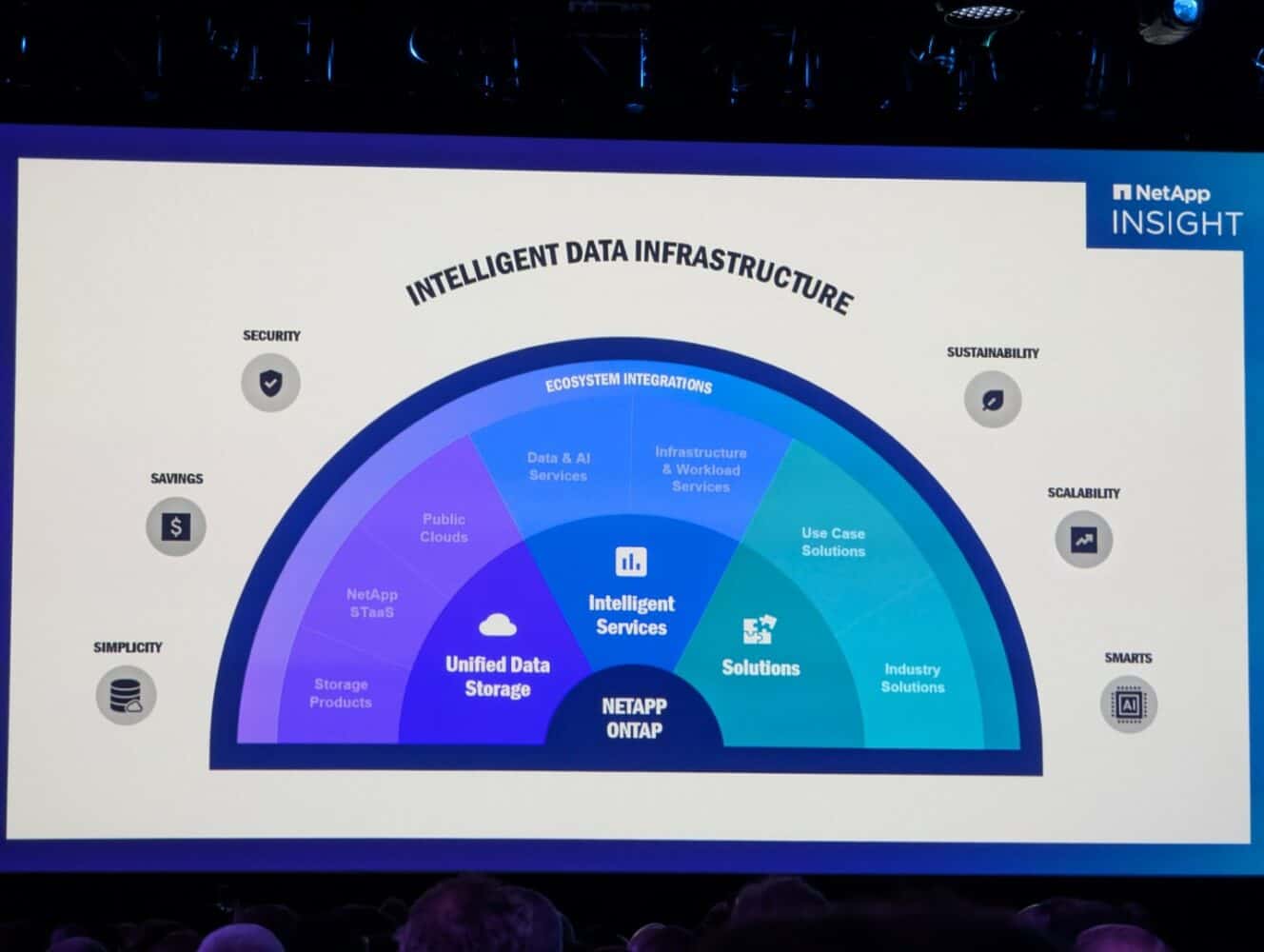

However, it is also important to look beyond the hardware when it comes to NetApp's platform. The storage arrays are the foundation on which NetApp builds everything else. The more stable that foundation is, the more capabilities NetApp can offer centrally on top of it.

When NetApp adds something new to the platform, it can immediately become available across the entire portfolio. In Bhela's words, "If a customer is using the platform, they know that NetApp is going to keep adding value for them." That value can take the form of new series within specific lines that are easy to incorporate into existing environments. It also includes the expansion into the three largest hyperscalers, which NetApp has put a lot of time and effort into, and the anti-ransomware protection offering it is making generally available today.

Intelligent Data Infrastructure for AI

All in all, NetApp seems to be in good shape, especially after today's update to the ASA A-Series. The speed at which the company has built out its portfolio over the last year and a half, together with its deep partnerships with Microsoft Azure (Azure NetApp Files), Google Cloud (Google Cloud NetApp Volumes) and Amazon Web Services (Amazon FSx for NetApp ONTAP), means that its hybrid data foundation is more or less complete (at least for now). According to Bhela, "Nobody else in the storage industry has delivered it more than NetApp." Of course, we would say the same if we worked for NetApp. Still, it is undeniable that NetApp seems to have the momentum it needs right now. "NetApp's on a roll," as Bhela succinctly sums it up.

The next step in NetApp's story is the so-called Intelligent Data Infrastructure. In the age of AI, this is crucial for organizations that want to stay in the game. That is why NetApp will keep building the necessary Intelligent Services on top of the services that already exist. It has already placed the CloudOps business unit under the Intelligent Services umbrella, for example.

ONTAP remains the glue and will become even more important

Developments around ONTAP, the glue that holds NetApp's offerings together, are not standing still either. If all goes well, ONTAP will soon receive Nvidia DGX SuperPOD AI infrastructure certification for the AFF A90 arrays, which will bring that AI infrastructure into the Intelligent Data Infrastructure as well. NetApp is also moving (even) further toward a disaggregated architecture for ONTAP environments, in which storage and compute are decoupled from each other. That should make the available storage more accessible for tasks such as training LLMs.

One last thing that caught our eye in this week's announcements at NetApp Insight is that the company has created a global metadata namespace. This should make it possible to manage data securely and compliantly across a hybrid multi-cloud environment. Global namespaces are becoming increasingly popular, and much newer players such as Hammerspace and VAST Data are getting involved as well. That will be an interesting development to follow in the near future. As far as we know, NetApp already has something resembling a global namespace for StorageGRID, so perhaps it can make quick strides here as well.

NetApp is looking ahead. That much is clear from NetApp Insight 2024 and the conversations we are having here. It would be odd if it didn't at its own event, of course. Still, we sense more conviction in this year's conversations than in those of previous years. Our impression is that the company as a whole has made significant strides in the right direction, and NetApp's narrative also makes sense as far as we are concerned. Now it's a matter of executing the plans in the right way. We will certainly keep an eye on that over the next few years.

We have already provided quite a bit of information in this article about what NetApp is working on. Here are a few additional sources, for those who want to read up a bit more:

- NetApp BlueXP is a big step towards true hybrid cloud data management

- NetApp and AWS strengthen partnership to ease GenAI adoption